Similarity-based image search, also known as content-based image retrieval, has historically been a challenging computer vision task. The problem is especially difficult for visual art, because it is less obvious how “similarity” should be defined for art and who should set that standard.

For example, when I upload a photo of a wall mural featuring a face among colorful rectangles and bold lines (see images below) to Google to find similar images, Google returns an array of options under its “Visually similar images” section. Most are wall murals that prominently depict a face; others are paintings featuring a face. Together, the results span a wide variety of color schemes and stylistic textures.

Right: Screenshot from Google of what Google considers to be similar images to this photo.

A 2018 paper by Geirhos et al. [1] revealed that convolutional neural networks (CNNs) trained on ImageNet are biased towards an image’s texture rather than its shape. To force a CNN to learn a shape-based representation instead, the researchers applied style transfer to ImageNet to create a “Stylized-ImageNet” dataset.

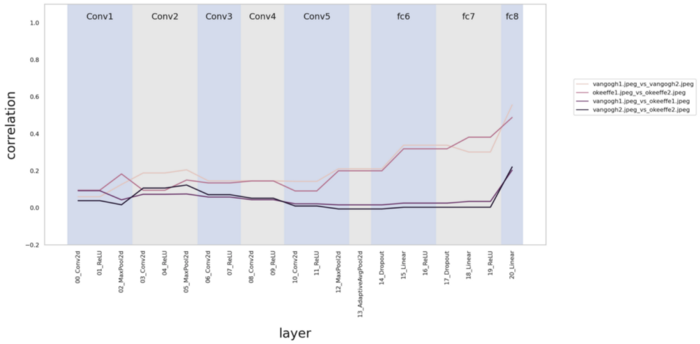

I decided to build on their findings by investigating how a texture-biased and a shape-biased model respond to pairs of art pieces from the same artistic style. In comparing pairs of paintings by Vincent van Gogh and Georgia O’Keeffe, two artists with very distinctive styles, I found that the texture-biased, ImageNet-trained AlexNet model did a far better job of correlating pieces by the same artist (Figure 1) than the shape-biased, Stylized-ImageNet-trained AlexNet model (Figure 2).
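The post does not spell out how the model “correlated” two paintings. One common approach, sketched below under that assumption, is to take each painting’s activations from the same layer of the network and compute the Pearson correlation between the two flattened feature vectors; a higher value means the model represents the pair more similarly. The function names are hypothetical, and extracting the AlexNet features themselves is not shown.

```python
import numpy as np


def feature_correlation(features_a, features_b):
    """Pearson correlation between two feature maps, flattened to vectors.

    Assumes both inputs come from the same network layer (same number of elements).
    """
    a = np.ravel(np.asarray(features_a, dtype=float))
    b = np.ravel(np.asarray(features_b, dtype=float))
    if a.shape != b.shape:
        raise ValueError("feature maps must have the same number of elements")
    # Center each vector so the score ignores the overall activation level
    a = a - a.mean()
    b = b - b.mean()
    return float(a @ b / (np.linalg.norm(a) * np.linalg.norm(b)))


def mean_pair_correlation(pairs):
    """Average correlation over (features_a, features_b) pairs, e.g. all
    same-artist pairs, to summarize how one model treats those pairs."""
    return float(np.mean([feature_correlation(a, b) for a, b in pairs]))
```

Running `mean_pair_correlation` on the same-artist pairs once with features from each model yields one summary number per model, which is one way to make a “far better job of correlating” comparison concrete.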

My main conclusion from this experiment was that, if we treat two art pieces being by the same artist as a criterion of similarity, then stylistic texture mattered far more than shape representations when comparing visual art. However, evaluating “style” seems to be a highly subjective, perceptual process. This finding made me even more curious about which technical methods could combine human and quantitative judgment in determining artistic similarity.

A 2011 paper by Hughes et al. [2] combined quantitative and psychological research and concluded that pairing human perceptual information with higher-order statistical representations of art was highly effective for similarity-based search of art. Human perception of artistic style is generally grounded in the quality of elements such as lines, shading, and color, which are hard to capture using low-order statistics. The researchers therefore turned to higher-order spatial statistics and applied them to comparing visual art. They then conducted psychophysical experiments that asked participants to judge the similarity between pairs of art pieces, and used these results in tandem with their predictive models.
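As a toy illustration of why low-order statistics can miss the structure of strokes and edges (this is not the measure Hughes et al. used), the sketch below builds two synthetic signals with identical mean and variance: a dense one, where every value is equally strong, and a sparse one, like a few strong strokes on a blank ground. Only the fourth-order statistic, kurtosis, tells them apart. The function name and signals are hypothetical.

```python
import numpy as np


def moments(values):
    """Return (mean, variance, kurtosis) of a set of values.

    Mean and variance are first- and second-order statistics; kurtosis is a
    fourth-order statistic that grows when most values are near zero and a
    few are large (a sparse, heavy-tailed distribution).
    """
    v = np.ravel(np.asarray(values, dtype=float))
    mean = v.mean()
    var = v.var()
    kurtosis = np.mean((v - mean) ** 4) / var**2
    return float(mean), float(var), float(kurtosis)


# Dense signal: every value is +1 or -1 (mean 0, variance 1)
dense = np.array([1.0, -1.0] * 50)

# Sparse signal: two large values, the rest zeros, scaled to variance 1
sparse = np.zeros(100)
sparse[0], sparse[1] = np.sqrt(50.0), -np.sqrt(50.0)

print(moments(dense))   # mean 0, variance 1, kurtosis 1
print(moments(sparse))  # mean 0, variance 1, kurtosis 50 (up to rounding)
```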


Quantitative Process and Results

Hughes et al. performed their research on a dataset of 308 high-resolution images of art pieces spanning a variety of artists. They used two image decomposition methods to extract features from images:

- Gabor filters, which are sensitive to lines and edges at specific orientations and spatial frequencies

- Sparse coding model, which learns a set of basis functions associated with higher-order statistical characteristics of an image
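For readers unfamiliar with Gabor filters, the sketch below builds one as a cosine carrier under a Gaussian envelope and correlates it with a grayscale image. It is a generic textbook formulation, not Hughes et al.’s filter bank; the function names, parameter names, and values are assumptions, and the sparse coding model is not shown.

```python
import numpy as np


def gabor_kernel(size, wavelength, theta, sigma, gamma=1.0, psi=0.0):
    """Real-valued 2D Gabor kernel: a cosine carrier under a Gaussian envelope.

    size: odd kernel width/height in pixels
    wavelength: carrier wavelength in pixels (spatial frequency = 1 / wavelength)
    theta: direction along which the carrier oscillates, in radians
    sigma: standard deviation of the Gaussian envelope, in pixels
    gamma: spatial aspect ratio of the envelope
    psi: phase offset of the carrier
    """
    half = size // 2
    y, x = np.mgrid[-half:half + 1, -half:half + 1].astype(float)
    # Rotate coordinates so x_rot runs along the oscillation direction
    x_rot = x * np.cos(theta) + y * np.sin(theta)
    y_rot = -x * np.sin(theta) + y * np.cos(theta)
    envelope = np.exp(-(x_rot**2 + (gamma * y_rot) ** 2) / (2 * sigma**2))
    carrier = np.cos(2 * np.pi * x_rot / wavelength + psi)
    return envelope * carrier


def gabor_response(image, kernel):
    """Valid-mode 2D cross-correlation of a grayscale image with a kernel."""
    image = np.asarray(image, dtype=float)
    kh, kw = kernel.shape
    out_h, out_w = image.shape[0] - kh + 1, image.shape[1] - kw + 1
    out = np.empty((out_h, out_w))
    for i in range(out_h):
        for j in range(out_w):
            out[i, j] = np.sum(image[i:i + kh, j:j + kw] * kernel)
    return out
```

A bank of such kernels at several `theta` and `wavelength` values gives orientation- and frequency-specific responses. With `theta=0`, the kernel oscillates left to right and therefore responds most strongly to vertical stripes of matching wavelength.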

Having extracted the features, they then compared the images using the following four metrics:

- Peak orientation, which is the orientation at which the peak amplitude occurs in the 2D Fourier transform of each basis function learned by the sparse coding model

- Peak spatial frequency, which is the spatial frequency at which the peak amplitude occurs

- Orientation bandwidth, which measures how selective a basis function is for its preferred orientation

- Spatial frequency bandwidth, which measures how selective a basis function is for its preferred spatial frequency
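The first two metrics can be sketched with NumPy’s FFT: take the amplitude spectrum of a basis function and read off the frequency vector where it peaks. This is a minimal sketch, not the authors’ code; the function name is hypothetical, and the two bandwidth metrics (which need a half-maximum or similar criterion around the peak) are not shown.

```python
import numpy as np


def peak_orientation_and_frequency(basis):
    """Locate the peak of a 2D basis function's Fourier amplitude spectrum.

    Returns (orientation_deg, frequency): orientation is the direction of the
    peak frequency vector in degrees, folded into [0, 180) because the spectrum
    of a real-valued image is symmetric; frequency is in cycles per pixel.
    """
    basis = np.asarray(basis, dtype=float)
    # Subtract the mean so the zero-frequency (DC) term does not win the argmax
    amplitude = np.abs(np.fft.fftshift(np.fft.fft2(basis - basis.mean())))
    rows, cols = basis.shape
    fy = np.fft.fftshift(np.fft.fftfreq(rows))  # vertical frequency per row
    fx = np.fft.fftshift(np.fft.fftfreq(cols))  # horizontal frequency per column
    iy, ix = np.unravel_index(np.argmax(amplitude), amplitude.shape)
    u, v = fx[ix], fy[iy]
    orientation = np.degrees(np.arctan2(v, u)) % 180.0
    return float(orientation), float(np.hypot(u, v))
```

Under this convention, orientation describes the direction of intensity change: vertical stripes (varying left to right) give 0 degrees, and horizontal stripes give 90 degrees.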

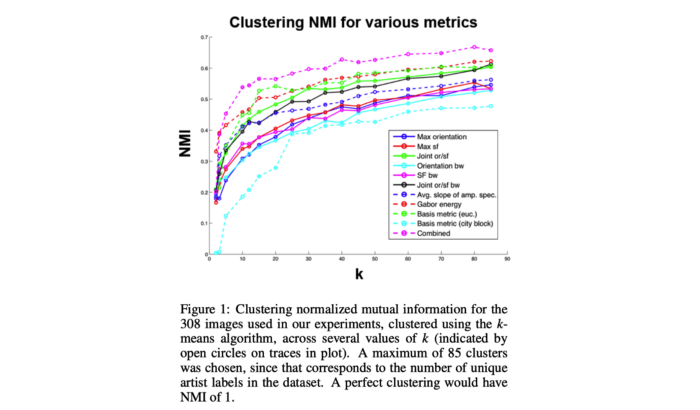

The researchers then explored different distance measures (e.g., KL divergence) to compare the distributions of the four metrics above and derive distance matrices. Because there is no ground truth for stylistic similarity, they used the true artist labels instead (e.g., all paintings by Picasso share the same label), and the distance matrices were constructed with respect to these labels. Performing k-means clustering with the different distance measures showed that these higher-order statistical representations were broadly successful for visual art images (see graph below).
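A minimal sketch of turning per-image feature distributions into a distance matrix with KL divergence. It assumes each image is summarized as a histogram of one metric (for instance, the peak orientations of its basis functions). Because KL divergence is asymmetric, the sketch averages both directions; that is one common choice, not necessarily the paper’s. The function names are hypothetical, and the k-means step is not shown.

```python
import numpy as np


def kl_divergence(p, q, eps=1e-12):
    """KL divergence D(p || q) between two histograms (normalized internally).

    A small eps keeps empty bins from producing log(0) or division by zero.
    """
    p = np.asarray(p, dtype=float) + eps
    q = np.asarray(q, dtype=float) + eps
    p = p / p.sum()
    q = q / q.sum()
    return float(np.sum(p * np.log(p / q)))


def symmetric_kl(p, q):
    """Average of both KL directions, giving a symmetric distance."""
    return 0.5 * (kl_divergence(p, q) + kl_divergence(q, p))


def distance_matrix(histograms, distance=symmetric_kl):
    """Pairwise distances between per-image feature histograms."""
    n = len(histograms)
    d = np.zeros((n, n))
    for i in range(n):
        for j in range(i + 1, n):
            d[i, j] = d[j, i] = distance(histograms[i], histograms[j])
    return d
```

Each histogram could be built with, say, `np.histogram(orientations, bins=8, range=(0, 180))[0]`, where `orientations` is a hypothetical array of one image’s peak orientations.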

Psychophysical Perceptual Similarity Experiments

In addition to developing a method to quantitatively characterize the style of visual art pieces, the researchers conducted two psychophysical experiments to take advantage of human perceptual information. They asked participants to judge the similarity between pairs of art images within three categories (abstract art, landscapes, and portraits), aggregating their answers into a similarity matrix for each category.

Experiment 1 evaluated how well perceptual judgments could predict the stylistic relationships between art pieces. The researchers held out two images per category, then trained a regression model that uses feature-based distances to predict the perceived distance between two images. With the learned models, they predicted the distances between the held-out images and the training images, and finally compared these predictions with the true perceptual distances.
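The regression step can be sketched as ordinary least squares: each input row holds the feature-based distances for one image pair (one column per metric), and the target is that pair’s perceptual distance. The linear form, the intercept, and the function names are assumptions for illustration; the post does not specify which regression model Hughes et al. used.

```python
import numpy as np


def fit_distance_regression(feature_distances, perceptual_distances):
    """Fit weights so that intercept + feature_distances @ w approximates perceived distance.

    feature_distances: (n_pairs, n_metrics) array, one row per training image pair
    perceptual_distances: (n_pairs,) array of distances derived from human judgments
    Returns an array [intercept, w_1, ..., w_n_metrics].
    """
    x = np.asarray(feature_distances, dtype=float)
    y = np.asarray(perceptual_distances, dtype=float)
    design = np.column_stack([np.ones(len(x)), x])  # prepend an intercept column
    weights, *_ = np.linalg.lstsq(design, y, rcond=None)
    return weights


def predict_distance(weights, feature_distances):
    """Predict perceived distance for new pairs, e.g. held-out vs. training images."""
    x = np.atleast_2d(np.asarray(feature_distances, dtype=float))
    return weights[0] + x @ weights[1:]
```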

The researchers found that perceptual information from the abstract and landscape artworks enabled statistically significant prediction. This indicates that perceptual similarity data not only contain useful statistical information but can also be used to model the differences across visual art pieces.
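The post does not say which significance test was used. One standard way to check whether predictions beat chance, sketched here as an assumption, is a permutation test: correlate predicted with observed perceptual distances, then count how often randomly shuffled observations do at least as well. The function name is hypothetical.

```python
import numpy as np


def permutation_test_correlation(predicted, observed, n_permutations=10000, seed=0):
    """One-sided permutation test for a positive Pearson correlation.

    Returns (r_observed, p_value). The p-value counts the observed ordering
    itself, so it is never exactly zero.
    """
    predicted = np.asarray(predicted, dtype=float)
    observed = np.asarray(observed, dtype=float)
    r_obs = np.corrcoef(predicted, observed)[0, 1]
    rng = np.random.default_rng(seed)
    count = 0
    for _ in range(n_permutations):
        # Shuffling breaks any real pairing between prediction and observation
        r = np.corrcoef(predicted, rng.permutation(observed))[0, 1]
        if r >= r_obs:
            count += 1
    return float(r_obs), (count + 1) / (n_permutations + 1)
```

Distances that share an image are not independent, so a more careful variant permutes image labels (rows and columns of a distance matrix together), as in a Mantel test, rather than shuffling individual distances.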

Experiment 2 measured the extent to which limited perceptual information from the three categories could predict stylistic distinctions and relationships in larger sets of images, which is directly relevant to similarity-based image search. The process was similar to Experiment 1, except that this time they held out 51 images across the three categories and used the remaining images to create a perceptual distance matrix. The resulting predicted distance matrix suggested that even limited perceptual information is helpful in “guiding the ways in which we combine statistical features to understand style perception.”
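At the scale of a whole image set, a predicted distance matrix can be assembled by applying learned weights (intercept first) to per-metric distance matrices, then compared with a perceptual distance matrix. This is a minimal sketch with hypothetical function names, assuming a linear combination of metrics; it is not the authors’ procedure.

```python
import numpy as np


def predicted_distance_matrix(feature_distance_matrices, weights):
    """Combine per-metric n x n distance matrices with weights [intercept, w_1, ..., w_k]."""
    weights = np.asarray(weights, dtype=float)
    stacked = np.stack([np.asarray(m, dtype=float) for m in feature_distance_matrices])  # (k, n, n)
    predicted = weights[0] + np.tensordot(weights[1:], stacked, axes=1)
    np.fill_diagonal(predicted, 0.0)  # an image is at zero distance from itself
    return predicted


def matrix_agreement(a, b):
    """Pearson correlation between the upper triangles of two distance matrices."""
    a = np.asarray(a, dtype=float)
    b = np.asarray(b, dtype=float)
    iu = np.triu_indices_from(a, k=1)  # skip the diagonal and duplicate entries
    return float(np.corrcoef(a[iu], b[iu])[0, 1])
```

Only the upper triangle is compared because distance matrices are symmetric with a zero diagonal, so the remaining entries would double-count pairs or add trivial matches.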

Final Thoughts

In summary, Hughes et al.’s paper, “Comparing Higher-Order Spatial Statistics and Perceptual Judgements in the Stylometric Analysis of Art,” showed the importance of combining human perceptual information with higher-order statistical information when evaluating the similarity of visual art.

More psychological research is still needed on how artistic style is perceived, defined, and evaluated with respect to similarity. As the authors note, “[a]t present … there are but a handful of quantitative studies of the factors that govern human style perception.”

In the broader context of computer vision, it is also interesting to compare the need for higher-order statistical representations of artistic style with the need for deeper convolutional layers in CNNs.

All in all, grounding judgments in human perception while making full use of the available quantitative information is key to developing a better similarity-based image search system for visual artwork.

References

[1] Geirhos, R., Rubisch, P., Michaelis, C., Bethge, M., Wichmann, F. A., and Brendel, W. “ImageNet-trained CNNs are biased towards texture; increasing shape bias improves accuracy and robustness.” ICLR 2019. arXiv preprint: https://arxiv.org/abs/1811.12231.

[2] Hughes, J. M., Graham, D. J., Jacobsen, C. R., and Rockmore, D. N. “Comparing higher-order spatial statistics and perceptual judgements in the stylometric analysis of art.” 2011 19th European Signal Processing Conference. https://ieeexplore.ieee.org/abstract/document/7073967.

Catherine Yeo is an undergraduate at Harvard studying Computer Science. You can find her on Twitter @catherinehyeo.

This article was inspired by Harvard’s PSYCH 1406, “Biological and Artificial Visual Systems: How Humans and Machines Represent the Visual World.” Thank you to Professor George Alvarez for his feedback and guidance.

This article was originally published on Towards Data Science and re-published to TOPBOTS with permission from the author.


The post Similarity-Based Image Search for Visual Art appeared first on TOPBOTS.
