A tiny ball of brain cells hums with activity as it sits atop an array of electrodes. For two days, it receives a pattern of electrical zaps, each stimulation encoding the speech peculiarities of eight people. By day three, it can discriminate between speakers.

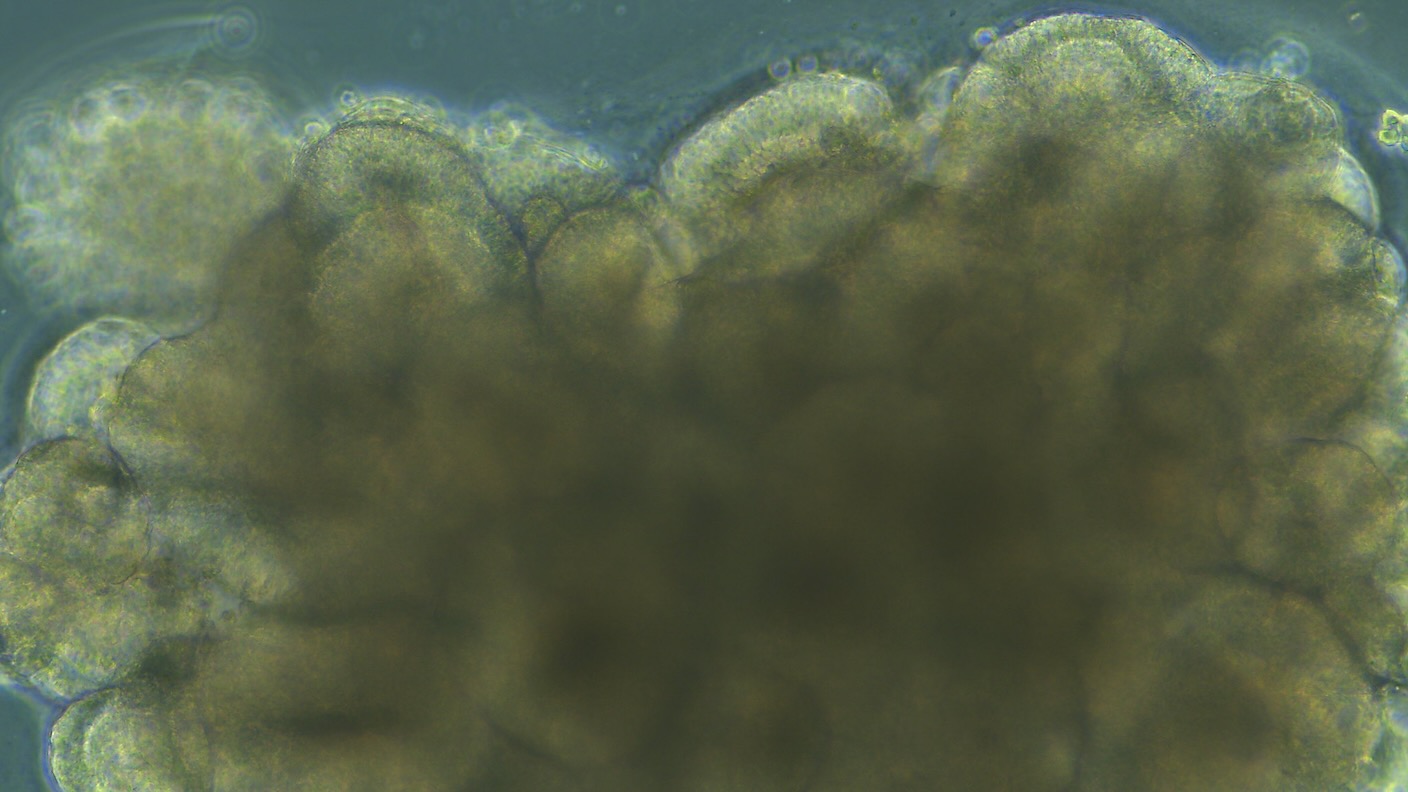

Dubbed Brainoware, the system raises the bar for biocomputing by tapping into 3D brain organoids, or “mini-brains.” These models, usually grown from human stem cells, rapidly expand into a variety of neurons knitted into neural networks.

Like their biological counterparts, the blobs spark with electrical activity—suggesting they have the potential to learn, store, and process information. Scientists have long eyed them as a promising hardware component for brain-inspired computing.

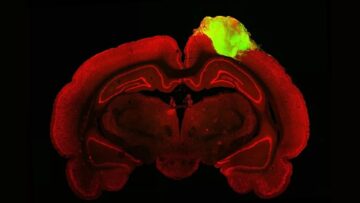

This week, a team at Indiana University Bloomington turned theory into reality with Brainoware. They connected a brain organoid resembling the cortex—the outermost layer of the brain that supports higher cognitive functions—to a wafer-like chip densely packed with electrodes.

The mini-brain functioned as both the central processing unit and the memory storage of a computer. It received input in the form of electrical zaps and output its calculations as neural activity, which an AI tool then decoded.
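In this setup the tissue transforms the inputs and a separate, trained decoder reads out the answer. That division of labor resembles what machine-learning researchers call reservoir computing, where a fixed nonlinear system maps inputs into a rich activity space and only a simple readout is trained. The article does not use that term, so treat the framing as an assumption. In the toy sketch below, a random tanh layer (the hypothetical `make_reservoir` and `respond`) stands in for the organoid, and a nearest-centroid decoder stands in for the AI tool. Neither reflects the study's actual models, and unlike this fixed reservoir, a living organoid also rewires itself.

```python
import math
import random


def make_reservoir(n_inputs, n_units, seed=0):
    """Fixed random input weights. In textbook reservoir computing these are never trained."""
    rng = random.Random(seed)
    return [[rng.uniform(-1.0, 1.0) for _ in range(n_inputs)] for _ in range(n_units)]


def respond(reservoir, stimulus):
    """Hypothetical stand-in for recorded activity: one tanh unit per 'channel'."""
    return [math.tanh(sum(w * s for w, s in zip(row, stimulus))) for row in reservoir]


def train_readout(states, labels):
    """Train only the decoder: the average activity vector (centroid) for each label."""
    sums, counts = {}, {}
    for state, label in zip(states, labels):
        acc = sums.setdefault(label, [0.0] * len(state))
        for i, value in enumerate(state):
            acc[i] += value
        counts[label] = counts.get(label, 0) + 1
    return {label: [v / counts[label] for v in acc] for label, acc in sums.items()}


def decode(readout, state):
    """Return the label whose centroid is closest in squared Euclidean distance."""
    return min(
        readout,
        key=lambda label: sum((a - b) ** 2 for a, b in zip(readout[label], state)),
    )


if __name__ == "__main__":
    rng = random.Random(1)
    # Two hypothetical input patterns, each presented 10 times with small noise.
    prototypes = {"A": [1, 0, 0, 0], "B": [0, 0, 0, 1]}
    reservoir = make_reservoir(n_inputs=4, n_units=20)
    states, labels = [], []
    for label, proto in prototypes.items():
        for _ in range(10):
            noisy = [x + rng.gauss(0, 0.05) for x in proto]
            states.append(respond(reservoir, noisy))
            labels.append(label)
    readout = train_readout(states, labels)
    print(decode(readout, respond(reservoir, [1, 0, 0, 0])))
    print(decode(readout, respond(reservoir, [0, 0, 0, 1])))
```

Only `train_readout` learns anything here. A linear classifier would be closer to common practice, but the centroid decoder keeps the sketch free of third-party dependencies.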

When trained on soundbites from a pool of people—transformed into electrical zaps—Brainoware eventually learned to pick out the “sounds” of specific people. In another test, the system successfully tackled a complex math problem that’s challenging for AI.

The system’s ability to learn stemmed from changes to neural network connections in the mini-brain—which is similar to how our brains learn every day. Although just a first step, Brainoware paves the way for increasingly sophisticated hybrid biocomputers that could lower energy costs and speed up computation.

The setup also allows neuroscientists to further unravel the inner workings of our brains.

“While computer scientists are trying to build brain-like silicon computers, neuroscientists are trying to understand the computations of brain cell cultures,” wrote Drs. Lena Smirnova, Brian Caffo, and Erik C. Johnson at Johns Hopkins University, who were not involved in the study. Brainoware could offer new insights into how we learn and how the brain develops, and could even help test new therapeutics for when the brain falters.

A Twist on Neuromorphic Computing

With roughly 86 billion neurons networked into hundreds of trillions of connections, the human brain is perhaps the most powerful computing hardware known.

Its setup is inherently different from that of classical computers, which have separate units for data processing and storage. Each task requires the computer to shuttle data between the two, which dramatically increases computing time and energy use. In the brain, by contrast, both functions unite at the same physical spot.

Called synapses, these structures connect neurons into networks. Synapses learn by changing how strongly they connect with other neurons, strengthening their links to collaborators that help solve problems and storing that knowledge at the same spot.
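A textbook way to model connection strengths that change with use is the Hebbian rule, in which a synapse strengthens when the neurons on both sides are active together. The sketch below adds a small passive decay so unused connections fade. It is a generic illustration with hypothetical parameter values (`lr`, `decay`), not the plasticity mechanism measured in the study. Note that the weight matrix is both what the network computes with and what gets updated, echoing the point that storage and processing share the same spot.

```python
def hebbian_step(weights, pre, post, lr=0.1, decay=0.01):
    """One update of a simple Hebbian rule with passive decay.

    weights[i][j] is the strength of the connection from input neuron j
    to output neuron i. Co-active pairs (pre_j and post_i both nonzero)
    strengthen; every weight also shrinks slightly, so unused links fade.
    """
    return [
        [w + lr * post_i * pre_j - decay * w for w, pre_j in zip(row, pre)]
        for row, post_i in zip(weights, post)
    ]


if __name__ == "__main__":
    weights = [[0.5, 0.5]]  # one output neuron, two inputs
    for _ in range(20):
        # Input 0 fires together with the output neuron; input 1 stays silent.
        weights = hebbian_step(weights, pre=[1.0, 0.0], post=[1.0])
    print(weights)  # connection 0 has grown, connection 1 has decayed
```

Without the decay term, plain Hebbian growth is unbounded. With it, a connection whose two neurons keep firing together settles at `lr / decay` (10 with these values).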

The process may sound familiar. Artificial neural networks, an AI approach that’s taken the world by storm, are loosely based on these principles. But the energy needed is vastly different. The brain runs on 20 watts, roughly the power needed to run a small desktop fan. A comparable artificial neural network consumes eight million watts. The brain can also easily learn from a few examples, whereas AI notoriously relies on massive datasets.
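Taking the two power figures at face value, the gap works out to a factor of 400,000:

$$
\frac{8{,}000{,}000\ \text{W}}{20\ \text{W}} = 400{,}000
$$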

Scientists have tried to recapitulate the brain’s processing properties in hardware chips. Built from exotic components that change properties with temperature or electricity, these neuromorphic chips combine processing and storage within the same location. These chips can power computer vision and recognize speech. But they’re difficult to manufacture and only partially capture the brain’s inner workings.

Instead of mimicking the brain with computer chips, why not just use its own biological components?

A Brainy Computer

Rest assured, the team didn’t hook living brains to electrodes. Instead, they turned to brain organoids. In just two months, the mini-brains, made from human stem cells, developed into a range of neuron types that connected with each other in electrically active networks.

The team carefully dropped each mini-brain onto a stamp-like chip jam-packed with tiny electrodes. The chip can record the brain cells’ signals from over 1,000 channels and zap the organoids using nearly three dozen electrodes at the same time. This makes it possible to precisely control stimulation and record the mini-brain’s activity. An AI tool then translates the abstract neural outputs into human-friendly responses on a normal computer.
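The article does not say how the recordings were turned into inputs for the AI tool. One common approach in multi-electrode work is to count spikes per channel in short time bins, giving the decoder a fixed-size grid of numbers. The sketch below assumes spikes arrive as hypothetical `(channel, time_ms)` pairs; that is not the export format of any particular recording system, and the function name `binned_counts` is illustrative.

```python
def binned_counts(spikes, n_channels, bin_width_ms, n_bins):
    """Return an n_bins x n_channels matrix of spike counts.

    spikes: iterable of (channel_index, time_ms) pairs (hypothetical format).
    Integer milliseconds avoid floating-point surprises at bin edges.
    Events outside the channel range or the time window are ignored.
    """
    matrix = [[0] * n_channels for _ in range(n_bins)]
    for channel, time_ms in spikes:
        b = time_ms // bin_width_ms
        if 0 <= b < n_bins and 0 <= channel < n_channels:
            matrix[b][channel] += 1
    return matrix


if __name__ == "__main__":
    spikes = [(0, 10), (0, 20), (2, 15), (1, 300), (2, 50)]
    for row in binned_counts(spikes, n_channels=4, bin_width_ms=100, n_bins=4):
        print(row)
```

Flattening the matrix row by row gives one feature vector per stimulation trial, which a decoder can then be trained on.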

In a speech recognition test, the team recorded 240 audio clips of eight people speaking, each clip capturing an isolated vowel. They transformed the dataset into unique patterns of electrical stimulation and fed these into a newly grown mini-brain. In just two days, the Brainoware system was able to discriminate between different speakers with nearly 80 percent accuracy.
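To put that figure in perspective: a decoder guessing uniformly at random among eight speakers would be right one time in eight. Assuming the 240 clips were split evenly across speakers (the article does not say), the arithmetic is:

$$
\frac{240\ \text{clips}}{8\ \text{speakers}} = 30\ \text{clips per speaker},\qquad
P(\text{correct by chance}) = \frac{1}{8} = 12.5\%
$$

Nearly 80 percent accuracy is therefore roughly six times the chance level.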

Using a popular neuroscience measure, the team found the electrical zaps “trained” the mini-brain to strengthen some networks while pruning others, suggesting it rewired its networks to facilitate learning.

In another test, Brainoware was pitted against AI on a challenging math task that could help generate stronger passwords. Although slightly less accurate than an AI with short-term memory, Brainoware was much faster. Without human supervision, it reached nearly comparable results in less than 10 percent of the time the AI took.

“This is a first demonstration of using brain organoids [for computing],” study author Dr. Feng Guo told MIT Technology Review.

Cyborg Computers?

The new study is the latest to explore hybrid biocomputers—a mix of neurons, AI, and electronics.

Back in 2020, a team merged artificial and biological neurons in a network that communicated using the brain chemical dopamine. More recently, nearly a million neurons, lying flat in a dish, learned to play the video game Pong from electrical zaps.

Brainoware is a potential step up. Compared to isolated neurons, organoids better mimic the human brain and its sophisticated neural networks. But they’re not without faults. Similar to deep learning algorithms, the mini-brains’ internal processes are unclear, making it difficult to decode the “black box” of how they compute—and how long they retain memories.

Then there’s the “wetlab” problem. Unlike a computer processor, mini-brains can only tolerate a narrow range of temperature and oxygen levels, and they are constantly at risk of infection by disease-causing microbes. This means they have to be carefully grown inside a nutrient broth using specialized equipment. The energy required to maintain these cultures may offset gains from the hybrid computing system.

However, mini-brains are becoming easier to culture with smaller and more efficient systems, including those with recording and zapping functions built in. The harder question isn’t technical; rather, it’s about what’s acceptable when using human brain tissue as a computing element. AI and neuroscience are rapidly pushing boundaries, and brain-AI models will likely become even more sophisticated.

“It is critical for the community to examine the myriad of neuroethical issues that surround biocomputing systems incorporating human neural tissues,” wrote Smirnova, Caffo, and Johnson.

Image Credit: A developing brain organoid / National Institute of Allergy and Infectious Diseases, NIH

- Source: https://singularityhub.com/2023/12/14/a-ball-of-brain-cells-on-a-chip-can-learn-simple-speech-recognition-and-math/
