Yesterday at TechCrunch Disrupt, Harrison Chase, founder of LangChain, Ashe Magalhaes, founder of Hearth, Henry Scott-Green, founder of Context.ai, & I discussed the future of building LLM-enabled applications.

We assembled the panel as a three-layer cake : Hearth, the application ; LangChain, the infrastructure ; Context.ai, the product analytics. Here are my takeaways from the conversation.

First, it’s very early in LLM application development in every sense of the word. Few applications are managing significant user volumes. Many remain in testing & are working to develop quality scores for LLM performance before launch. The state of the art is using “vibes” ; how much better did the model feel?

Fine-tuning may top the conversations in social media, but not many have launched fine-tuned models into production.

Second, the stack is maturing at the same time. The core components include technologies to provide context to a model (retrieving relevant information), the models themselves, & then the monitoring (LangChain’s LangSmith)/analytics (Context.ai) infrastructure to investigate performance.
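
To make those three components concrete, here is a minimal sketch, not how LangChain, LangSmith, or Context.ai actually work. The names `retrieve`, `answer`, & `log` are hypothetical, retrieval is a toy word-overlap match, & the model is any function that maps a prompt to text.

```python
import time
from typing import Callable


def retrieve(query: str, documents: list[str]) -> list[str]:
    """Toy retrieval step: keep documents that share at least one word with the query."""
    words = set(query.lower().split())
    return [d for d in documents if words & set(d.lower().split())]


def answer(
    query: str,
    documents: list[str],
    model: Callable[[str], str],  # assumption: any prompt -> text function
    log: list[dict],              # assumption: an in-memory stand-in for monitoring/analytics
) -> str:
    # 1. Context: attach the relevant documents to the query.
    context = retrieve(query, documents)
    prompt = "\n".join(context + [query])

    # 2. Model: call whatever model was passed in.
    start = time.perf_counter()
    output = model(prompt)
    latency = time.perf_counter() - start

    # 3. Monitoring: record enough to investigate performance later.
    log.append(
        {
            "query": query,
            "context_docs": len(context),
            "latency_s": latency,
            "output": output,
        }
    )
    return output
```

Passing a stub such as `lambda prompt: prompt.upper()` as `model` is enough to exercise the flow without calling a real LLM.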

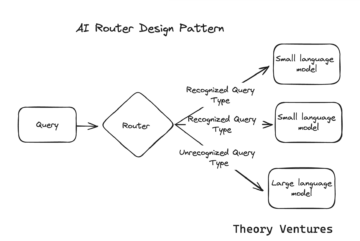

It’s possible that the world will move to “constellations of models.” A user issues a query & the system adds the right context. Then a router sends it to the best model for the task (either a large language model or a bespoke small model). But, today, most traffic is going to the most accurate LLMs. There’s not much specialization in models just yet.
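
The routing step could look something like the sketch below. It is an illustration under assumptions rather than any panelist's design : the `Model` type, the `handles` predicate, & the model names are all hypothetical, & a production router would likely use a classifier instead of keyword rules.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Model:
    name: str
    # Hypothetical predicate: does this model claim the query as its specialty?
    handles: Callable[[str], bool]


def route(query: str, specialists: list[Model], fallback: Model) -> Model:
    """Return the first specialist that claims the query, else the general LLM."""
    for model in specialists:
        if model.handles(query):
            return model
    return fallback


# Assumed example constellation: one bespoke small model plus a general LLM.
sql_model = Model("small-sql-model", lambda q: "sql" in q.lower())
general_llm = Model("general-llm", lambda q: True)

chosen = route("Write SQL for monthly revenue", [sql_model], general_llm)
print(chosen.name)  # small-sql-model
```

With no specialists registered, every query falls through to the general model, which matches where most traffic goes today.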

Third, user intent data (following a user to understand their goal in a product) is essential to building great LLM applications. Part of this data set will be derived from product use ; some of it will be imputed using analysis ; & human labeling may play a role.

Detecting when a model acts unexpectedly or inappropriately is critical, but it is hard to do & even harder to do in real time. Still, this will be an important part of the stack.

Last, agentic applications, those which act on behalf of users, have the potential to transform work. There are new product design challenges to solve including how to fashion these products to work for an individual & a team. Most of the LLM products today are single-player.

Thanks to Ashe, Harrison, & Henry for joining me on the panel.

- Source: https://www.tomtunguz.com/tc_disrupt/
