Image by Author

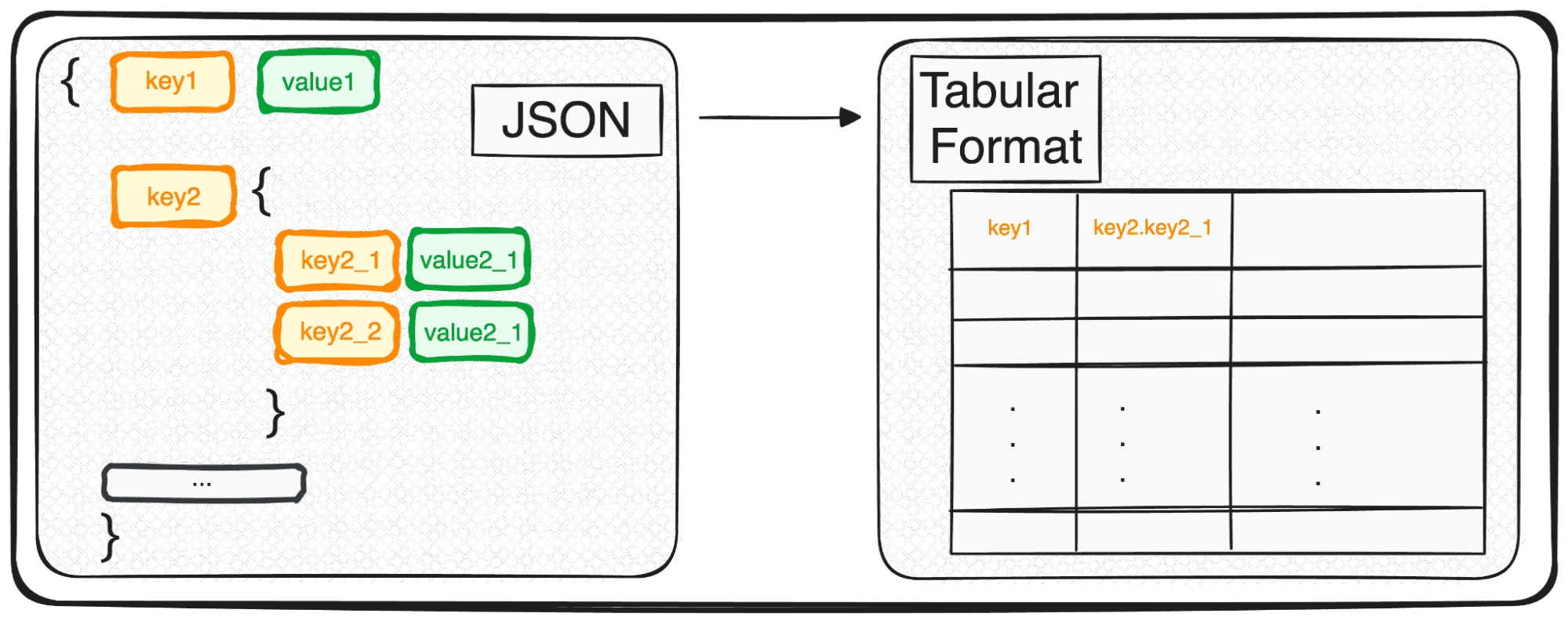

Diving into the world of data science and machine learning, one of the fundamental skills you’ll encounter is the art of reading data. If you have already some experience with it, you’re probably familiar with JSON (JavaScript Object Notation) – a popular format for both storing and exchanging data.

Think of how NoSQL databases like MongoDB love to store data in JSON, or how REST APIs often respond in the same format.

However, JSON, while perfect for storage and exchange, isn’t quite ready for in-depth analysis in its raw form. This is where we transform it into something more analytically friendly – a tabular format.

So, whether you’re dealing with a single JSON object or a delightful array of them, in Python’s terms, you’re essentially handling a dict or a list of dicts.

Let’s explore together how this transformation unfolds, making our data ripe for analysis ????

Today I’ll be explaining a magic command that allows us to easily parse any JSON into a tabular format in seconds.

And it is… pd.json_normalize()

So let’s see how it works with different types of JSONs.

The first type of JSON that we can work with is single-leveled JSONs with a few keys and values. We define our first simple JSONs as follows:

Code by Author

So let’s simulate the need to work with these JSON. We all know there’s not much to do in their JSON format. We need to transform these JSONs into some readable and modifiable format… which means Pandas DataFrames!

1.1 Dealing with simple JSON structures

First, we need to import the pandas library and then we can use the command pd.json_normalize(), as follows:

import pandas as pd

pd.json_normalize(json_string)

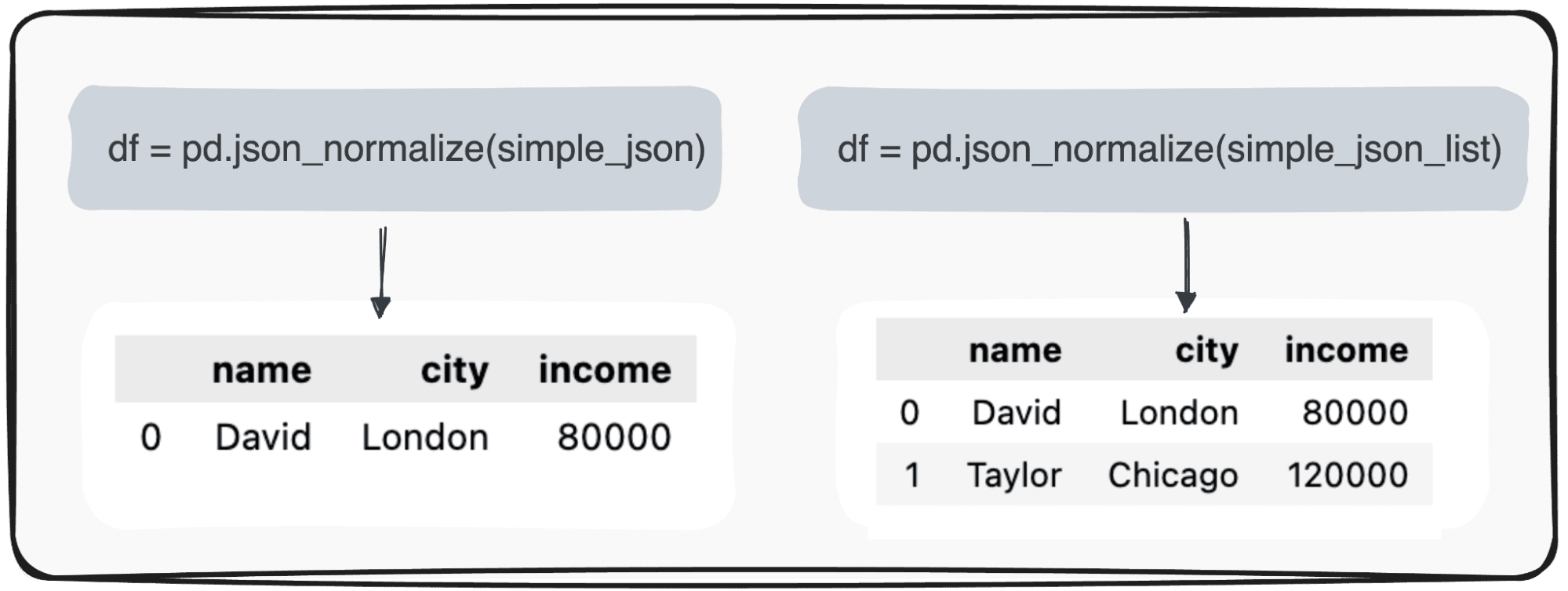

By applying this command to a JSON with a single record, we obtain the most basic table. However, when our data is a little bit more complex and presents a list of JSONs, we can still use the same command with no further complications and the output will correspond to a table with multiple records.

Image by Author

Easy… right?

The next natural question is what happens when some of the values are missing.

1.2 Dealing with null values

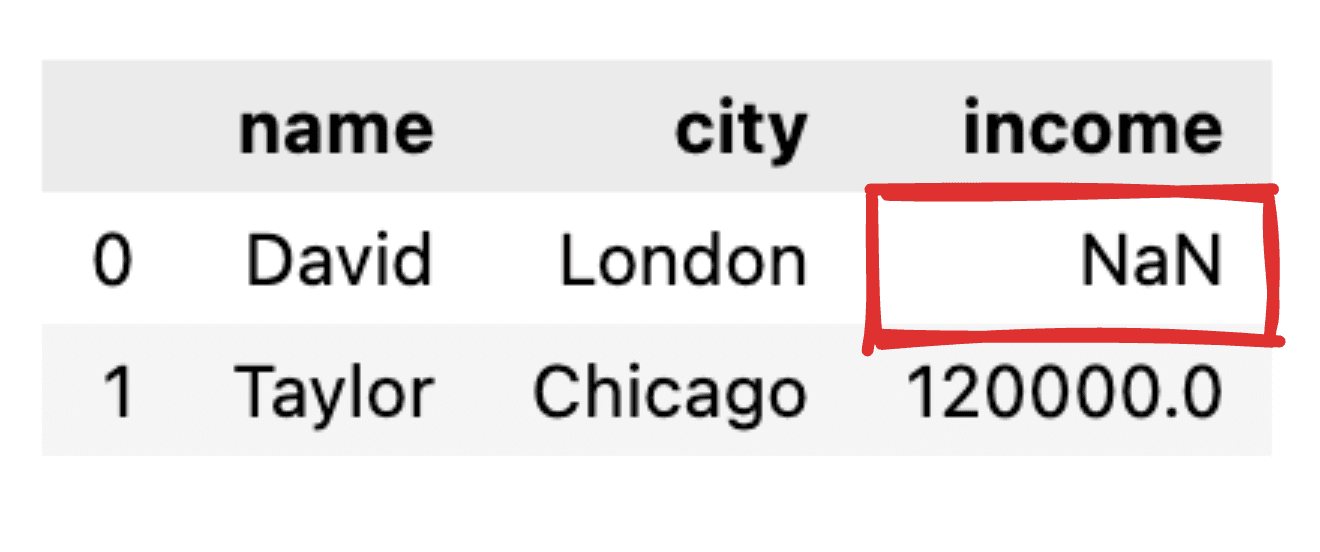

Imagine some of the values are not informed, like for instance, the Income record for David is missing. When transforming our JSON into a simple pandas dataframe, the corresponding value will appear as NaN.

Image by Author

And what about if I only want to get some of the fields?

1.3 Selecting only those columns of interest

In case we just want to transform some specific fields into a tabular pandas DataFrame, the json_normalize() command does not allow us to choose what fields to transform.

Therefore, a small preprocessing of the JSON should be performed where we filter just those columns of interest.

# Fields to include

fields = ['name', 'city'] # Filter the JSON data

filtered_json_list = [{key: value for key, value in item.items() if key in fields} for item in simple_json_list] pd.json_normalize(filtered_json_list)

So, let’s move to some more advanced JSON structure.

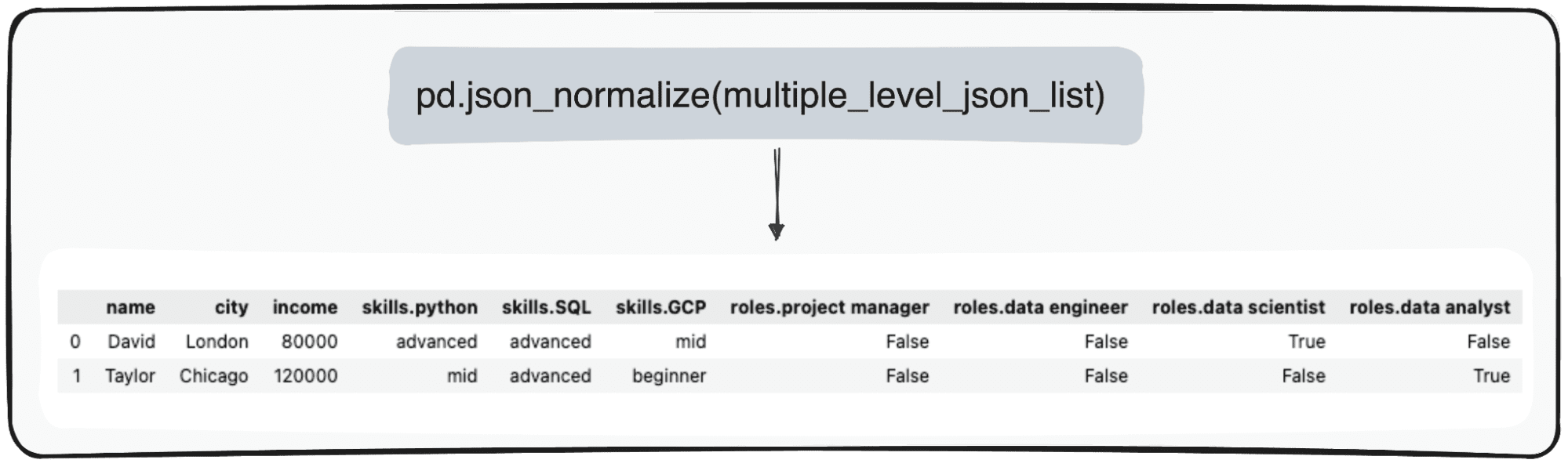

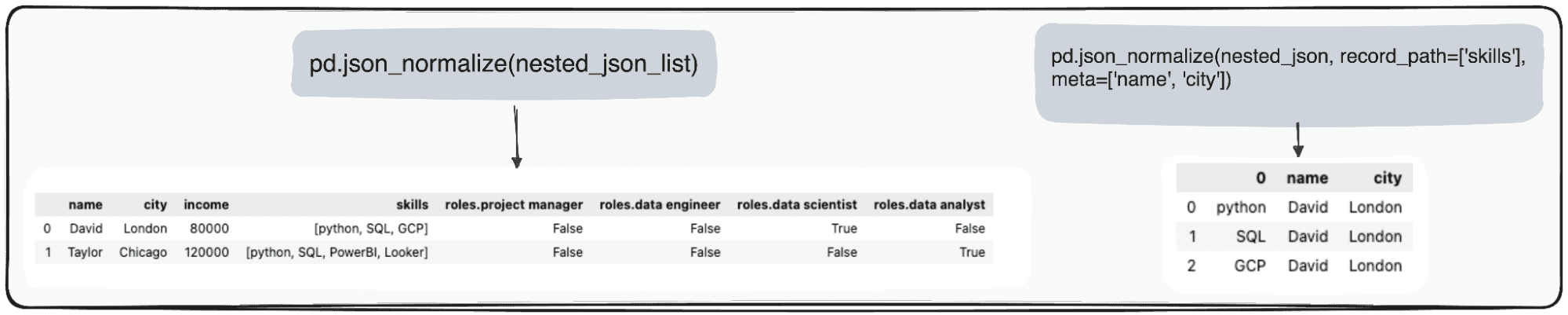

When dealing with multiple-leveled JSONs we find ourselves with nested JSONs within different levels. The procedure is the same as before, but in this case, we can choose how many levels we want to transform. By default, the command will always expand all levels and generate new columns containing the concatenated name of all the nested levels.

So if we normalize the following JSONs.

Code by Author

We would get the following table with 3 columns under the field skills:

- skills.python

- skills.SQL

- skills.GCP

and 4 columns under the field roles

- roles.project manager

- roles.data engineer

- roles.data scientist

- roles.data analyst

Image by Author

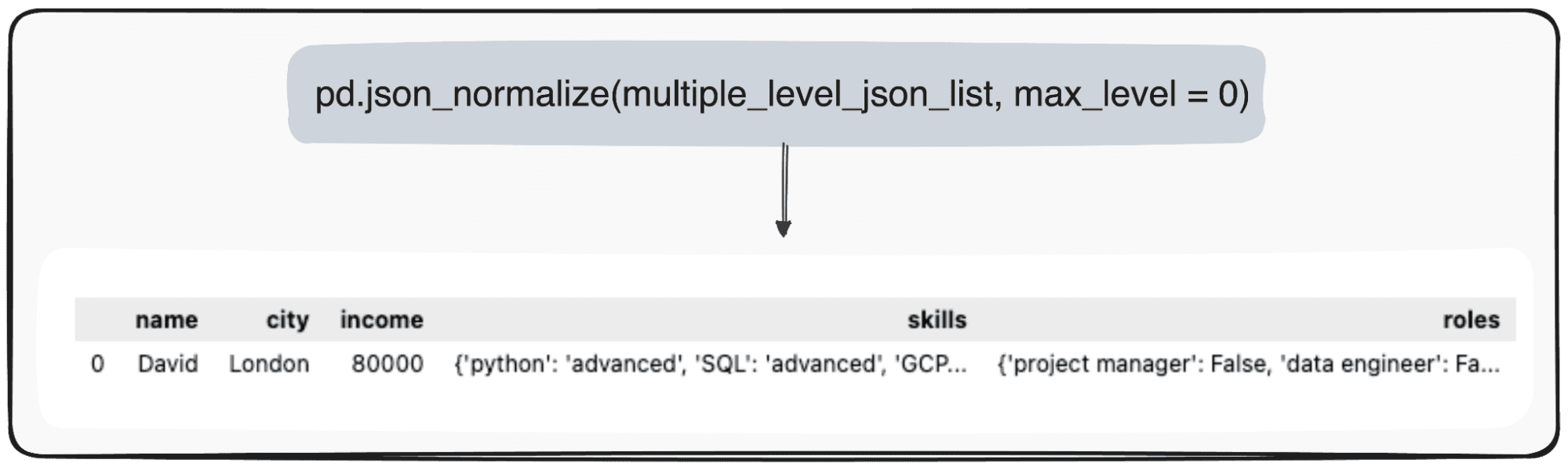

However, imagine we just want to transform our top level. We can do so by specifically defining the parameter max_level to 0 (the max_level we want to expand).

pd.json_normalize(mutliple_level_json_list, max_level = 0)

The pending values will be maintained within JSONs within our pandas DataFrame.

Image by Author

The last case we can find is having a nested List within a JSON field. So we first define our JSONs to use.

Code by Author

We can effectively manage this data using Pandas in Python. The pd.json_normalize() function is particularly useful in this context. It can flatten the JSON data, including the nested list, into a structured format suitable for analysis. When this function is applied to our JSON data, it produces a normalized table that incorporates the nested list as part of its fields.

Moreover, Pandas offers the capability to further refine this process. By utilizing the record_path parameter in pd.json_normalize(), we can direct the function to specifically normalize the nested list.

This action results in a dedicated table exclusively for the list’s contents. By default, this process will only unfold the elements within the list. However, to enrich this table with additional context, such as retaining an associated ID for each record, we can use the meta parameter.

Image by Author

In summary, the transformation of JSON data into CSV files using Python’s Pandas library is easy and effective.

JSON is still the most common format in modern data storage and exchange, notably in NoSQL databases and REST APIs. However, it presents some important analytic challenges when dealing with data in its raw format.

The pivotal role of Pandas’ pd.json_normalize() emerges as a great way to handle such formats and convert our data into pandas DataFrame.

I hope this guide was useful, and next time you are dealing with JSON, you can do it in a more effective way.

You can go check the corresponding Jupyter Notebook in the following GitHub repo.

Josep Ferrer is an analytics engineer from Barcelona. He graduated in physics engineering and is currently working in the Data Science field applied to human mobility. He is a part-time content creator focused on data science and technology. You can contact him on LinkedIn, Twitter or Medium.

- SEO Powered Content & PR Distribution. Get Amplified Today.

- PlatoData.Network Vertical Generative Ai. Empower Yourself. Access Here.

- PlatoAiStream. Web3 Intelligence. Knowledge Amplified. Access Here.

- PlatoESG. Carbon, CleanTech, Energy, Environment, Solar, Waste Management. Access Here.

- PlatoHealth. Biotech and Clinical Trials Intelligence. Access Here.

- Source: https://www.kdnuggets.com/converting-jsons-to-pandas-dataframes-parsing-them-the-right-way?utm_source=rss&utm_medium=rss&utm_campaign=converting-jsons-to-pandas-dataframes-parsing-them-the-right-way

- :is

- :not

- :where

- 1

- 1.3

- 11

- 2%

- 4

- 7

- 8

- a

- About

- Action

- Additional

- advanced

- All

- allow

- allows

- already

- always

- an

- analysis

- analyst

- Analytic

- analytics

- and

- any

- APIs

- appear

- applied

- Applying

- ARE

- Array

- Art

- AS

- associated

- barcelona

- basic

- BE

- before

- Bit

- both

- but

- by

- CAN

- capability

- case

- challenges

- check

- Choose

- City

- Columns

- Common

- complex

- complications

- contact

- content

- contents

- context

- convert

- converting

- correspond

- Corresponding

- creator

- Currently

- data

- data analyst

- data engineer

- data science

- data scientist

- data storage

- databases

- David

- dealing

- dedicated

- Default

- define

- defining

- delightful

- DICT

- different

- direct

- do

- does

- each

- easily

- easy

- Effective

- effectively

- elements

- emerges

- encounter

- engineer

- Engineering

- enrich

- essentially

- exchange

- exchanging

- exclusively

- Expand

- experience

- explaining

- explore

- familiar

- few

- field

- Fields

- Files

- filter

- Find

- First

- focused

- following

- follows

- For

- form

- format

- formats

- friendly

- from

- function

- fundamental

- further

- GCP

- generate

- get

- GitHub

- Go

- great

- guide

- handle

- Handling

- happens

- Have

- having

- he

- him

- hope

- How

- However

- HTTPS

- human

- i

- I’LL

- ID

- if

- imagine

- import

- important

- in

- in-depth

- include

- Including

- Income

- incorporates

- informed

- instance

- interest

- into

- isn

- IT

- ITS

- JavaScript

- json

- Jupyter Notebook

- just

- KDnuggets

- Key

- keys

- Know

- Last

- learning

- Level

- levels

- Library

- like

- List

- little

- ll

- love

- machine

- machine learning

- magic

- maintained

- Making

- manage

- manager

- many

- means

- Meta

- missing

- mobility

- Modern

- MongoDB

- more

- most

- move

- much

- multiple

- name

- Natural

- Need

- nested

- New

- next

- no

- notably

- notebook

- object

- obtain

- of

- Offers

- often

- on

- ONE

- only

- or

- our

- ourselves

- output

- pandas

- parameter

- part

- particularly

- pending

- perfect

- performed

- Physics

- pivotal

- plato

- Plato Data Intelligence

- PlatoData

- Popular

- presents

- probably

- procedure

- process

- produces

- project

- Python

- question

- quite

- Raw

- RE

- Reading

- ready

- record

- records

- refine

- Respond

- REST

- Results

- retaining

- right

- Role

- s

- same

- Science

- Science and Technology

- Scientist

- seconds

- see

- selecting

- should

- Simple

- simulate

- single

- skills

- small

- So

- some

- something

- specific

- specifically

- SQL

- Still

- storage

- store

- structure

- structured

- such

- suitable

- SUMMARY

- T

- table

- Technology

- terms

- that

- The

- the world

- their

- Them

- then

- These

- this

- those

- time

- to

- together

- top

- Transform

- Transformation

- transforming

- type

- types

- under

- us

- use

- useful

- using

- Utilizing

- value

- Values

- want

- was

- Way..

- we

- What

- when

- whether

- which

- while

- will

- with

- within

- Work

- working

- works

- world

- would

- you

- zephyrnet