Introduction

Retrieval Augmented Generation is the new technology now. RAG is replacing the traditional search-based approaches and creating a chat with a document environment. Since the inception of RAG, various methods have been proposed to enhance the standard RAG approach. The biggest hurdle in RAG is to retrieve the right document. Only when we get the right documents, the LLM will be able to generate the right answers. In this guide, we will be talking about HyDE(Hypothetical Document Embedding), an approach that was created to improve the Retrieval in RAG.

Learning Objectives

- Recognize RAG’s limitations and the need for better document retrieval.

- Understand HyDE’s role in improving retrieval accuracy.

- Learn to generate hypothetical documents for improved retrieval.

- Implement HyDE with LangChain for efficient retrieval.

- Evaluate HyDE’s effectiveness in reducing hallucinations.

This article was published as a part of the Data Science Blogathon.

Table of contents

Challenges Facing RAG Implementation

Retrieval Augmented Generation is very popular and right now is widely used. A simple RAG(Retrieval Augmented Generation) involves taking in raw text, chunking it into smaller pieces, creating embeddings for all the chunks, and storing the embeddings in a vector store. Then when a user provides a query, we compare the similarity between the user query and the chunks and retrieve the similar chunks. Finally, the user query along with similar chunks is sent to the Large Language Model to generate the final answer. This is the regular Retrieval Augmented Generation.

This regular and plain Retrieval Augmented Generation has many flaws. Starting with the chunking itself. There is no one size to chunking. The size of chunking documents largely depends on the type of Large Language Models we are working with and sometimes we have to try a bunch of sizes to get better results. Then comes the Retrieval, the main focus for this guide.

The RAG was developed to prevent the Large Language Models from hallucination. This largely depends on the similar information retrieved through the user query from the vector stores. If the Retrieval is not good, then the Large Language Model will either hallucinate or will not respond to the question provided by the user. One way to improve the Retrieval is Hypothetical Document Embeddings.

What is Hypothetical Document Embedding(HyDE) ?

Hypothetical Document Embeddings (HyDE) is one of the transformative solutions to tackle poor Retrievals faced in RAG-based solutions. As the name suggests, HyDE works by generating Hypothetical Documents, which will help in better retrieval of similar documents so that the Large Language Model can take these inputs and generate a better answer.

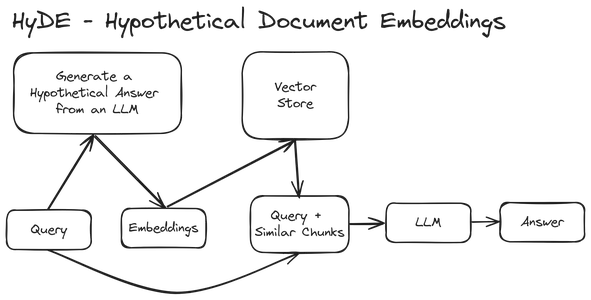

Let’s understand HyDE with the below diagram:

The first step involves taking in a user query. Now in a normal RAG system, we convert the user query into embeddings and send it to the vector store to retrieve similar chunks. But in Hypothetical Document Embeddings, we take in the user query and then pass it to a Large Language Model to generate a Hypothetical Answer to the question. So the LLM takes in the user question and tries to generate a fake Hypothetical Answer/Document with similar textual patterns from the first user query. We then convert this Hypothetical Document into embedding vectors and then use these embeddings to retrieve similar chunks from the vector store. Finally, we bind these similar chunks to the original query and pass on these together to LLM to generate the final answer.

So what we are trying to do here is, that instead of trying to perform a query to answer embedding vectors similarity, we are trying to perform an answer to answer embedding vectors similarity so that it yields better results.

Features of Hypothetical Document Embedding(HyDE)

- Enhanced Retrieval Accuracy: HyDE introduces a new approach where Hypothetical Answers/Documents are created based on the user queries, allowing for a more nuanced understanding of search intent beyond keywords. Thus encoding them to embedding vectors will really improve the retrieval systems in finding more semantically relevant chunks.

- Reduced Hallucinations: We have discussed that the RAG was introduced to mitigate LLM Hallucinations. These will be based on the retrieved context passed to the LLM, so giving them in wrong and not meaningful chunks to the LLM will result in hallucinations thus generating wrong answers. HyDE through its hypothetical documents will try to fetch the best relevant chunks thus reducing the chances of hallucinations.

HyDE in Practice – LangChain

In this section, we will be creating the Hypothetical Document Embeddings from scratch and see how well it retrieves the relevant content. Along with that, we will even look at an implementation in LangChain for the Hypothetical Document Embeddings.

We will start by downloading and installing the Python libraries:

pip install -q langchain langchain-google-genai sentence-transformers chromadbWe install the following libraries:

- langchain: LangChain provides an easy way to work with different LLMs and create applications with them. It allows us to easily switch between different LLM providers and different embedding models.

- langchain-google-genai: This module provides a wrapper around the Google-developed Large Language Models. Langchain allows us to easily integrate its Components with the Google LLMs like the Gemini with this library. The library even contains the wrapper for Google’s Embedding model.

- sentence-transformers: This library provides support for different types of embedding models. All these embedding models are available in the HuggingFace Hub and are open source. This library is necessary so that, we can work with the open-source embedding models from LangChain or even from LlamaIndex.

- chromadb: This library provides support for storing embedding vectors. The chromadb acts like a vector store, which stores the embedding vectors of both the documents that we are fetching and even the user queries. It is necessary for performing a similarity search so that we can retrieve similar documents for the given user query.

Implementation of HyDE

Let us implement HyDE by following certain steps:

Step1: Loading the LLM and the Embedding Models.

Let us start by loading the LLM and the embedding models. For this, we will work with the below code:

# --- Setting API KEY ---

import os

os.environ['GOOGLE_API_KEY']='YOUR GOOGLE API KEY'

# --- Model Loading ---

# Import the necessary modules from the langchain_google_genai package.

from langchain_google_genai import GoogleGenerativeAIEmbeddings

from langchain_google_genai import ChatGoogleGenerativeAI

# Create a ChatGoogleGenerativeAI Object and convert system messages to human-readable format.

llm = ChatGoogleGenerativeAI(model="gemini-pro", convert_system_message_to_human=True)

# Create a GoogleGenerativeAIEmbeddings object for embedding our Prompts and documents

Embeddings = GoogleGenerativeAIEmbeddings(model="models/embedding-001")Explanation

- We start by setting up the API Key.

- Then we import the necessary classes from the langchain_google_genai module, these include the ChatGoogleGenerativeAI and the GoogleGenerativeAIEmbeddings.

- Firstly we create a ChatGoogleGenerativeAI Object, telling the model name, which here is the Gemini-pro, and whether to convert system messages to human-readable format, which we set to True.

- Then we create a GoogleGenerativeAIEmbeddings Object for embedding Prompts and documents. For this, we go with the embedding-001 model.

You can visit this link to get your free API Key. After getting the API Key, paste in the above code in place of “YOUR GOOGLE API KEY”.

Step2: Data Loading

The first step in a general Retrieval Augmented Generation involves data loading. Here is the code created in LangChain to fetch and load data from the given URL.

# --- Data Loading ---

# Import the WebBaseLoader class from the langchain_community.document_loaders module.

from langchain_community.document_loaders import WebBaseLoader

# Create a WebBaseLoader object with the URL of the blog post to load.

loader = WebBaseLoader("https://lilianweng.github.io/posts/2023-03-15-prompt-engineering/")

# Load the blog post and store the documents in the `docs` variable.

docs = loader.load()- We import the WebBaseLoader class from the langchain_community.document_loaders module. This class can be worked with to load documents from the web.

- Then we create an Instance of the WebBaseLoader class named loader and pass the URL “https://lilianweng.github.io/posts/2023-03-15-prompt-engineering/” to the constructor of WebBaseLoader.

- We call the load() method on the loader object. This function fetches and loads the documents from the given web URL. The loaded docs are stored in the variable docs.

After running the above code, the variable docs will contain the documents retrieved from the given web URL. After loading the data, we need to chunk them into smaller pieces so that we can extract/retrieve only relevant data when necessary. To perform this, we will be working with the below code.

Step3: Data Splitting/ Creating Chunks

Let us now split the data and create chunks.

# --- Splitting / Creating Chunks ---

# Import the RecursiveCharacterTextSplitter class from the

# langchain.text_splitter module.

from langchain.text_splitter import RecursiveCharacterTextSplitter

# Create a RecursiveCharacterTextSplitter object using the provided

# chunk size and overlap.

text_splitter = RecursiveCharacterTextSplitter(chunk_size=300,

chunk_overlap=50)

# Split the documents in the `docs` variable into smaller chunks and

#store the resulting splits in the `splits` variable.

splits = text_splitter.split_documents(docs)Explanation

- We import the RecursiveCharacterTextSplitter class from the langchain.text_splitter module. This class is useful for creating chunks for the documents that we have downloaded.

- We will then create an Instance of the RecursiveCharacterTextSplitter class named text_splitter. To this object, we pass the chunk_size=300 and chunk_overlap=50. This tells that we create chunks of 300 size and each neighboring chunk will have a chunk overlap of 50 tokens.

- Finally, we call the split_documents() function on the text_splitter object. This function splits the documents stored in the variable docs into chunks based on the given chunk size and overlap.

Step4: Storing Documents

Now we have created our documents and have chunked them, the next step is to store these documents in a vector store so that we can retrieve them later.

The code for this will be:

# --- Creating Embeddings by Passing Hyde Embeddings to Vector Store ---

from langchain_community.vectorstores import Chroma

# passing the hyde embeddings to create and store embeddings

vectorstore = Chroma.from_documents(documents=splits,

collection_name='my-collection',

embedding=Embeddings)

# Creating Retriever

retriever = vectorstore.as_retriever(search_type="similarity", search_kwargs={"k": 4})Explanation

- We import the Chroma class from the langchain_community.vectorstore module, which we will work with for creating a ChromaDB vector store for document chunks.

- Now we instantiate a Chroma object named vectorstore and call the from_documents() function to create the vector store, providing splits for vectorization, giving the collection_name ‘my-collection’, and giving in the Embeddings for embedding.

- This will use our Google Embeddings model for creating the embedding vectors for our chunks. Now our vector store is ready and contains the embeddings for all our chunks.

- We will now create a retriever that lets us retrieve similar chunks from our vectorstore.

- For this, we create a retriever object from the vectorstore through the as_retriever() function. Then we configure the retriever for similarity-based searches by setting search_type to “similarity” and specifying search parameters with search_kwargs as {“k”: 4} to retrieve the top 4 similar documents.

Step5: Creating a Prompt Template for Generating HyDE

Now we are finally done with the data loading, preprocessing and storing part. Now we will be creating a Prompt Template for generating hypothetical documents for the user queries. The code for this can be found below:

# Importing the Prompt Template

from langchain.prompts import ChatPromptTemplate

# Creating the Prompt Template

template = """For the given question try to generate a hypothetical answer

Only generate the answer and nothing else:

Question: {question}

"""

Prompt = ChatPromptTemplate.from_template(template)

query = Prompt.format(question = 'What are different Chain of Thought(CoT) Prompting?')

hypothetical_answer = llm.invoke(query).content

print(hypothetical_answer)Explanation

- We define a Prompt Template that contains the Prompt which tells the Large Language Model to generate hypothetical answers based on questions.

- We then pass it on to a ChatPromptTemplate object named Prompt by parsing the defined template string.

- Then create a query by formatting the template with a specific question using Prompt.format(question=’What is Task Decomposition?’).

- Then we call the llm object to invoke the language model with the generated Query Prompt.

- Finally, we retrieve the content of the generated hypothetical answer by accessing .content from the result. Then we print it to display the generated content by the LLM.

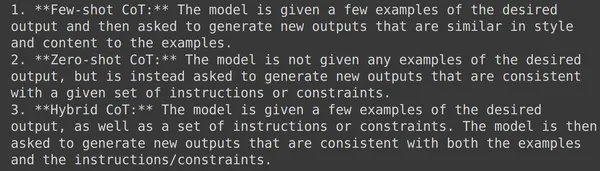

Step6: Running the code for final results

Running the above will result in a Hypothetical Document/Answer generated by the Large Language Model based on the given user query.

We can see that based on the user query, the Large Language Model has generated a possible answer i.e. a Hypothetical Document. Now let’s try to retrieve documents from our vector store that are relevant to this Hypothetical Answer/Document.

# retrieval with hypothetical answer/document

similar_docs = retriever.get_relevant_documents(hypothetical_answer)

for doc in similar_docs:

print(doc.page_content)

print()- In the above code, we call the .get_relevant_documents() function of the retriever object. To this function, we pass the hypothetical_answer that we have just generated.

- This will then retrieve 4 relevant chunks from the vector store and store it in the variable similar_docs.

- We then print the content of each document chunk by iterating through the list of similar chunks.

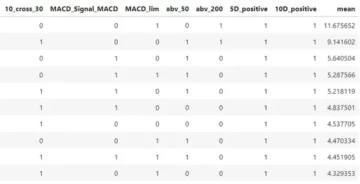

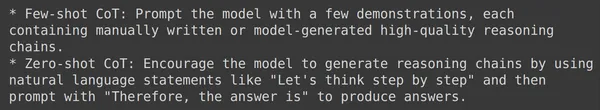

After running the code, below we can see the relevant documents retrieved.

Step7: Getting the Relevant Documents

We can see that all four chunks retrieved seem to have a close relationship to the original query asked by the user. Especially the first 3 chunks have an ample amount of information needed by the Large Language Model to generate the answer. Let us try getting the relevant documents from the plain Prompt. The code for this will be:

# retrieval with original query

similar_docs = retriever.get_relevant_documents('What are different

Chain of Thought(CoT) Prompting?')

for doc in similar_docs:

print(doc.page_content)

print()Outputs :

Types of CoT Prompts#

Two main types of CoT Prompting:

Chain-of-thought (CoT) prompting (Wei et al. 2022) generates a

sequence of short sentences to describe reasoning logics step by

step, known as reasoning chains or rationales, to eventually lead to

the final answer. The benefit of CoT is more pronounced for complicated

reasoning tasks, while using

Chain-of-Thought (CoT)#

Table of Contents

Basic Prompting

Zero-Shot

Few-shot

Tips for Example Selection

Tips for Example Ordering

Instruction Prompting

Self-Consistency Sampling

Chain-of-Thought (CoT)

Types of CoT Prompts

Tips and Extensions

Automatic Prompt Design

Augmented Language ModelsHere we can see that the retrieved documents do not contain in-depth information when compared to the one with the Hypothetical Documents Embeddings Approach. So let us pass these documents retrieved through the Hyde way to the LLM and see the output that it generates.

# Creating the Prompt Template

template = """Answer the following question based on this context:

{context}

Question: {question}

"""

Prompt = ChatPromptTemplate.from_template(template)

# Creating a function to format the retrieved docs

def format_docs(docs):

return "nn".join(doc.page_content for doc in docs)

formatted_docs = format_docs(similar_docs)

Query_Prompt = Prompt.format(context=formatted_docs,

question="What are different Chain of Thought(CoT) Prompting?")

print(Query_Prompt)

response = llm.invoke(Query_Prompt)

print(response.content)Explanation

- We now create a new Prompt Template. This template is designed to take in the documents that were retrieved through the generated Hypothetical Document and the original user query.

- Then we instantiate a ChatPromptTemplate object named Prompt by parsing the defined Prompt Template string.

- Create a function format_docs(docs) to format the retrieved documents. It takes in a list of Langchain Document Objects and then it extracts the text content from each Document Object and joins them

- Then we apply the format_docs() function to similar_docs to create formatted_docs containing the formatted content.

- Generate a query Prompt Query_Prompt by formatting the Prompt template with the formatted context and the question “What are different Chain of Thought(CoT)Promptinh?”.

- Finally, we call the LLM with the .invoke() function and pass in the Query_Prompt that we have just generated. The LLM will take in the Query_Prompt containing the retrieved documents through Hypothetical Answer and generate a final response to the user query and we then print the contents.

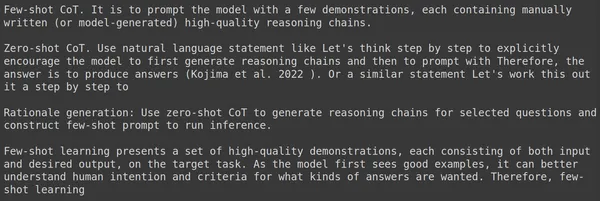

After running the code, the Large Language Model generated the following response to the user query.

We can notice that it has taken in the retrieved documents that we were able to get through the Hypothetical Answer and then generate a correct answer to the user question without any hallucination. Now, this is the manual processing of performing Hypothetical Document Embeddings, where we can do it from scratch by defining a prompt to create a Hypothetical Answer and then performing a similar search for this Answer and the document chunks.

HyDE Using Langchain Predefined Functions

Luckily Langchain comes with a predefined class for HyDE. Let us take a look at it through the below code:

from langchain_google_genai import GoogleGenerativeAI

from langchain_google_genai import GoogleGenerativeAIEmbeddings

llm = GoogleGenerativeAI(model="gemini-pro")

Emebeddings = GoogleGenerativeAIEmbeddings(model="models/embedding-001")

from langchain_community.document_loaders import WebBaseLoader

loader = WebBaseLoader("https://lilianweng.github.io/posts/2023-03-15-prompt-engineering/")

docs = loader.load()

from langchain.text_splitter import RecursiveCharacterTextSplitter

text_splitter = RecursiveCharacterTextSplitter(chunk_size=300,chunk_overlap=50)

splits = text_splitter.split_documents(docs)

from langchain.chains import HypotheticalDocumentEmbedder

hyde_embeddings = HypotheticalDocumentEmbedder.from_llm(llm,

Embeddings,

prompt_key = "web_search")

from langchain_community.vectorstores import Chroma

vectorstore = Chroma.from_documents(documents=splits,

collection_name='collection-1',

embedding=hyde_embeddings)

retriever = vectorstore.as_retriever(search_type="similarity", search_kwargs={"k": 4})

from langchain.schema.runnable import RunnablePassthrough

from langchain_core.output_parsers import StrOutputParser

from langchain.prompts import PromptTemplate, ChatPromptTemplate

template = """Answer the following question based on this context:

{context}

Question: {question}

"""

prompt = ChatPromptTemplate.from_template(template)

def format_docs(docs):

return "nn".join(doc.page_content for doc in docs)

rag_chain = (

{"context": retriever | format_docs, "question": RunnablePassthrough()}

| prompt

| llm

| StrOutputParser()

)

response = rag_chain.invoke("What are different Chain of Thought(CoT) prompting?")

print(response)

The code is the same till the part where we chunk the documents that we have downloaded from the web.

Explanation

- We import the HypotheticalDocumentEmbedder from the langchain.chains module. This class will take care of creating the Hypothetical Answers, embedding them, and retrieving the similar chunks

- Next, we create an object of HypotheticalDocumentEmbedder and call the .from_llm() function, where we pass in the llm, which will be necessary for creating the Hypothetical Answer, Embeddings, which will be necessary for creating the embedding vectors for the Hypothetical Answers and the Prompt Key, i.e. “web search”, where the LLM may refer the internet to get a Hypothetical Answer

- The hyde_embeddings will even have an inbuilt Prompt that will be necessary for generating the Hypothetical Answers

- Next, we store the documents in the Chroma Vector Store. Here instead of giving the Embedding model, we pass in the hyde_embeddings, so that we can retrieve similar chunks of the Hypothetical Answer

- Next, we define a Prompt Template and create our retriever object

- Then using the Prompt, Retriever, LLM, and Output Parser, we create a chain through LCEL (Langchain Expression Language)and assign it to the rag_chain variable

Now we can just call the rag_chain’s invoke() function and pass it the question. The rag_chain will take care of creating the Hypothetical Answers for us from the provided query, then create embedding vectors for them and retrieve similar chunks from the vector store. Then format these chunks to fit in the Prompt Template and pass the final Prompt to the Large Language Model, which will generate an answer based on the retrieved chunks and the user query.

Below is the output generated after running this code:

We can see that the answer generated from the LLM is similar to the answer that we generated when were doing the Hypothetical Document Embeddings from scratch. But do note that this in-built Hyde is not producing good results, so it is better to test both the from-scratch approach and this approach before going forward. So here the HypotheticalDocumentEmbedder takes care of this work so that we can start building efficient RAG applications.

Conclusion

In this guide, we delved into the realm of Hypothetical Document Embeddings (HyDE) a strategy to improve retrieval accuracy in Retrieval Augmented Generation (RAG) systems. By leveraging HyDE, we aimed to overcome the limitations of traditional RAG practices, which include accurately retrieving relevant documents for generating responses. Through the guide and practical implementation of HyDE using LangChain, we explored its potential in improving retrieval accuracy and reducing hallucinations, thereby contributing to more reliable and contextually relevant responses from Large Language Models (LLMs). By understanding the intricacies of HyDE and its practical application, we can pave the way for more efficient and effective RAG systems.

Key Takeaways

- Explored that RAG has become a prominent technology, but traditional approaches face challenges in accurate document retrieval.

- learned that HyDE provides a transformative solution by generating hypothetical documents based on user queries to improve retrieval accuracy.

- By reducing hallucinations through better retrieval of meaningful chunks, HyDE contributes to more reliable responses from Large Language Models (LLMs).

- Practical implementation of HyDE involves steps like data loading, preprocessing, generating hypothetical answers, retrieving relevant documents, and integrating with LLMs.

- LangChain provides tools and libraries for implementing HyDE efficiently, including predefined classes like HypotheticalDocumentEmbedder for streamlined integration into RAG systems

Frequently Asked Questions

A. RAG is a framework/tool for generating text by combining retrieval and generation. It retrieves relevant information from a document store based on a user query and then uses that information to generate a response. However, traditional RAG can struggle if the retrieved information isn’t a good match for the query.

A. The biggest hurdle in RAG is retrieving the right documents. Traditional RAG relies on pure user query matching, which can be inaccurate. HyDE addresses this by creating “hypothetical documents” based on the user query. These hypothetical documents are then used to retrieve more relevant information from the document store.

A. The guide explores implementing HyDE using the LangChain library. It includes creating hypothetical documents, storing them in a vector store, and retrieving relevant documents based on the hypothetical documents.

A. The quality of the generated hypothetical documents can impact the retrieval accuracy. HyDE needs extra computational resources compared to traditional RAG.

A. Langchain provides a built-in class called HypotheticalDocumentEmbedder that simplifies the HyDE process. This class handles generating hypothetical documents, embedding them, and retrieving relevant chunks.

The media shown in this article is not owned by Analytics Vidhya and is used at the Author’s discretion.

- SEO Powered Content & PR Distribution. Get Amplified Today.

- PlatoData.Network Vertical Generative Ai. Empower Yourself. Access Here.

- PlatoAiStream. Web3 Intelligence. Knowledge Amplified. Access Here.

- PlatoESG. Carbon, CleanTech, Energy, Environment, Solar, Waste Management. Access Here.

- PlatoHealth. Biotech and Clinical Trials Intelligence. Access Here.

- Source: https://www.analyticsvidhya.com/blog/2024/04/enhancing-rag-with-hypothetical-document-embedding/

- :has

- :is

- :not

- :where

- $UP

- 11

- 2022

- 300

- 4

- 5

- 50

- 8

- a

- Able

- About

- above

- accessing

- accuracy

- accurate

- accurately

- acts

- addresses

- After

- aimed

- AL

- All

- Allowing

- allows

- along

- amount

- an

- analytics

- Analytics Vidhya

- and

- answer

- answers

- any

- api

- Application

- applications

- Apply

- approach

- approaches

- ARE

- around

- article

- AS

- asked

- assign

- At

- augmented

- Automatic

- available

- based

- basic

- BE

- become

- been

- before

- below

- benefit

- BEST

- Better

- between

- Beyond

- Biggest

- bind

- Blog

- blogathon

- both

- Building

- built-in

- Bunch

- but

- by

- call

- called

- CAN

- care

- certain

- chain

- chains

- challenges

- chances

- chat

- class

- classes

- Close

- code

- combining

- comes

- compare

- compared

- complicated

- components

- computational

- contain

- containing

- contains

- content

- contents

- context

- contributes

- contributing

- convert

- correct

- create

- created

- Creating

- data

- def

- define

- defined

- defining

- depends

- describe

- Design

- designed

- developed

- diagram

- different

- discretion

- discussed

- Display

- do

- docs

- document

- documents

- does

- doing

- done

- downloaded

- downloading

- e

- E&T

- each

- easily

- easy

- Effective

- effectiveness

- efficient

- efficiently

- either

- else

- embedding

- encoding

- enhance

- enhancing

- Environment

- especially

- Ether (ETH)

- Even

- eventually

- example

- Explored

- explores

- expression

- extensions

- extra

- Extracts

- Face

- faced

- facing

- fake

- final

- Finally

- finding

- First

- fit

- flaws

- Focus

- following

- For

- format

- Forward

- found

- four

- Free

- from

- function

- Gemini

- General

- generate

- generated

- generates

- generating

- generation

- get

- getting

- GitHub

- given

- Giving

- Go

- going

- good

- Google’s

- guide

- Handles

- Have

- help

- here

- How

- However

- HTTPS

- Hub

- HuggingFace

- human-readable

- hurdle

- i

- if

- Impact

- implement

- implementation

- implementing

- import

- importing

- improve

- improved

- improving

- in

- in-depth

- inaccurate

- inception

- include

- includes

- Including

- information

- inputs

- install

- installing

- instance

- instead

- integrate

- Integrating

- integration

- intent

- Internet

- into

- intricacies

- introduced

- Introduces

- involves

- IT

- iterating

- ITS

- itself

- Joins

- just

- Key

- keywords

- known

- language

- large

- largely

- later

- lead

- let

- Lets

- leveraging

- libraries

- Library

- like

- limitations

- List

- llm

- load

- loader

- loading

- loads

- Look

- Main

- manual

- many

- Match

- matching

- May..

- meaningful

- Media

- messages

- method

- methods

- Mitigate

- model

- models

- module

- Modules

- more

- more efficient

- name

- Named

- necessary

- Need

- needed

- needs

- neighboring

- New

- next

- no

- normal

- note

- nothing

- Notice..

- now

- nuanced

- object

- objects

- of

- on

- ONE

- only

- open

- open source

- or

- ordering

- original

- OS

- our

- output

- Overcome

- overlap

- owned

- package

- parameters

- part

- pass

- passed

- Passing

- patterns

- pave

- perform

- performing

- pieces

- Place

- Plain

- plato

- Plato Data Intelligence

- PlatoData

- poor

- Popular

- possible

- Post

- potential

- Practical

- practice

- practices

- prevent

- Problem

- process

- processing

- producing

- prominent

- prompts

- pronounced

- proposed

- provided

- providers

- provides

- providing

- published

- pure

- Python

- quality

- queries

- query

- question

- Questions

- rag

- Raw

- ready

- really

- realm

- reasoning

- reducing

- refer

- regular

- relationship

- relevant

- reliable

- relies

- Resources

- Respond

- response

- responses

- result

- resulting

- Results

- retrieval

- return

- right

- Role

- running

- same

- Science

- scratch

- Search

- searches

- Section

- see

- seem

- selection

- send

- sent

- Sequence

- set

- setting

- Short

- shown

- similar

- Simple

- simplifies

- since

- Size

- sizes

- smaller

- So

- solution

- Solutions

- SOLVE

- sometimes

- Source

- specific

- specifying

- split

- Splits

- standard

- start

- Starting

- Step

- Steps

- store

- stored

- stores

- storing

- Strategy

- streamlined

- String

- Struggle

- Suggests

- support

- Switch

- system

- Systems

- table

- tackle

- Take

- taken

- takes

- taking

- talking

- Task

- tasks

- Technology

- telling

- tells

- template

- test

- text

- textual

- that

- The

- Them

- then

- There.

- thereby

- These

- this

- Through

- Thus

- till

- tips

- to

- together

- Tokens

- tools

- top

- traditional

- transformative

- tries

- true

- try

- trying

- two

- type

- types

- understand

- understanding

- URL

- us

- use

- used

- useful

- User

- uses

- using

- variable

- various

- vector

- vectors

- very

- Visit

- was

- Way..

- we

- web

- webp

- WELL

- were

- What

- when

- whether

- which

- while

- widely

- will

- with

- without

- Work

- worked

- working

- works

- Wrong

- yields

- Your

- zephyrnet