The timeline of artificial intelligence takes us on a captivating journey through the evolution of this extraordinary field. From its humble beginnings to the present day, AI has captivated the minds of scientists and sparked endless possibilities.

It all began in the mid-20th century, when visionary pioneers delved into the concept of creating machines that could simulate human intelligence. Their groundbreaking work set the stage for the birth of artificial intelligence.

In recent years, AI has become an integral part of our lives. It now possesses the ability to understand human language, recognize objects, and make predictions. Its applications span diverse domains, from healthcare to transportation, transforming the way we live and work.

Join us as we set out on this journey through the timeline of artificial intelligence.

Where does the timeline of artificial intelligence start?

Ever since the 1940s, artificial intelligence (AI) has been a part of our lives. However, some experts argue that the term itself can be misleading because AI technology is still a long way off from achieving true human-like intelligence. It hasn’t reached a stage where it can match the remarkable achievements of mankind. To develop “strong” AI, which only exists in science fiction for now, significant progress in fundamental science would be required to create a model of the entire world.

Nonetheless, starting from around 2010, there has been a renewed surge of interest in the field. This can be attributed primarily to remarkable advancements in computer processing power and the availability of vast amounts of data. Amidst all the excitement, it’s important to approach the topic with an objective perspective, as there have been numerous exaggerated promises and unfounded worries that occasionally make their way into discussions.

In our view, it would be helpful to briefly review the timeline of artificial intelligence as a means to provide context for ongoing debates. Let us start with the ideas which are the foundation of AI, reaching far back to ancient times.

Ancient times

The foundation of today’s AI can be traced back to ancient times when early thinkers and philosophers laid the groundwork for the concepts that underpin this field. While the technological advancements we see today were not present in those eras, the seeds of AI were sown through philosophical musings and theoretical explorations.

One can find glimpses of AI-related ideas in ancient civilizations such as Greece, Egypt, and China. For instance, in ancient Greek mythology, there are tales of automatons, which were mechanical beings capable of performing tasks and even exhibiting intelligence. These tales reflected early notions of creating artificial life, albeit in a mythical context.

In ancient China, the story of “Yan Shi’s automaton” is often cited as an early precursor to the development of AI. Yan Shi, an engineer and inventor from the 3rd century BC, is said to have crafted a mechanical figure that could mimic human movements and respond to external stimuli. This can be seen as an early attempt to replicate human-like behavior through artificial means.

Additionally, ancient philosophers such as Aristotle pondered the nature of thought and reasoning, laying the groundwork for the study of cognition that forms a crucial aspect of AI research today. Aristotle’s ideas on logic and rationality have influenced the development of algorithms and reasoning systems in modern AI.

AI’s birth

Between 1940 and 1960, a convergence of technological advancements and the exploration of combining machine and organic functions had a profound impact on the development of artificial intelligence (AI).

Norbert Wiener, a pioneering figure in cybernetics, recognized the importance of integrating mathematical theory, electronics, and automation to create a comprehensive theory of control and communication in both animals and machines. Building on this foundation, Warren McCulloch and Walter Pitts formulated the first mathematical and computer model of the biological neuron in 1943. Although neither coined the term “artificial intelligence,” John von Neumann and Alan Turing played pivotal roles in developing the underlying technologies. They facilitated the transition of computers from 19th-century decimal logic to binary logic, codifying the architecture of modern computers and demonstrating their universal capabilities for executing programmed tasks.
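The neuron model mentioned above can be illustrated with a simple threshold unit. The following is a minimal sketch in the spirit of the McCulloch-Pitts idea, not a reproduction of the 1943 paper: the function names are hypothetical, and inhibition is modeled with a negative weight as a simplification.

```python
def mcp_neuron(inputs, weights, threshold):
    """Return 1 if the weighted sum of binary inputs reaches the threshold, else 0."""
    total = sum(x * w for x, w in zip(inputs, weights))
    return 1 if total >= threshold else 0


def logical_and(a, b):
    # Both inputs must be active to reach a threshold of 2.
    return mcp_neuron([a, b], [1, 1], threshold=2)


def logical_or(a, b):
    # A single active input is enough to reach a threshold of 1.
    return mcp_neuron([a, b], [1, 1], threshold=1)


def logical_not(a):
    # Assumption: inhibition is approximated by a negative weight.
    return mcp_neuron([a], [-1], threshold=0)


if __name__ == "__main__":
    for a in (0, 1):
        for b in (0, 1):
            print(a, b, "AND:", logical_and(a, b), "OR:", logical_or(a, b))
```

Units like these can be wired together to compute any Boolean function, which is part of why the model was influential.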

Turing, in particular, introduced the “imitation game” in his renowned 1950 article “Computing Machinery and Intelligence,” in which he asked whether a person conversing by teletype could tell whether the other party was a human or a machine. This seminal work sparked discussions on defining the boundaries between humans and machines.

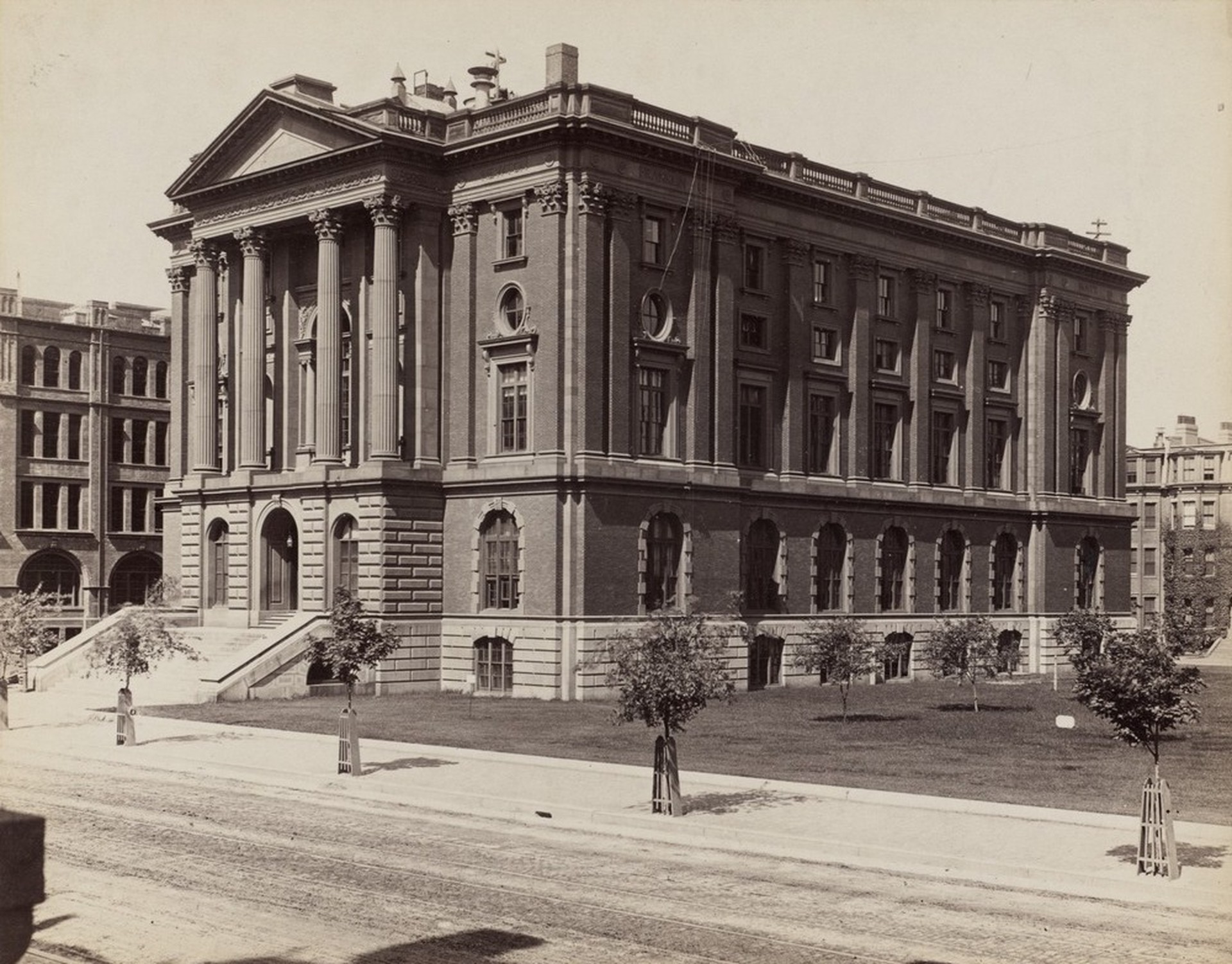

The credit for coining the term “AI” goes to John McCarthy, who was at Dartmouth College at the time and later moved to MIT. Marvin Minsky defined AI as the development of computer programs that engage in tasks that currently rely on high-level mental processes such as perceptual learning, memory organization, and critical reasoning. The discipline officially began to take shape at a summer workshop held at Dartmouth College in 1956, with sustained contributions from McCarthy, Minsky, and four others.

In the early 1960s, there was a decline in the enthusiasm surrounding AI despite its ongoing promise and excitement. The limited memory capacity of computers posed challenges in using computer languages effectively. However, even during this period, significant foundational work was being laid.

For instance, the Information Processing Language (IPL) emerged, enabling the development of programs like the Logic Theorist Machine (LTM) in 1956. The LTM aimed to prove mathematical theorems and introduced concepts such as solution trees that continue to be relevant in AI today.

The golden age of AI

Stanley Kubrick’s 1968 film “2001: A Space Odyssey” introduced audiences to a computer named HAL 9000, which encapsulated the ethical concerns surrounding artificial intelligence. The film raised questions about whether AI would be highly sophisticated and beneficial to humanity or pose a potential danger.

While the influence of the movie is not rooted in scientific accuracy, it contributed to raising awareness of these themes, much like science fiction author Philip K. Dick, who constantly pondered whether machines could experience emotions.

The first microprocessors appeared in the early 1970s, and by the end of the decade interest in AI was resurging. Expert systems, which aimed to replicate human reasoning, reached their peak in the years that followed. Work on DENDRAL began at Stanford University in 1965, and Stanford followed with MYCIN in 1972. These systems relied on an “inference engine” that drew logical conclusions from a base of expert knowledge when given relevant information.
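To make the idea of an inference engine concrete, here is a minimal sketch of one common strategy, forward chaining over if-then rules. The rules, fact names, and the choice of forward chaining are illustrative assumptions, not a reconstruction of how MYCIN or DENDRAL worked.

```python
def forward_chain(facts, rules):
    """Apply if-then rules repeatedly until no new facts can be derived."""
    known = set(facts)
    changed = True
    while changed:
        changed = False
        for conditions, conclusion in rules:
            # Fire a rule only when all of its conditions are already known.
            if conclusion not in known and all(c in known for c in conditions):
                known.add(conclusion)
                changed = True
    return known


# Hypothetical toy rules: (conditions, conclusion).
RULES = [
    (("fever", "cough"), "possible_infection"),
    (("possible_infection", "positive_culture"), "bacterial_infection"),
    (("bacterial_infection",), "consider_antibiotics"),
]

derived = forward_chain({"fever", "cough", "positive_culture"}, RULES)
print(sorted(derived))
```

Even in this toy form, the conclusion depends on a chain of rules firing in sequence. With hundreds of rules, tracing why a system reached a given answer becomes much harder.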

Promising significant advancements, these systems faced challenges by the late 1980s or early 1990s. Implementing and maintaining such complex systems proved labor-intensive, with a “black box” effect obscuring the machine’s underlying logic when dealing with hundreds of rules. Consequently, the creation and upkeep of these systems became increasingly difficult, especially when more affordable and efficient alternatives emerged. It’s worth noting that during the 1990s, the term “artificial intelligence” faded from academic discourse, giving way to more subdued terms like “advanced computing.”

In May 1997, IBM’s supercomputer Deep Blue achieved a significant milestone by defeating chess champion Garry Kasparov. Although Deep Blue could analyze only a small portion of the game’s complexity, the defeat of a human champion by a machine remained a symbolically significant event.

It is important to recognize that while “2001: A Space Odyssey” and Deep Blue’s victory didn’t directly drive the funding and advancement of AI, they contributed to the broader cultural dialogue surrounding AI’s potential and its implications for humanity, accelerating the timeline of artificial intelligence.

Modern times

AI technologies gained significant attention following Deep Blue’s victory against Garry Kasparov, reaching their peak around the mid-2010s.

Two key factors contributed to the new boom in the field around 2010:

- Access to vast amounts of data: The availability of enormous datasets became crucial for AI advancements. The abundance of data allowed algorithms to learn and make predictions based on extensive information.

- Discovery of the efficiency of graphics card processors: The realization that graphics card processors could run AI algorithms with exceptional efficiency further fueled progress in the field. This breakthrough enabled faster and more powerful computations, propelling AI research forward.

One notable public achievement during this time was IBM’s AI system, Watson, defeating two champions on the game show Jeopardy! in 2011. Another significant milestone came in 2012, when Google X’s AI successfully identified cats in videos using over 16,000 processors. This demonstrated the potential of machines to learn to differentiate between various objects.

Google’s AI AlphaGo defeated Fan Hui, the European Go champion, in October 2015 and Lee Sedol, the world champion, in March 2016. These victories marked a radical departure from expert systems and highlighted the shift towards inductive learning.

Instead of manually coding rules as in expert systems, the focus shifted to allowing computers to independently discover patterns and correlations through large-scale data analysis.

Deep learning emerged as a highly promising machine learning technology for various applications. Researchers such as Geoffrey Hinton, Yoshua Bengio, and Yann LeCun initiated a research program in 2003 to revolutionize neural networks. Their experiments, conducted at institutions like Microsoft, Google, and IBM, demonstrated the success of deep learning in significantly reducing error rates in speech and image recognition tasks.

The adoption of deep learning by research teams rapidly increased due to its undeniable advantages. While there have been significant advancements in text recognition, experts like Yann LeCun acknowledge that there is still a long way to go before creating text understanding systems.

A notable challenge lies in the development of conversational agents. Although our smartphones can transcribe instructions accurately, they currently struggle to properly contextualize the information or discern our intentions, highlighting the complexity of natural language understanding.

What are the current trends and developments in AI?

The timeline of artificial intelligence has no end point: the field is constantly evolving, and several key trends and developments are shaping its current landscape.

Machine learning

Machine learning is a branch of artificial intelligence (AI) that focuses on enabling machines to learn and improve from experience without being explicitly programmed. Instead of following rigid instructions, machine learning algorithms analyze data, identify patterns, and make predictions or decisions based on that analysis.

By learning from large amounts of data, machines can automatically adapt and improve their performance over time.
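As a small illustration of learning from data rather than from hand-written rules, the sketch below fits a straight line to example points by gradient descent. The data, function name, and hyperparameters are assumptions chosen for the example.

```python
def fit_line(xs, ys, learning_rate=0.01, epochs=5000):
    """Fit y = w * x + b by gradient descent on mean squared error."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(epochs):
        # Gradients of the mean squared error with respect to w and b.
        grad_w = sum(2 * (w * x + b - y) * x for x, y in zip(xs, ys)) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in zip(xs, ys)) / n
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
    return w, b


# Toy data generated from y = 2x + 1; the program is never told this rule.
xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [1.0, 3.0, 5.0, 7.0, 9.0]

w, b = fit_line(xs, ys)
print(f"learned w={w:.3f}, b={b:.3f}")
```

The program recovers the relationship between inputs and outputs from the examples alone, and more data or more training steps generally tighten the estimate.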

Deep learning

Deep learning, powered by neural networks with multiple layers, continues to drive advancements in AI. Researchers are exploring architectures such as convolutional neural networks (CNNs) for image and video processing and recurrent neural networks (RNNs) for sequential data analysis.

Techniques like transfer learning, generative adversarial networks (GANs), and reinforcement learning are also gaining attention.
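To show why multiple layers matter, here is a minimal sketch of a two-layer network computing XOR, a function that no single threshold unit can represent. The weights are set by hand for illustration. In real deep learning they would be learned from data, and the names used here are hypothetical.

```python
def step(z):
    """Threshold activation: fire only when the input is positive."""
    return 1 if z > 0 else 0


def layer(inputs, weights, biases, activation):
    """Compute one fully connected layer: activation(W @ inputs + b)."""
    return [
        activation(sum(w * x for w, x in zip(row, inputs)) + bias)
        for row, bias in zip(weights, biases)
    ]


# Hidden layer: the first unit behaves like OR, the second like AND.
HIDDEN_W = [[1, 1], [1, 1]]
HIDDEN_B = [-0.5, -1.5]

# Output unit: fires when OR is active and AND is not.
OUT_W = [[1, -1]]
OUT_B = [-0.5]


def xor_net(a, b):
    hidden = layer([a, b], HIDDEN_W, HIDDEN_B, step)
    return layer(hidden, OUT_W, OUT_B, step)[0]


if __name__ == "__main__":
    for a in (0, 1):
        for b in (0, 1):
            print(a, b, "->", xor_net(a, b))
```

The hidden layer builds intermediate features that the output layer combines. Deeper architectures such as CNNs and RNNs apply the same principle at much larger scale.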

Big data

Big data refers to the vast amounts of structured and unstructured data that are too large and complex to be processed effectively with traditional data processing methods. It encompasses data from sources such as social media, sensors, and transactions. The challenge with big data lies in its volume, velocity, and variety.

Advanced analytics and AI techniques, including machine learning, are employed to extract valuable insights, patterns, and trends from this data, enabling organizations to make data-driven decisions and gain a competitive edge.

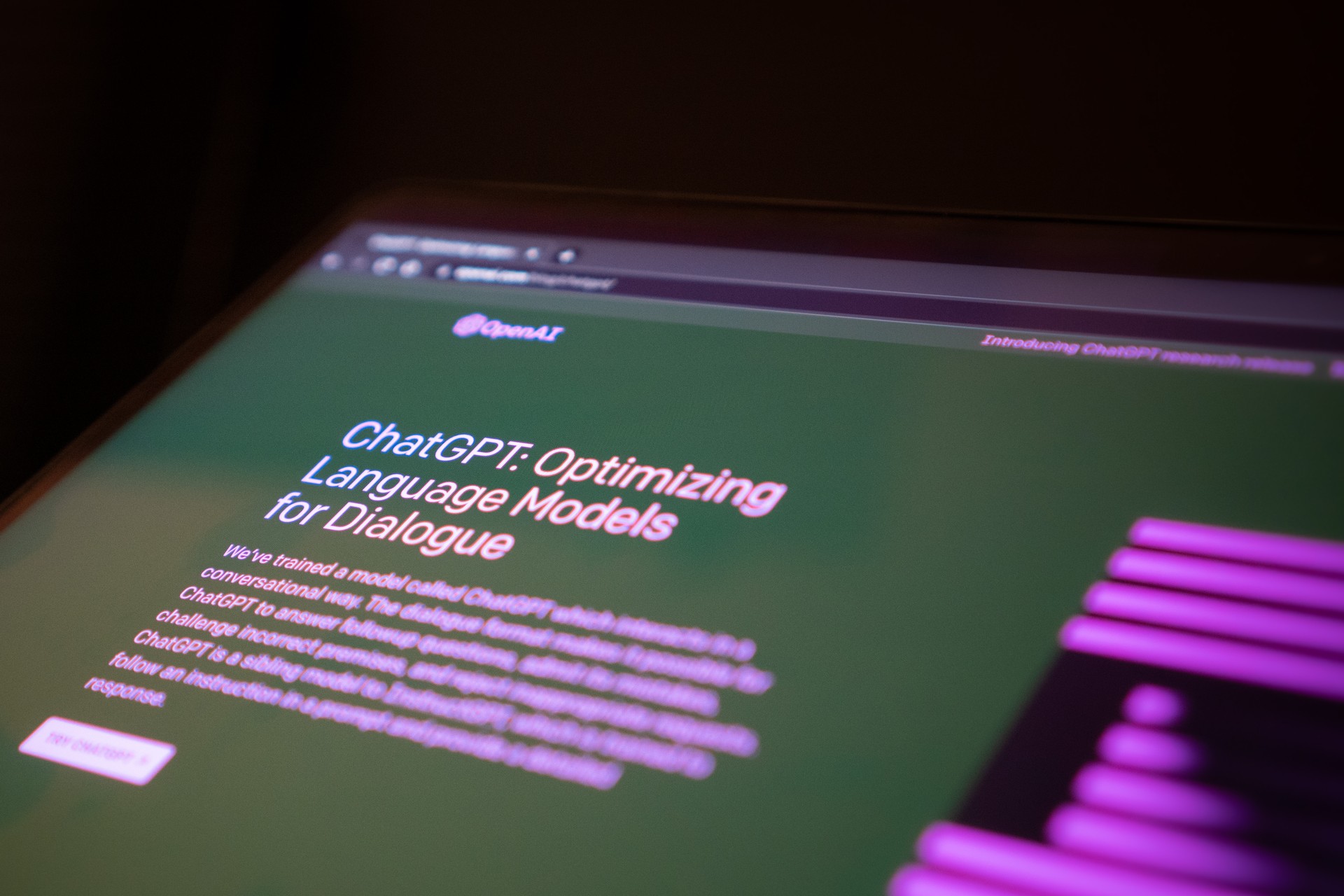

Chatbots

Chatbots are among the most visible products of AI’s development. They are AI-powered computer programs designed to simulate human-like conversations and provide interactive experiences for users. They use natural language processing (NLP) techniques to understand and interpret user inputs, respond with relevant information, and carry out tasks or provide assistance.

Chatbots can be integrated into messaging platforms, websites, or applications, and they can handle a wide range of queries, provide recommendations, facilitate transactions, and offer customer support, among other functions.
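The basic loop of interpreting an input and choosing a response can be sketched with simple keyword matching. This is a deliberately naive illustration: production chatbots rely on far more capable language models, and the intents, keywords, and replies below are hypothetical.

```python
import re

# Hypothetical intents: name -> (trigger keywords, canned reply).
INTENTS = {
    "greeting": ({"hello", "hi", "hey"}, "Hello! How can I help you?"),
    "hours": ({"hours", "open", "closing"}, "We are open 9am to 5pm, Monday to Friday."),
    "refund": ({"refund", "return"}, "You can request a refund within 30 days of purchase."),
}
FALLBACK = "Sorry, I did not understand that. Could you rephrase?"


def tokenize(text):
    """Lowercase the text and split it into a set of words."""
    return set(re.findall(r"[a-z']+", text.lower()))


def reply(message):
    """Pick the intent whose keywords overlap most with the message."""
    words = tokenize(message)
    best_intent, best_overlap = None, 0
    for name, (keywords, _) in INTENTS.items():
        overlap = len(words & keywords)
        if overlap > best_overlap:
            best_intent, best_overlap = name, overlap
    if best_intent is None:
        return FALLBACK
    return INTENTS[best_intent][1]


if __name__ == "__main__":
    for message in ("Hi there", "What are your opening hours?", "Tell me a joke"):
        print(message, "->", reply(message))
```

A matcher like this fails as soon as users phrase things unexpectedly, which is the gap that statistical NLP techniques aim to close.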

Robotics

AI robotics combines artificial intelligence with robotics to create intelligent machines that can perform tasks autonomously or with minimal human intervention. AI-powered robots are equipped with sensors, perception systems, and decision-making algorithms to perceive and interact with their environment.

They can analyze sensory data, make decisions, and execute actions accordingly. AI robotics finds applications in areas such as industrial automation, healthcare, agriculture, and exploration, enabling robots to perform complex tasks, enhance productivity, and assist humans in various domains.

Natural language processing

NLP is a rapidly advancing area of AI, focusing on enabling machines to understand, interpret, and generate human language. Language models like OpenAI’s GPT-3 have shown impressive capabilities in tasks such as text generation, translation, question-answering, and sentiment analysis.

NLP is being applied across various domains, including customer support, virtual assistants, and content generation.

As we reach the end of this captivating timeline of artificial intelligence, we are left in awe of the incredible journey it has undertaken. From its humble beginnings to the present, AI has evolved, transformed, and challenged our perceptions.

Along the timeline of artificial intelligence, we witnessed the dreams of visionaries who dared to imagine machines that could think like humans. We marveled at the pioneering work of brilliant minds who laid the foundation for this extraordinary field. We experienced the ups and downs, the setbacks and triumphs that shaped AI’s trajectory.

Today, AI stands as a testament to human ingenuity and curiosity. It has become an integral part of our lives, revolutionizing industries, powering innovations, and opening doors to endless possibilities.

But the timeline of artificial intelligence doesn’t end here. It carries a sense of anticipation as if whispering to us about the wonders that await in the future. As we step forward into uncharted territory, we are entering a voyage of discovery, where AI’s potential knows no bounds.

Featured image: Photo by noor Younis on Unsplash.

- Source: https://dataconomy.com/2023/06/15/timeline-of-artificial-intelligence/
