Data engineers play a crucial role in managing and processing big data. They design, build, and maintain the infrastructure and tools that organizations need to handle large volumes of data effectively. This involves working closely with data analysts and data scientists to ensure that data is stored, processed, and analyzed efficiently, so that it yields insights that inform decision-making.

What is data engineering?

Data engineering is a field of study that involves designing, building, and maintaining systems for the collection, storage, processing, and analysis of large volumes of data. In simpler terms, it involves the creation of data infrastructure and architecture that enable organizations to make data-driven decisions.

Data engineering has become increasingly important in recent years due to the explosion of data generated by businesses, governments, and individuals. With the rise of big data, data engineering has become critical for organizations looking to make sense of the vast amounts of information at their disposal.

In the following sections, we will delve into the importance of data engineering, define what a data engineer is, and discuss the need for data engineers in today’s data-driven world.

Job description of data engineers

Data engineers play a critical role in the creation and maintenance of data infrastructure and architecture. They are responsible for designing, developing, and maintaining data systems that enable organizations to efficiently collect, store, process, and analyze large volumes of data. Let’s take a closer look at the job description of data engineers:

Designing, developing, and maintaining data systems

Data engineers are responsible for designing and building data systems that meet the needs of their organization. This involves working closely with stakeholders to understand their requirements and developing solutions that can scale as the organization’s data needs grow.

Collecting, storing, and processing large datasets

Data engineers are also responsible for collecting, storing, and processing large volumes of data. This involves working with various data storage technologies, such as databases and data warehouses, and ensuring that the data is easily accessible and can be analyzed efficiently.

Implementing data security measures

Data security is a critical aspect of data engineering. Data engineers are responsible for implementing security measures that protect sensitive data from unauthorized access, theft, or loss. They must also ensure that data privacy regulations, such as GDPR and CCPA, are followed.
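
One common way to limit exposure of sensitive fields is pseudonymization: replacing identifiers such as email addresses with keyed hashes before the data reaches analysts. The Python sketch below uses HMAC-SHA256 from the standard library. The key, field names, and helper functions are hypothetical, and a real deployment would load the key from a secrets manager. Note that under GDPR, pseudonymized data still counts as personal data, so this reduces risk rather than removing compliance obligations.

```python
import hashlib
import hmac

# Hypothetical secret key for illustration only; in practice it would come
# from a secrets manager, never from source code.
PSEUDONYM_KEY = b"replace-with-a-secret-key"


def pseudonymize(value: str, key: bytes = PSEUDONYM_KEY) -> str:
    """Return a stable, keyed SHA-256 token for a sensitive value.

    The same input always maps to the same token, so records can still be
    joined, but the original value cannot be read back from the token
    without brute-forcing it against the secret key.
    """
    normalized = value.strip().lower().encode("utf-8")
    return hmac.new(key, normalized, hashlib.sha256).hexdigest()


def mask_record(record: dict, sensitive_fields: set[str]) -> dict:
    """Copy a record, replacing the listed fields with pseudonymous tokens."""
    return {
        field: pseudonymize(str(value)) if field in sensitive_fields else value
        for field, value in record.items()
    }


if __name__ == "__main__":
    row = {"email": "Ada@Example.com", "country": "UK", "orders": 3}
    print(mask_record(row, {"email"}))
```

Because the same input always yields the same token, pseudonymized tables can still be joined on the masked column. Normalizing the value first (trimming and lowercasing) is a design choice that makes `Ada@Example.com` and `ada@example.com` map to one token.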

Ensuring data quality and integrity

Data quality and integrity are essential for accurate data analysis. Data engineers are responsible for ensuring that the data collected is accurate, consistent, and reliable. This involves creating data validation rules, monitoring data quality, and implementing processes to correct any errors that are identified.
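
Validation rules like those described above can be expressed as small, named predicates that each record must pass. The following is a minimal sketch, not any specific tool's API: the `Rule` class, the example rules for a hypothetical orders feed, and the `validate` helper are all illustrative assumptions.

```python
from dataclasses import dataclass
from typing import Any, Callable


@dataclass
class Rule:
    """A named validation rule: a predicate every valid record must satisfy."""
    name: str
    check: Callable[[dict], bool]


def _positive_int(value: Any) -> bool:
    # bool is a subclass of int in Python, so exclude it explicitly.
    return isinstance(value, int) and not isinstance(value, bool) and value > 0


# Hypothetical rules for an orders feed; real rules come from stakeholders.
RULES = [
    Rule("order_id is present", lambda r: bool(r.get("order_id"))),
    Rule("quantity is a positive integer", lambda r: _positive_int(r.get("quantity"))),
    Rule("currency is a known code", lambda r: r.get("currency") in {"USD", "EUR", "GBP"}),
]


def validate(records: list[dict]) -> tuple[list[dict], list[tuple[dict, list[str]]]]:
    """Split records into valid ones and (record, failed_rule_names) pairs."""
    valid, rejected = [], []
    for record in records:
        failures = [rule.name for rule in RULES if not rule.check(record)]
        if failures:
            rejected.append((record, failures))
        else:
            valid.append(record)
    return valid, rejected
```

Keeping rejected records together with the names of the rules they failed, rather than silently dropping them, makes it possible to monitor data quality over time and to correct errors at the source.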

Creating data pipelines and workflows

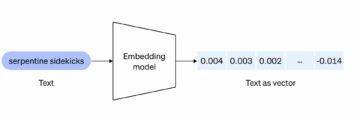

Data engineers create data pipelines and workflows that enable data to be collected, processed, and analyzed efficiently. This involves designing ETL (Extract, Transform, Load) or ELT (Extract, Load, Transform) processes, and selecting the tools that run them, to move data from its source to its destination. By creating efficient data pipelines and workflows, data engineers enable organizations to make data-driven decisions quickly and accurately.
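
The three ETL stages can be sketched as three small functions. This example assumes a CSV export as the source and an in-memory SQLite database as the destination, both stand-ins for real systems; the table and column names are hypothetical.

```python
import csv
import io
import sqlite3

# Hypothetical source data standing in for an exported CSV file.
RAW_CSV = """order_id,amount,currency
A1,19.99,usd
A2,5.00,EUR
A3,,usd
"""


def extract(text: str) -> list[dict[str, str]]:
    """Extract: read raw rows from the source."""
    return list(csv.DictReader(io.StringIO(text)))


def transform(rows: list[dict[str, str]]) -> list[tuple[str, float, str]]:
    """Transform: drop rows without an amount, cast types, normalize currency."""
    cleaned = []
    for row in rows:
        if not row["amount"]:
            continue  # incomplete record; a real pipeline might quarantine it
        cleaned.append((row["order_id"], float(row["amount"]), row["currency"].upper()))
    return cleaned


def load(rows: list[tuple[str, float, str]], conn: sqlite3.Connection) -> None:
    """Load: write the transformed rows into the destination table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS orders "
        "(order_id TEXT PRIMARY KEY, amount REAL, currency TEXT)"
    )
    # INSERT OR REPLACE keeps reruns idempotent for the same order_id.
    conn.executemany("INSERT OR REPLACE INTO orders VALUES (?, ?, ?)", rows)
    conn.commit()


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    load(transform(extract(RAW_CSV)), conn)
    print(conn.execute("SELECT * FROM orders").fetchall())
```

Loading the raw rows first and running the transform step as SQL inside the destination would turn the same pipeline into ELT.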

Challenges faced by data engineers in managing and processing big data

As data continues to grow at an exponential rate, it has become increasingly challenging for organizations to manage and process big data. This is where data engineers come in, as they play a critical role in the development, deployment, and maintenance of data infrastructure. However, data engineering is not without its challenges. In this section, we will discuss the top challenges faced by data engineers in managing and processing big data.

Data engineers are responsible for designing and building the systems that make it possible to store, process, and analyze large amounts of data. These systems include data pipelines, data warehouses, and data lakes, among others. However, building and maintaining these systems is not an easy task. Here are some of the challenges that data engineers face in managing and processing big data:

- Data volume: With the explosion of data in recent years, data engineers are tasked with managing massive volumes of data. This requires robust systems that can scale horizontally and vertically to accommodate the growing data volume.

- Data variety: Big data is often diverse in nature and comes in various formats such as structured, semi-structured, and unstructured data. Data engineers must ensure that the systems they build can handle all types of data and make it available for analysis.

- Data velocity: The speed at which data is generated, processed, and analyzed is another challenge that data engineers face. They must ensure that their systems can ingest and process data in real-time or near-real-time to keep up with the pace of business.

- Data quality: Data quality is crucial to ensure the accuracy and reliability of insights generated from big data. Data engineers must ensure that the data they process is of high quality and conforms to the standards set by the organization.

- Data security: Data breaches and cyberattacks are a significant concern for organizations that deal with big data. Data engineers must ensure that the data they manage is secure and protected from unauthorized access.

Volume: Dealing with large amounts of data

One of the most significant challenges that data engineers face in managing and processing big data is dealing with large volumes of data. With the growing amount of data being generated, organizations are struggling to keep up with the storage and processing requirements. Here are some ways in which data engineers can tackle this challenge:

Impact on infrastructure and resources

Large volumes of data put a strain on the infrastructure and resources of an organization. Storing and processing such vast amounts of data requires significant investments in hardware, software, and other resources. It also requires a robust and scalable infrastructure that can handle the growing data volume.

Solutions for managing and processing large volumes of data

Data engineers can use various solutions to manage and process large volumes of data. Some of these solutions include:

- Distributed computing: Distributed computing systems, such as Hadoop and Spark, can help distribute the processing of data across multiple nodes in a cluster. This approach allows for faster and more efficient processing of large volumes of data.

- Cloud computing: Cloud computing provides a scalable and cost-effective solution for managing and processing large volumes of data. Cloud providers offer various services such as storage, compute, and analytics, which can be used to build and operate big data systems.

- Data compression and archiving: Data engineers can use compression to reduce the storage space that large volumes of data require, and archiving to move rarely accessed data to cheaper storage tiers. Both reduce storage costs, and compression can also speed up processing because less data has to be read from disk or sent over the network.
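
The divide-and-combine idea behind distributed computing can be illustrated with a single-process word count. This sketch is not Hadoop or Spark code: threads stand in for cluster nodes, and the `partition`, `map_partition`, and `reduce_counts` helpers are hypothetical names chosen to mirror the map and reduce phases.

```python
from collections import Counter
from concurrent.futures import ThreadPoolExecutor


def partition(records: list[str], n_partitions: int) -> list[list[str]]:
    """Split the dataset into roughly equal chunks, one per simulated node."""
    return [records[i::n_partitions] for i in range(n_partitions)]


def map_partition(lines: list[str]) -> Counter:
    """Map phase: each node counts the words in its own chunk independently."""
    counts: Counter = Counter()
    for line in lines:
        counts.update(line.lower().split())
    return counts


def reduce_counts(partials: list[Counter]) -> Counter:
    """Reduce phase: merge the per-node partial counts into one result."""
    total: Counter = Counter()
    for partial in partials:
        total.update(partial)
    return total


def word_count(lines: list[str], n_partitions: int = 4) -> Counter:
    chunks = partition(lines, n_partitions)
    # Threads stand in for cluster nodes; on a real cluster each map task
    # would run on a separate machine, ideally next to its chunk of data.
    with ThreadPoolExecutor(max_workers=n_partitions) as pool:
        partials = list(pool.map(map_partition, chunks))
    return reduce_counts(partials)


if __name__ == "__main__":
    logs = ["big data big insights", "data pipelines", "Big clusters"]
    print(word_count(logs, n_partitions=2).most_common(2))
```

Because each map task only needs its own chunk, adding nodes lets the map phase handle more data in parallel. Frameworks such as Hadoop MapReduce and Spark add fault tolerance, data locality, and a shuffle step on top of this basic pattern.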

Velocity: Managing high-speed data streams

Another challenge that data engineers face in managing and processing big data is managing high-speed data streams. With the increasing amount of data being generated in real-time, organizations need to process and analyze data as soon as it is available. Here are some ways in which data engineers can manage high-speed data streams:

Impact on infrastructure and resources

High-speed data streams require a robust and scalable infrastructure that can handle the incoming data. This infrastructure must be capable of handling the processing of data in real-time or near-real-time, which can put a strain on the resources of an organization.

Solutions for managing and processing high velocity data

Data engineers can use various solutions to manage and process high-speed data streams. Some of these solutions include:

- Stream processing: Event streaming platforms such as Apache Kafka, together with stream processing engines such as Apache Flink, can ingest and process high-speed data streams in real time. These systems process data as soon as it is generated, enabling organizations to respond quickly to changing business conditions.

- In-memory computing: In-memory computing systems, such as Apache Ignite and SAP HANA, can help process high-speed data streams by storing data in memory instead of on disk. This approach allows for faster access to data, enabling real-time processing of high-velocity data.

- Edge computing: Edge computing allows for the processing of data at the edge of the network, closer to the source of the data. This approach reduces the latency associated with transmitting data to a central location for processing, enabling faster processing of high-speed data streams.
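
Stream processors typically compute results over time windows rather than over a complete dataset. The sketch below shows a tumbling (fixed, non-overlapping) window count in plain Python. It illustrates the concept only, it is not the Kafka or Flink API, and it assumes events arrive in timestamp order.

```python
from collections import defaultdict
from typing import Iterable, Iterator


def tumbling_window_counts(
    events: Iterable[tuple[int, str]], window_seconds: int
) -> Iterator[tuple[int, dict[str, int]]]:
    """Count events per key in fixed, non-overlapping time windows.

    `events` is a stream of (timestamp_seconds, key) pairs, assumed to arrive
    in timestamp order. A window's result is emitted as soon as an event from
    a later window arrives, so only one window's counts are held in memory.
    """
    current_start = None
    counts: dict[str, int] = defaultdict(int)
    for ts, key in events:
        window_start = ts - ts % window_seconds
        if current_start is None:
            current_start = window_start
        elif window_start != current_start:
            yield current_start, dict(counts)  # close the finished window
            current_start, counts = window_start, defaultdict(int)
        counts[key] += 1
    if current_start is not None:
        yield current_start, dict(counts)  # flush the last, possibly partial window


if __name__ == "__main__":
    clicks = [(0, "click"), (3, "view"), (4, "click"), (10, "click"), (25, "click")]
    for start, window in tumbling_window_counts(clicks, window_seconds=10):
        print(start, window)
```

Real stream processors also have to handle late and out-of-order events; Flink, for example, uses watermarks to decide when a window can be closed.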

Variety: Processing different types of data

Another significant challenge that data engineers face in managing and processing big data is dealing with different types of data. Today, data comes in many formats and structures: structured (relational tables), semi-structured (JSON or XML documents), and unstructured (free text, images, audio). Here are some ways in which data engineers can tackle this challenge:

Impact on infrastructure and resources

Processing different types of data requires a robust infrastructure and resources capable of handling the varied data formats and structures. It also requires specialized tools and technologies for processing and analyzing the data, which can put a strain on the resources of an organization.

Solutions for managing and processing different types of data

Data engineers can use various solutions to manage and process different types of data. Some of these solutions include:

- Data integration: Data integration is the process of combining data from various sources into a single, unified view. It helps in managing and processing different types of data by providing a standardized view of the data, making it easier to analyze and process.

- Data warehousing: Data warehousing involves storing and managing data from various sources in a central repository. It provides a structured and organized view of the data, making it easier to manage and process different types of data.

- Data virtualization: Data virtualization allows for the integration of data from various sources without physically moving the data. It provides a unified view of the data, making it easier to manage and process different types of data.
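
Data integration often comes down to mapping each source onto one shared schema. In this sketch, a CSV export and a JSON payload, both hypothetical, describe customers with different field names and date formats. Each source gets its own adapter function, and the adapters emit records with identical fields.

```python
import csv
import io
import json
from datetime import datetime

# Hypothetical sources describing the same kind of entity differently:
# a CRM export (CSV) and a web application's API payload (JSON).
CRM_CSV = "CustomerID,FullName,SignupDate\n17,Ada Lovelace,2023-01-05\n"
WEB_JSON = '[{"id": "w-9", "name": {"first": "Alan", "last": "Turing"}, "created": "05/02/2023"}]'


def from_crm(text: str):
    """Adapter for the CRM export: rename fields; dates are already ISO 8601."""
    for row in csv.DictReader(io.StringIO(text)):
        yield {
            "source": "crm",
            "customer_id": f"crm-{row['CustomerID']}",
            "name": row["FullName"],
            "signup_date": row["SignupDate"],
        }


def from_web(text: str):
    """Adapter for the web payload: flatten the name, convert DD/MM/YYYY dates."""
    for item in json.loads(text):
        created = datetime.strptime(item["created"], "%d/%m/%Y").date()
        yield {
            "source": "web",
            "customer_id": item["id"],
            "name": f"{item['name']['first']} {item['name']['last']}",
            "signup_date": created.isoformat(),
        }


def unified_customers() -> list[dict]:
    """Single, unified view: every source mapped onto one common schema."""
    return [*from_crm(CRM_CSV), *from_web(WEB_JSON)]
```

Keeping one adapter per source means a new source can be added without changing downstream consumers, which only ever see the unified schema.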

Veracity: Ensuring data accuracy and consistency

Another significant challenge that data engineers face in managing and processing big data is ensuring data accuracy and consistency. With the increasing amount of data being generated, it is essential to ensure that the data is accurate and consistent to make informed decisions. Here are some ways in which data engineers can ensure data accuracy and consistency:

Impact on infrastructure and resources

Ensuring data accuracy and consistency requires a robust infrastructure and resources capable of handling the data quality checks and validations. It also requires specialized tools and technologies for detecting and correcting errors in the data, which can put a strain on the resources of an organization.

Solutions for managing and processing accurate and consistent data

Data engineers can use various solutions to manage and process accurate and consistent data. Some of these solutions include:

- Data quality management: Data quality management involves ensuring that the data is accurate, consistent, and complete. It includes various processes such as data profiling, data cleansing, and data validation.

- Master data management: Master data management involves creating a single, unified view of master data, such as customer data, product data, and supplier data. It helps in ensuring data accuracy and consistency by providing a standardized view of the data.

- Data governance: Data governance involves establishing policies, procedures, and controls for managing and processing data. It helps in ensuring data accuracy and consistency by providing a framework for managing the data lifecycle and ensuring compliance with regulations and standards.
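
Master data management typically involves matching records that refer to the same real-world entity and merging them into a single "golden record". The sketch below assumes a hypothetical match rule (same normalized email address) and a simple survivorship rule (for each field, keep the newest non-empty value); production MDM tools use far richer matching, such as fuzzy name comparison.

```python
from collections import defaultdict


def match_key(record: dict) -> str:
    """Hypothetical match rule: the same normalized email means the same customer."""
    return record["email"].strip().lower()


def golden_records(records: list[dict], fields=("name", "phone", "city")) -> list[dict]:
    """Merge duplicate customer records into one master record per customer.

    Survivorship rule (an assumption for this sketch): for each field, keep the
    value from the most recently updated record in which it is non-empty.
    """
    groups: dict[str, list[dict]] = defaultdict(list)
    for record in records:
        groups[match_key(record)].append(record)

    masters = []
    for key, group in groups.items():
        # ISO 8601 date strings sort chronologically, so newest comes first here.
        newest_first = sorted(group, key=lambda r: r["updated_at"], reverse=True)
        master = {"email": key}
        for field in fields:
            master[field] = next((r[field] for r in newest_first if r.get(field)), None)
        masters.append(master)
    return masters
```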

Security: Protecting sensitive data

One of the most critical challenges faced by data engineers in managing and processing big data is ensuring the security of sensitive data. As the amount of data being generated continues to increase, it is essential to protect it from security breaches that can compromise both the data's integrity and the organization's reputation. Here are some ways in which data engineers can tackle this challenge:

Impact of security breaches on data integrity and reputation

Security breaches can have a significant impact on an organization’s data integrity and reputation. They can lead to the loss of sensitive data, damage the organization’s reputation, and result in legal and financial consequences.

Solutions for managing and processing data securely

Data engineers can use various solutions to manage and process data securely. Some of these solutions include:

- Encryption: Encryption converts data into ciphertext that cannot practically be read without the proper decryption key. It protects sensitive data from unauthorized access, both at rest and in transit, and is an essential tool for managing and processing data securely.

- Access controls: Access controls restrict access to sensitive data based on user roles and permissions. They help ensure that only authorized personnel can access sensitive data.

- Auditing and monitoring: Auditing and monitoring involve tracking and recording access to sensitive data. They help detect security breaches and discourage misuse by providing a record of who accessed the data and when.
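
Role-based access control and audit logging can work together, so that every access check is also recorded. This minimal sketch uses a hypothetical in-code role-to-permission mapping and an in-memory list as the audit log; a real system would use an identity provider and an append-only, tamper-evident log store.

```python
from datetime import datetime, timezone

# Hypothetical role-to-permission mapping; real systems load this from an
# identity provider or policy store rather than hard-coding it.
ROLE_PERMISSIONS = {
    "analyst": {"read:sales"},
    "data_engineer": {"read:sales", "write:sales", "read:customers_pii"},
}

# In-memory stand-in for an append-only audit store.
audit_log: list[dict] = []


def access(user: str, role: str, permission: str) -> bool:
    """Check a permission against the user's role and record the attempt."""
    # Unknown roles map to an empty set, so access is denied by default.
    allowed = permission in ROLE_PERMISSIONS.get(role, set())
    audit_log.append({
        "time": datetime.now(timezone.utc).isoformat(),
        "user": user,
        "role": role,
        "permission": permission,
        "allowed": allowed,
    })
    return allowed
```

Denied attempts are logged as well as granted ones, since repeated denials can be an early sign of a breach attempt.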

In addition to these solutions, data engineers can also follow best practices for data security, such as regular security assessments, vulnerability scanning, and threat modeling.

Best practices for overcoming challenges in big data management and processing

To effectively manage and process big data, data engineers need to adopt certain best practices. These best practices can help overcome the challenges discussed in the previous section and ensure that data processing and management are efficient and effective.

Data engineers play a critical role in managing and processing big data. They are responsible for ensuring that data is available, secure, and accessible to the right people at the right time. To perform this role successfully, data engineers need to follow best practices that enable them to manage and process data efficiently.

Adopting a data-centric approach to big data management

Adopting a data-centric approach is a best practice that data engineers should follow to manage and process big data successfully. This approach involves putting data at the center of all processes and decisions, focusing on the data’s quality, security, and accessibility. Data engineers should also ensure that data is collected, stored, and managed in a way that makes it easy to analyze and derive insights.

Investing in scalable infrastructure and cloud-based solutions

Another best practice for managing and processing big data is investing in scalable infrastructure and cloud-based solutions. Scalable infrastructure allows data engineers to handle growing amounts of data without compromising performance or data integrity. Cloud-based solutions add flexibility, letting data engineers scale their infrastructure up or down as needed, often paying only for the resources they use.

In addition to these best practices, data engineers should also prioritize the following:

- Data Governance: Establishing data governance policies and procedures that ensure the data’s quality, security, and accessibility.

- Automation: Automating repetitive tasks and processes to free up time for more complex tasks.

- Collaboration: Encouraging collaboration between data engineers, data analysts, and data scientists to ensure that data is used effectively.

Leveraging automation and machine learning for data processing

Another best practice for managing and processing big data is leveraging automation and machine learning. Automation can help data engineers streamline repetitive tasks and processes, allowing them to focus on more complex tasks that require their expertise. Machine learning, on the other hand, can help data engineers analyze large volumes of data and derive insights that might not be immediately apparent through traditional analysis methods.

Implementing strong data governance and security measures

Implementing strong data governance and security measures is crucial to managing and processing big data. Data governance policies and procedures can ensure that data is accurate, consistent, and accessible to the right people at the right time. Security measures, such as encryption and access controls, can prevent unauthorized access or data breaches that could compromise data integrity or confidentiality.

Establishing a culture of continuous improvement and learning

Finally, data engineers should establish a culture of continuous improvement and learning. This involves regularly reviewing and refining data management and processing practices to ensure that they are effective and efficient. Data engineers should also stay up-to-date with the latest tools, technologies, and industry trends to ensure that they can effectively manage and process big data.

Beyond these practices, data engineers should also keep the following priorities in mind:

- Scalability: Investing in scalable infrastructure and cloud-based solutions to handle large volumes of data.

- Flexibility: Being adaptable and flexible to changing business needs and data requirements.

Conclusion

Managing and processing big data can be a daunting task for data engineers. The sheer volume, high velocity, wide variety, and uncertain veracity of data, together with the need to keep it secure, can make it difficult to derive insights that inform decision-making and drive business success. However, by adopting best practices, data engineers can overcome these challenges and ensure that data is managed and processed effectively.

In conclusion, data engineers face several challenges when managing and processing big data, and these challenges can affect data integrity, accessibility, and security, ultimately hindering data-driven decision-making. Data engineers and organizations should therefore commit to best practices: adopting a data-centric approach, investing in scalable infrastructure and cloud-based solutions, leveraging automation and machine learning, implementing strong data governance and security measures, establishing a culture of continuous improvement and learning, and prioritizing collaboration, scalability, and flexibility.

By addressing these challenges and following these best practices, data engineers can effectively manage and process big data, providing organizations with the insights they need to make informed decisions and drive business success. If you want to learn more about data engineers, check out the article “Data is the new gold and the industry demands goldsmiths.”

- Source: https://dataconomy.com/2023/02/how-data-engineers-tame-big-data/
