Say you are a vertical manager at a logistics company. Knowing the value of proactive anomaly detection, you implement a real-time IoT system that generates streaming data, not just occasional batch reports. Now you’ll be able to get aggregated analytics data in real time.

But can you really trust the data?

If some of your data looks odd, it’s possible that something went wrong in your IoT data pipeline. Often, these errors are the result of out-of-order data, one of the most vexing IoT data issues in today’s streaming systems.

Business insight can only tell an accurate story when it relies on quality data that you can trust. The meaning depends not just on a series of events, but on the order in which they occur. Get the order wrong, and the story changes—and false reports won’t help you optimize asset utilization or discover the source of anomalies. That’s what makes out-of-order data such a problem as IoT data feeds your real-time systems.

So why does streaming IoT data tend to show up out of order? More importantly, how do you build a system that offers better IoT data quality? Keep reading to find out.

The Causes of Out-of-Order Data in IoT Platforms

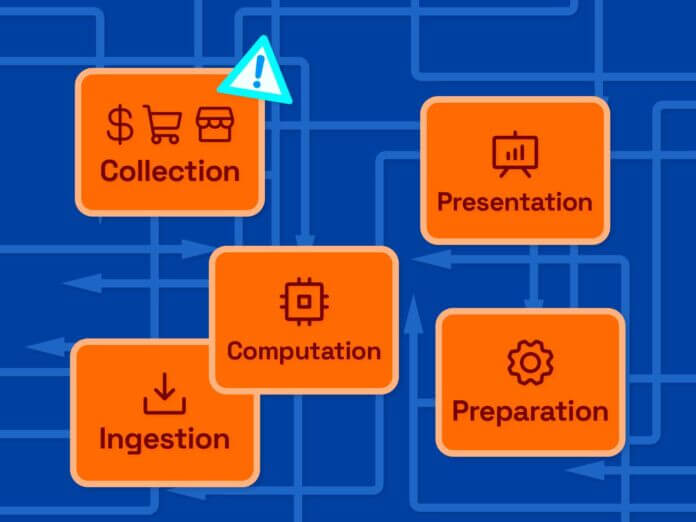

In an IoT system, data originates with devices. It travels over some form of connectivity. Finally, it arrives at a centralized destination, like a data warehouse that feeds into applications or IoT data analytics platforms.

The most common cause of out-of-order data relates to the first two links of this IoT chain. The IoT device may send data out of order because it’s operating in battery-save mode, or due to poor-quality design. The device may also lose connectivity for a period of time.

The device might travel outside of a cellular network’s coverage area (think “high seas” or “military areas jamming all signals”), or it might simply crash and then reboot. Either way, it’s programmed to send its stored data once it re-establishes a connection and is told to transmit. That might not be anywhere near the time that it recorded a measurement or GPS position. You end up with an event that reaches your system hours or more after it actually occurred, often behind newer readings from the same device.
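
To make the failure mode concrete, here is a minimal Python sketch that scans a stream in arrival order and picks out readings that showed up after a newer reading from the same device. The `Reading` record, the `find_late_arrivals` helper, and the `truck-7` sample data are all hypothetical names invented for this illustration; a real pipeline would take these fields from its own message schema.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass(frozen=True)
class Reading:
    device_id: str
    event_time: datetime    # when the device took the measurement
    arrival_time: datetime  # when the pipeline received it
    value: float


def find_late_arrivals(readings):
    """Return readings that arrived after a newer reading from the same device.

    `readings` is assumed to be listed in arrival order.
    """
    newest_seen = {}  # device_id -> latest event_time observed so far
    late = []
    for r in readings:
        newest = newest_seen.get(r.device_id)
        if newest is not None and r.event_time < newest:
            late.append(r)
        else:
            newest_seen[r.device_id] = r.event_time
    return late


t0 = datetime(2024, 1, 1, 12, 0)
stream = [
    Reading("truck-7", t0, t0 + timedelta(seconds=2), 4.1),
    # The live 12:10 reading gets through right after the device reconnects.
    Reading("truck-7", t0 + timedelta(minutes=10),
            t0 + timedelta(minutes=10, seconds=2), 4.3),
    # The stored 12:05 reading is only flushed three hours later.
    Reading("truck-7", t0 + timedelta(minutes=5), t0 + timedelta(hours=3), 4.2),
]

late = find_late_arrivals(stream)
for r in late:
    print(r.device_id, r.event_time, "arrived late by", r.arrival_time - r.event_time)
```

The check only works because each reading carries the time of measurement separately from the time of arrival. If the device or gateway stamps events only on receipt, this kind of detection is not possible downstream.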

But connectivity lapses aren’t the only cause of out-of-order (and otherwise noisy) data. Many devices are programmed to extrapolate when they fail to capture real-world readings. When you’re looking at a database, there’s no indication of which entries reflect actual measurements and which are just the device’s best guess. This is an unfortunately common problem. To comply with service level agreements, device manufacturers may program their products to send data according to a set schedule—whether there’s an accurate sensor reading or not.

The bad news is that you can’t prevent these data-flow interruptions, at least not in today’s IoT landscape. But there’s good news, too. There are methods of processing streaming data that limit the impact of out-of-order data. That brings us to the solution for this persistent data-handling challenge.

Fixing Data Errors Caused by Out-of-Order Logging

You can’t build a real-time IoT system without a real-time data processing engine—and not all of these engines offer the same suite of services. As you compare data processing frameworks for your streaming IoT pipeline, look for three features that keep out-of-order data from polluting your logs:

- Bitemporal modeling. This is a fancy term for the ability to track an IoT device’s event readings along two timelines at once. The system applies one timestamp at the moment of the measurement. It applies a second the instant the data gets recorded in your database. That gives you (or your analytics applications) the ability to spot lapses between a device recording a measurement and that data reaching your database.

- Support for data backfilling. Your data processing engine should support later corrections to data entries in a mutable database (i.e., one that allows existing data fields to be overwritten). To produce the most accurate readings, your data processing framework should also accept multiple sources, including streams and static data.

- Smart data processing logic. The most advanced data processing engine doesn’t just create a pipeline; it also layers machine learning capabilities onto streaming data. That allows the streaming system to simultaneously debug and process data as it moves from the device to your warehouse.
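
The first two features can be sketched in a few lines of Python. This is a toy in-memory model, not any particular engine's API: the `BitemporalStore` class and its method names are hypothetical. Each entry is keyed by device and event time, and every write also carries the time it was recorded. As an assumption of this sketch, a correction is appended as a new version rather than overwriting the old one; a mutable database could overwrite in place instead.

```python
from datetime import datetime, timedelta


class BitemporalStore:
    """Toy store tracking event time and recording time for each reading."""

    def __init__(self):
        # (device_id, event_time) -> list of (recorded_at, value), oldest first
        self.versions = {}

    def write(self, device_id, event_time, value, recorded_at):
        """Store a reading. Returns True if it corrects an existing entry."""
        history = self.versions.setdefault((device_id, event_time), [])
        history.append((recorded_at, value))
        return len(history) > 1

    def current(self, device_id, event_time):
        """Return the most recently recorded value for this event."""
        return self.versions[(device_id, event_time)][-1][1]

    def first_arrival_lag(self, device_id, event_time):
        """Return the gap between the measurement and its first recording."""
        first_recorded_at = self.versions[(device_id, event_time)][0][0]
        return first_recorded_at - event_time


store = BitemporalStore()
t0 = datetime(2024, 1, 1, 12, 0)

# A reading that arrives almost immediately.
store.write("truck-7", t0, 4.1, recorded_at=t0 + timedelta(seconds=2))
# A reading measured at 12:05 that only reaches the database at 15:00.
store.write("truck-7", t0 + timedelta(minutes=5), 4.2,
            recorded_at=t0 + timedelta(hours=3))
# A later correction (backfill) to the 12:00 reading.
was_correction = store.write("truck-7", t0, 4.0,
                             recorded_at=t0 + timedelta(hours=4))

print(store.current("truck-7", t0))
print(store.first_arrival_lag("truck-7", t0 + timedelta(minutes=5)))
```

Keeping both timelines is what lets an analytics application ask two different questions: "what did the device measure at noon?" and "what did we know at noon?"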

With these three capabilities operating in tandem, you can build an IoT system that flags—or even corrects—out-of-order data before it can cause problems. All you have to do is choose the right tool for the job.
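
One common way stream processors correct ordering is a reorder buffer with an allowed-lateness window, often described in terms of a "watermark". The article does not name this technique, so treat the following Python sketch as one possible approach rather than the method any specific engine uses. The `ReorderBuffer` class is hypothetical, and event times are plain numbers (seconds) to keep the example short.

```python
import heapq
from itertools import count


class ReorderBuffer:
    """Hold events briefly so they can be released in event-time order."""

    def __init__(self, allowed_lateness):
        self.allowed_lateness = allowed_lateness
        self.max_event_time = None
        self.heap = []
        self._tiebreak = count()  # keeps heap entries comparable on equal times

    def push(self, event_time, payload):
        """Add one event and return (released, too_late).

        released: (event_time, payload) pairs now safe to emit, in order.
        too_late: True if the event fell behind the watermark and was rejected.
        """
        if self.max_event_time is not None:
            if event_time < self.max_event_time - self.allowed_lateness:
                return [], True
        if self.max_event_time is None or event_time > self.max_event_time:
            self.max_event_time = event_time
        heapq.heappush(self.heap, (event_time, next(self._tiebreak), payload))
        watermark = self.max_event_time - self.allowed_lateness
        released = []
        while self.heap and self.heap[0][0] <= watermark:
            t, _, p = heapq.heappop(self.heap)
            released.append((t, p))
        return released, False

    def flush(self):
        """Release everything still buffered, in event-time order."""
        released = []
        while self.heap:
            t, _, p = heapq.heappop(self.heap)
            released.append((t, p))
        return released


buf = ReorderBuffer(allowed_lateness=10)
emitted, flagged = [], []
# Events in arrival order; "c" and "e" arrive after newer events.
for event_time, name in [(0, "a"), (20, "b"), (15, "c"), (40, "d"), (5, "e")]:
    released, too_late = buf.push(event_time, name)
    emitted.extend(p for _, p in released)
    if too_late:
        flagged.append(name)
emitted.extend(p for _, p in buf.flush())

print(emitted)
print(flagged)
```

Here "c" arrives within the lateness window and is put back in its proper place, while "e" arrives too far behind and is only flagged. A flagged event is a natural candidate for the backfilling path described above. The window size is a trade-off: a longer window corrects more events but delays every result by that much.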

What kind of tool, you ask? Look for a unified real-time data processing engine with a rich ML library covering the unique needs of the type of data you are processing. That may sound like a big ask, but the real-time IoT framework you’re looking for is available now, at this very moment—the one time that’s never out of order.


- Source: https://www.iotforall.com/how-to-handle-out-of-order-data-in-your-iot-pipeline
