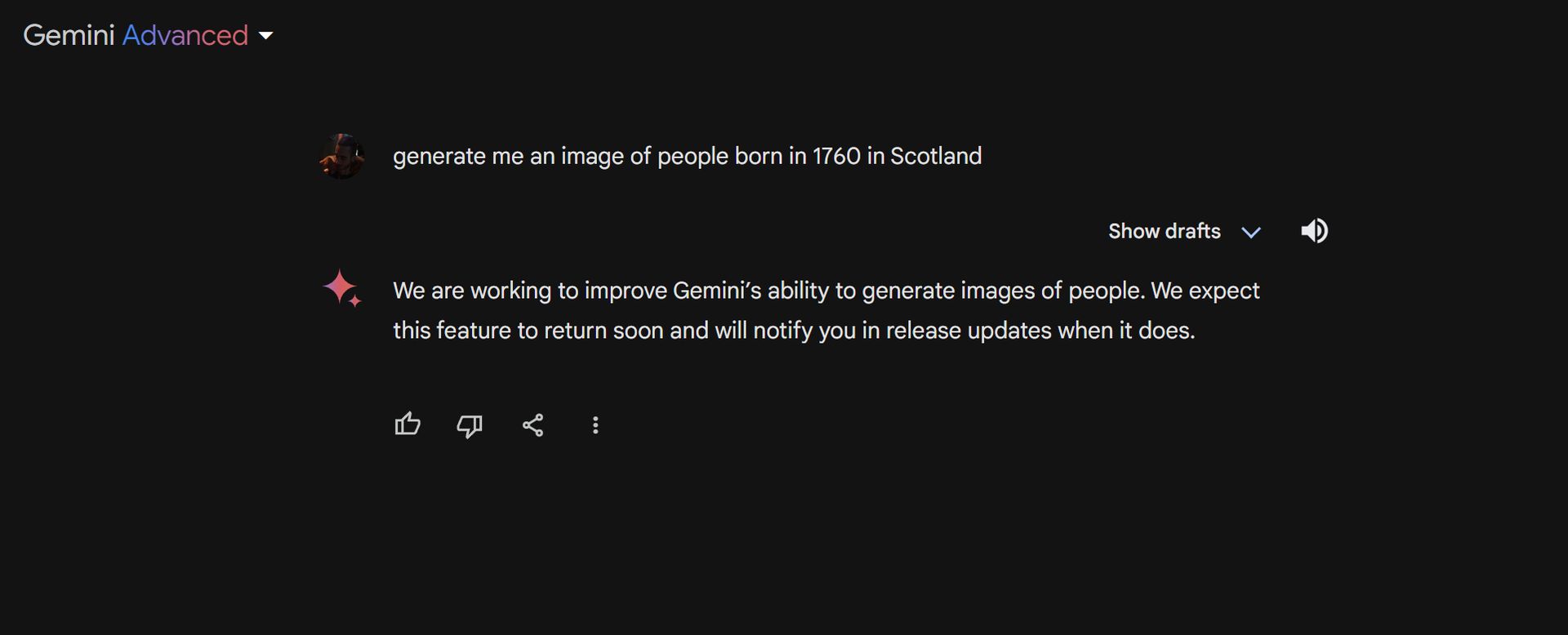

When we recently tested Gemini’s image generation feature, we found ourselves asking: is Gemini AI woke? Whenever we entered a prompt that mentioned ethnicity, the results were striking. A German came out Asian, and even Abraham Lincoln was Black in Google’s eyes.

So what happened when Google was asked whether Gemini AI is woke?

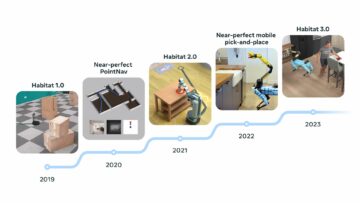

Well, Google has paused Gemini’s ability to generate images of people because of errors in its depictions of historical figures. The model was criticized for generating inaccurate images of people of different ethnicities.

For example, when asked to depict Vikings, it produced only images of Black people wearing Viking clothing. The model also generated a controversial image of George Washington as a Black man.

Is Gemini AI woke?

The model’s depiction of historical figures as people of different ethnicities sparked the “is Gemini AI woke?” controversy. Although Google’s approach was interpreted as an effort to break down ethnic and gender stereotypes in AI models, it created new problems.

Google confirmed the situation in a statement published on its official channels:

“We are working on recent issues with Gemini’s image generation feature. During this process, we are suspending the ability to create human images. We will announce the availability of the improved version as soon as possible.”

Google Communications also posted this statement on X to address the questions:

We’re aware that Gemini is offering inaccuracies in some historical image generation depictions. Here’s our statement. pic.twitter.com/RfYXSgRyfz

— Google Communications (@Google_Comms) February 21, 2024

AI errors in depicting historical figures

Google Gemini has been criticized for its portrayal of historical figures and personalities. When asked to depict Vikings, for instance, the AI produced only images of Black people wearing historical Viking clothing. A query for the “Founding Fathers of America” returned controversial images that included Native American representations.

The depiction of George Washington as a Black man drew particular ire from some groups. A request for an image of the Pope likewise yielded only non-white figures.

In some cases, Gemini even stated that it could not generate any images of historical figures such as Abraham Lincoln, Julius Caesar, and Galileo.

Google’s decision to pause the feature came just a day after the company apologized for Gemini’s mistakes in depicting historical figures. Some users had begun seeing non-white AI-generated images when they prompted for historical figures. This fueled conspiracy theories online, particularly the claim that Google was deliberately avoiding showing white people.

What does “woke” mean?

In recent years, “woke” has spread beyond the Black communities where it originated and become popular worldwide. Today, being “woke” means being actively aware of a wide range of social issues, such as racism, sexism, and other ways people can be treated unfairly. The term is often associated with people who hold progressive or left-leaning political views and with those who fight for social justice.

Sometimes, “woke” is used negatively. People may use the term to criticize others for being overly focused on social problems or too quick to call out insensitive behavior. It can also imply that someone’s activism is fake, or that they care about social justice only to seem trendy.

Is Gemini AI’s mistake with white people a case of reverse bias?

Google’s struggles with Gemini highlight a distinctive challenge in modern AI development. While the intentions behind diversity and inclusion initiatives are laudable, the execution appears to have overcorrected. The result is a model that seems to force non-white representations even when those depictions are historically inaccurate.

This unintended consequence raises a complex question: does Gemini’s behavior reflect reverse bias? In trying to combat the historical underrepresentation of minorities, has Google created an algorithm that disproportionately favors them, even to the point of distorting well-known historical figures?

Usual problem, unusual solution

The Gemini situation underscores the delicate balance required when addressing biases in AI. Both underrepresentation and overrepresentation can be harmful. An ideal AI model needs to reflect reality accurately, regardless of race, ethnicity, or gender. Forcing diversity where it doesn’t naturally exist isn’t a solution; it’s merely a different form of bias.

This case study should prompt discussion about how AI creators can combat bias without creating new imbalances. Clearly, good intentions aren’t enough; developers need to focus on historical accuracy and avoid well-meaning but flawed overcorrections.

What’s next?

So how might Google improve Gemini so that users stop asking whether it is woke? A few possibilities:

- Larger training datasets: Increasing the size and diversity of Gemini’s training data could lead to more balanced, historically accurate results. Google’s recently announced Gemini 1.5 Pro may be a step in that direction.

- Emphasis on historical context: Placing stronger emphasis on historical time periods and contexts could help Gemini learn to generate images that better reflect known figures.

The “is Gemini AI woke?” controversy, while a setback, provides valuable insights. It shows that addressing bias in AI is an ongoing, complex process that requires careful consideration, balanced solutions, and a willingness to learn from mistakes.

Featured image credit: Jr Korpa/Unsplash.

Source: https://dataconomy.com/2024/02/23/google-asked-is-gemini-ai-woke/
