Image by Author

LSTMs were initially introduced in the early 1990s by authors Sepp Hochreiter and Jurgen Schmidhuber. The original model was extremely compute-expensive and it was in the mid-2010s when RNNs and LSTMs gained attention. With more data and better GPUs available, LSTM networks became the standard method for language modeling and they became the backbone for the first large language model. That was the case until the release of Attention-Based Transformer Architecture in 2017. LSTMs were gradually outdone by the Transformer architecture which is now the standard for all recent Large Language Models including ChatGPT, Mistral, and Llama.

However, the recent release of the xLSTM paper by the original LSTM author Sepp Hochreiter has caused a major stir in the research community. The results show comparative pre-training results to the latest LLMs and it has raised a question if LSTMs can once again take over Natural Language Processing.

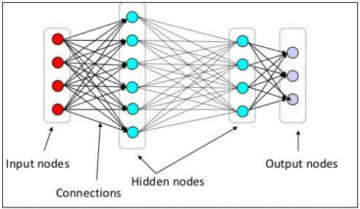

High-Level Architecture Overview

The original LSTM network had some major limitations that limited its usability for larger contexts and deeper models. Namely:

- LSTMs were sequential models that made it hard to parallelize training and inference.

- They had limited storage capabilities and all information had to be compressed into a single cell state.

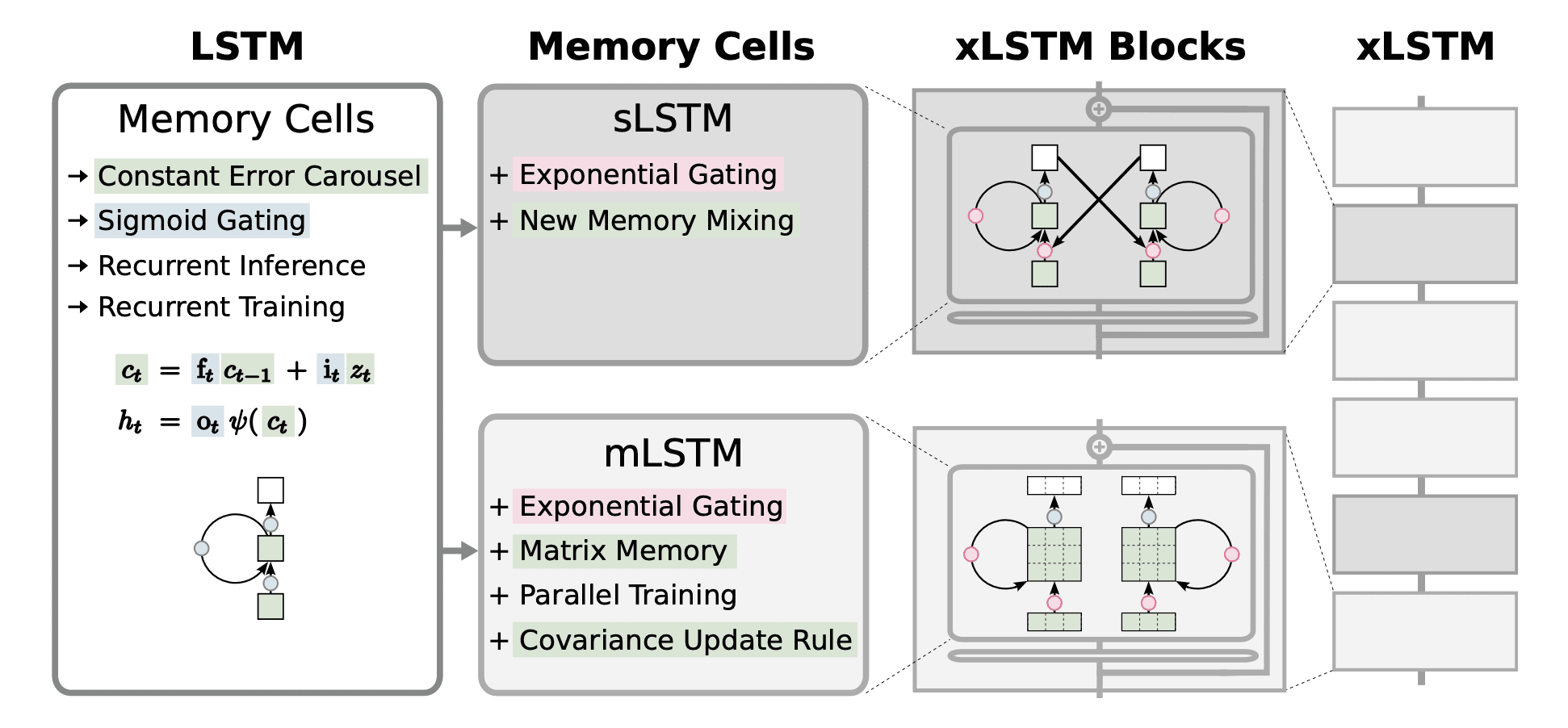

The recent xLSTM network introduces new sLSTM and mLSTM blocks to address both these shortcomings. Let us take a birds-eye view of the model architecture and see the approach used by the authors.

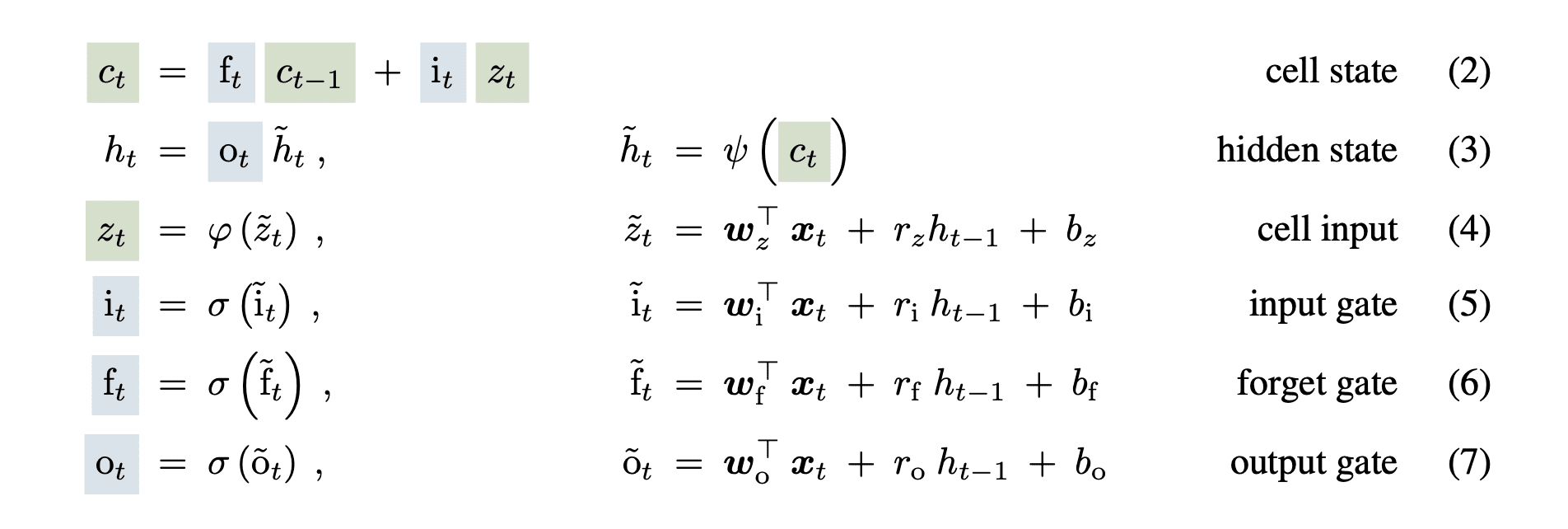

Short Review of Original LSTM

The LSTM network used a hidden state and cell state to counter the vanishing gradient problem in the vanilla RNN networks. They also added the forget, input and output sigmoid gates to control the flow of information. The equations are as follows:

Image from Paper

The cell state (ct) passed through the LSTM cell with minor linear transformations that helped preserve the gradient across large input sequences.

The xLSTM model modifies these equations in the new blocks to remedy the known limitations of the model.

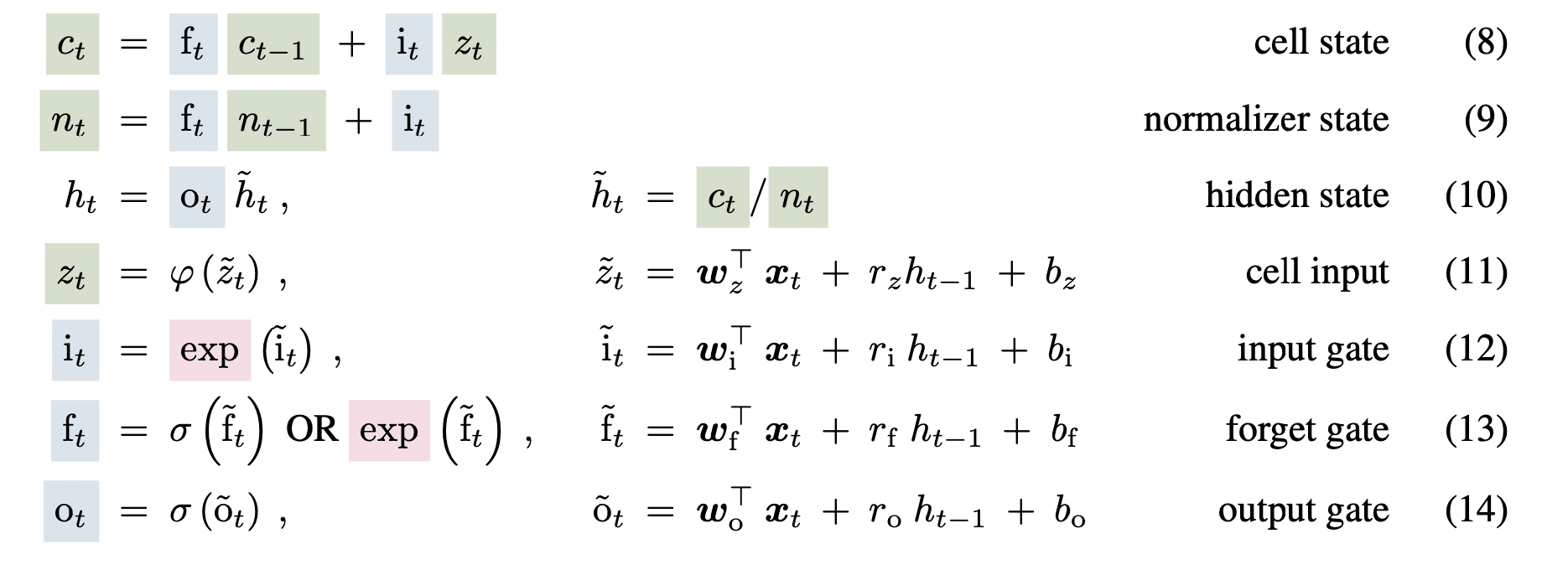

sLSTM Block

The block modifies the sigmoid gates and uses the exponential function for the input and forget gate. As quoted by the authors, this can improve the storage issues in LSTM and still allow multiple memory cells allowing memory mixing within each head but not across head. The modified sLSTM block equation is as follows:

Image from Paper

Moreover, as the exponential function can cause large values, the gate values are normalized and stabilized using log functions.

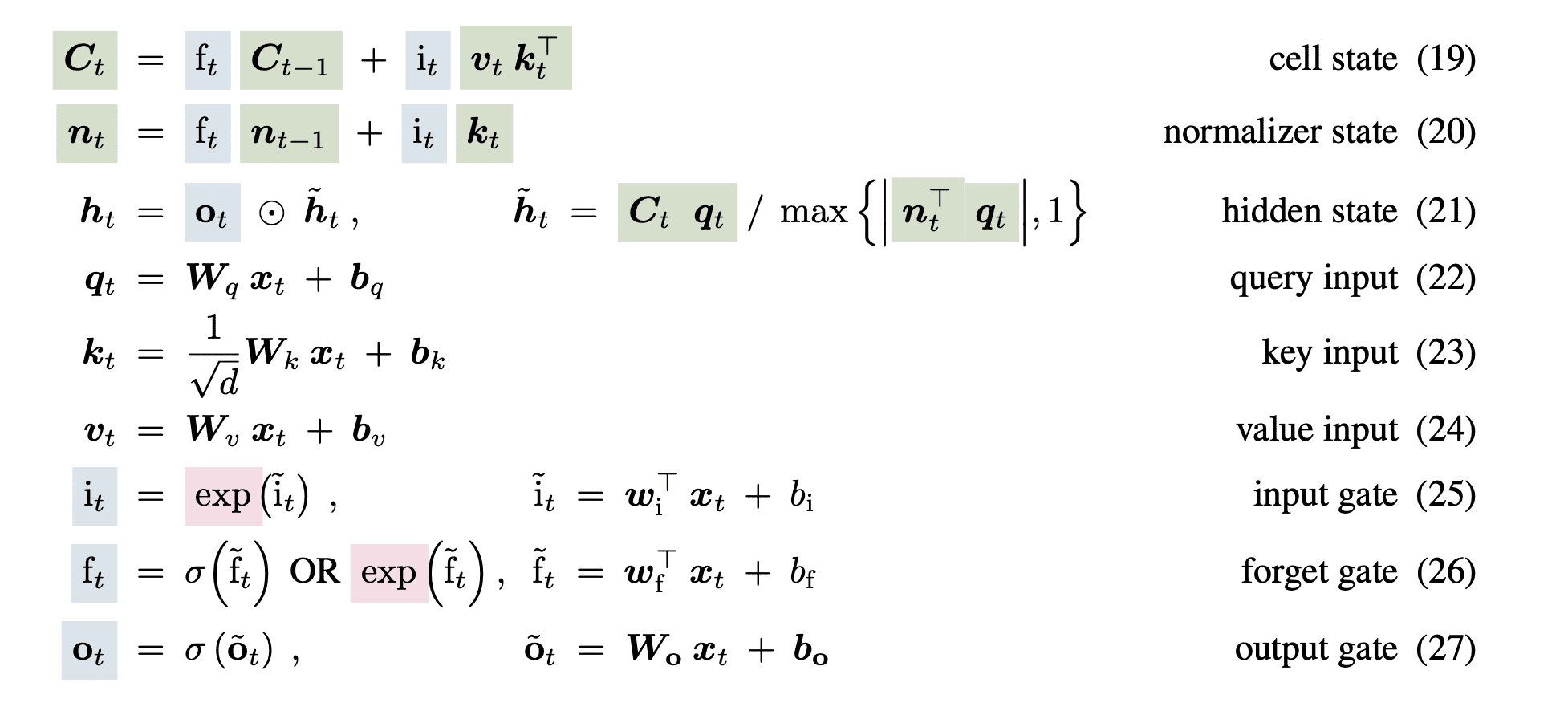

mLSTM Block

To counter the parallelizability and storage issues in the LSTM network, the xLSTM modifies the cell state from a 1-dimensional vector to a 2-dimensional square matrix. They store a decomposed version as key and value vectors and use the same exponential gating as the sLSTM block. The equations are as follows:

Image from Paper

Architecture Diagram

Image from Paper

The overall xLSTM architecture is a sequential combination of mLSTM and sLSTM blocks in different proportions. As the diagram shows, the xLSTM block can have any memory cell. The different blocks are stacked together with layer normalizations to form a deep network of residual blocks.

Evaluation Results and Comparison

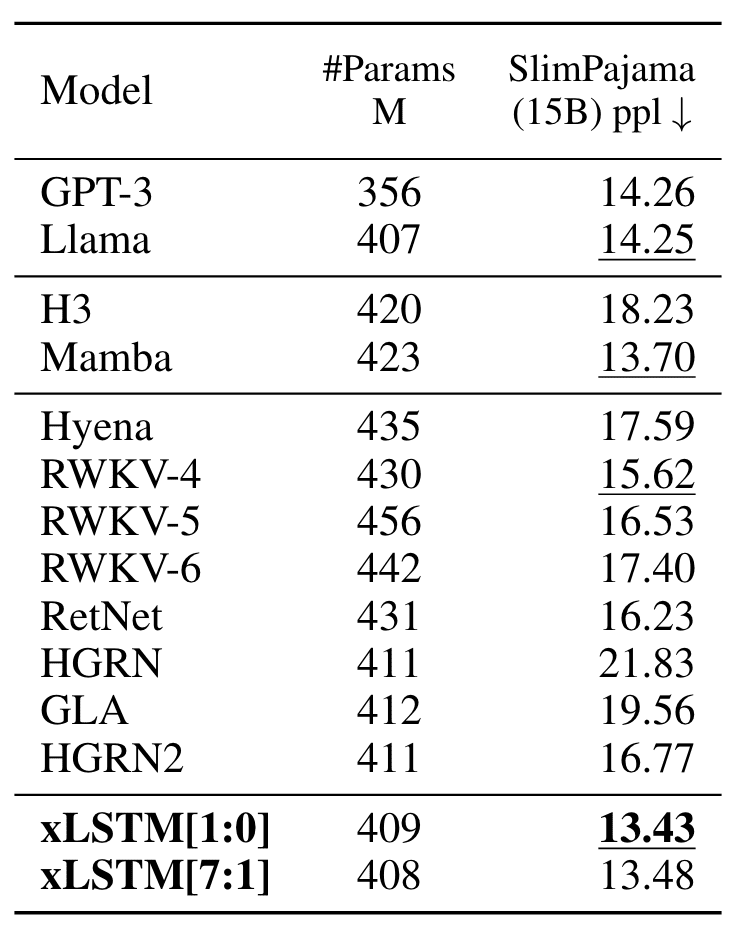

The authors train the xLSTM network on language model tasks and compare the perplexity (lower is better) of the trained model with the current Transformer-based LLMs.

The authors first train the models on 15B tokens from SlimPajama. The results showed that xLSTM outperform all other models in the validation set with the lowest perplexity score.

Image from Paper

Sequence Length Extrapolation

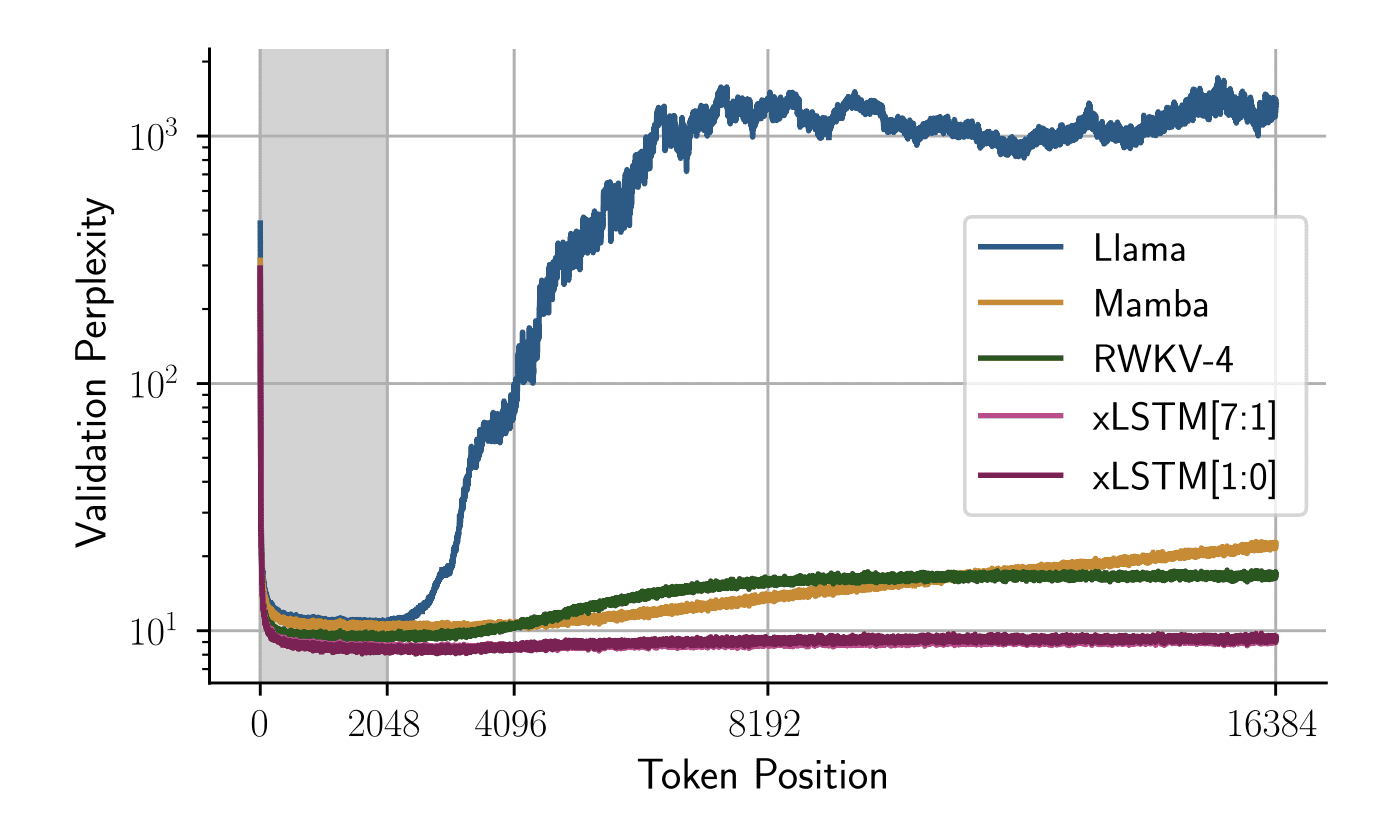

The authors also analyze performance when the test time sequence length exceeds the context length the model was trained on. They trained all models on a sequence length of 2048 and the below graph shows the validation perplexity with changes in token position:

Image from Paper

The graph shows that even for much longer sequences, xLSTM networks maintain a stable perplexity score and perform better than any other model for much longer context lengths.

Scaling xLSMT to Larger Model Sizes

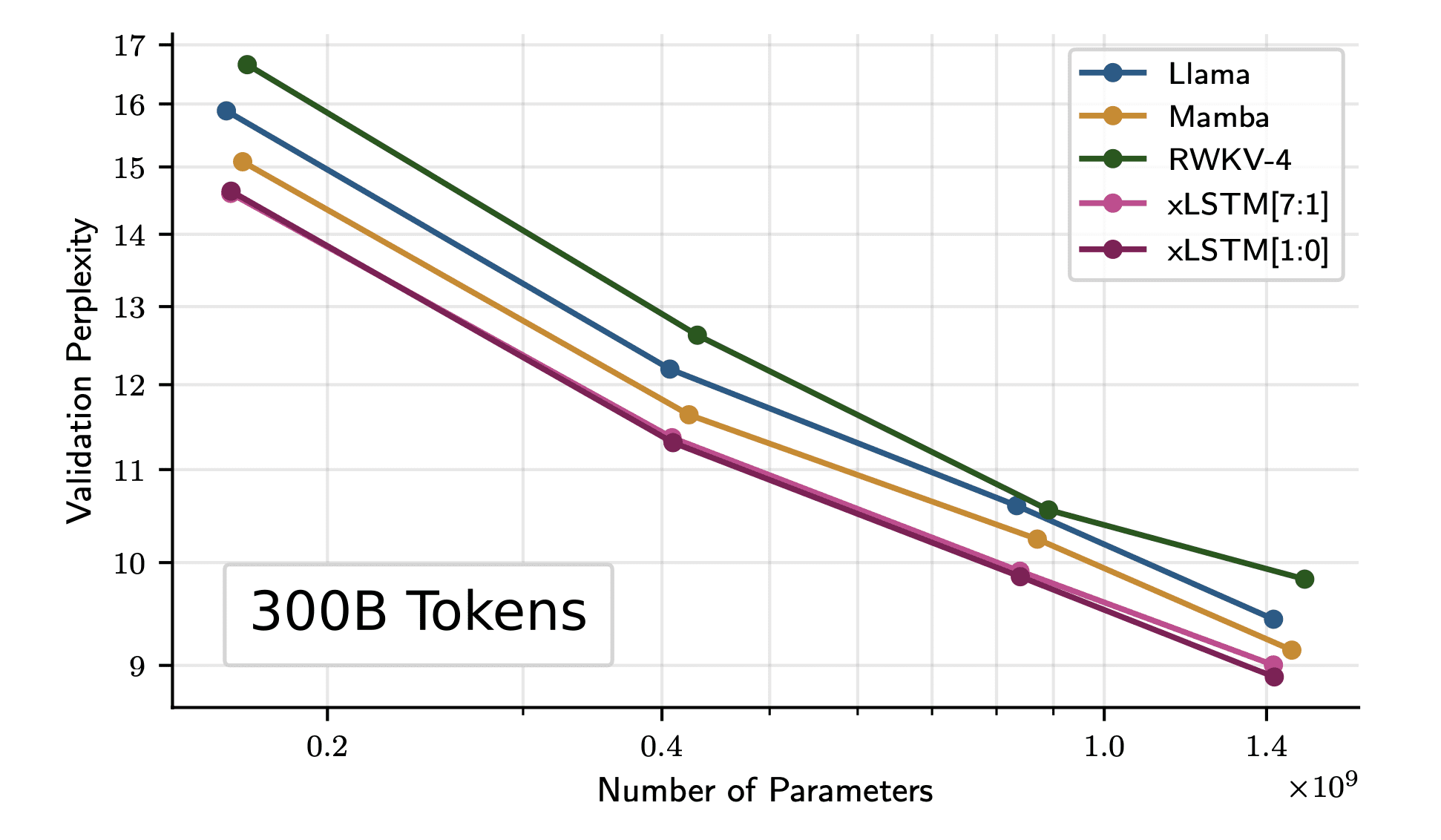

The authors further train the model on 300B tokens from the SlimPajama dataset. The results show that even for larger model sizes, xLSTM scales better than the current Transformer and Mamba architecture.

Image from Paper

Wrapping Up

That might have been difficult to understand and that is okay! Nonetheless, you should now understand why this research paper has got all the attention recently. It has been shown to perform at least as well as the recent large language models if not better. It is proven to be scalable for larger models and can be a serious competitor for all recent LLMs built on Transformers. Only time will tell if LSTMs will regain their glory once again, but for now, we know that the xLSTM architecture is here to challenge the superiority of the renowned Transformers architecture.

Kanwal Mehreen Kanwal is a machine learning engineer and a technical writer with a profound passion for data science and the intersection of AI with medicine. She co-authored the ebook “Maximizing Productivity with ChatGPT”. As a Google Generation Scholar 2022 for APAC, she champions diversity and academic excellence. She’s also recognized as a Teradata Diversity in Tech Scholar, Mitacs Globalink Research Scholar, and Harvard WeCode Scholar. Kanwal is an ardent advocate for change, having founded FEMCodes to empower women in STEM fields.

- SEO Powered Content & PR Distribution. Get Amplified Today.

- PlatoData.Network Vertical Generative Ai. Empower Yourself. Access Here.

- PlatoAiStream. Web3 Intelligence. Knowledge Amplified. Access Here.

- PlatoESG. Carbon, CleanTech, Energy, Environment, Solar, Waste Management. Access Here.

- PlatoHealth. Biotech and Clinical Trials Intelligence. Access Here.

- Source: https://www.kdnuggets.com/lstms-rise-again-extended-lstm-models-challenge-the-transformer-superiority?utm_source=rss&utm_medium=rss&utm_campaign=lstms-rise-again-extended-lstm-models-challenge-the-transformer-superiority

- :has

- :is

- :not

- 2017

- 2022

- a

- academic

- across

- added

- address

- advocate

- again

- AI

- All

- allow

- Allowing

- also

- an

- analyze

- and

- any

- APAC

- approach

- architecture

- ardent

- ARE

- AS

- At

- attention

- author

- authors

- available

- Backbone

- BE

- became

- been

- below

- Better

- Block

- Blocks

- both

- built

- but

- by

- CAN

- capabilities

- case

- Cause

- caused

- cell

- Cells

- challenge

- Champions

- change

- Changes

- ChatGPT

- combination

- community

- compare

- comparison

- competitor

- context

- contexts

- control

- Counter

- Current

- data

- data science

- deep

- deeper

- diagram

- different

- difficult

- Diversity

- diversity in tech

- each

- Early

- eBook

- empower

- engineer

- equation

- equations

- evaluation

- Even

- exceeds

- Excellence

- exponential

- extremely

- Fields

- First

- flow

- follows

- For

- form

- Founded

- from

- function

- functions

- further

- gained

- gate

- Gates

- gating

- generation

- glory

- got

- GPUs

- gradually

- graph

- had

- Hard

- harvard

- Have

- having

- head

- helped

- here

- Hidden

- HTTPS

- if

- improve

- in

- Including

- information

- initially

- input

- intersection

- into

- introduced

- Introduces

- issues

- IT

- ITS

- KDnuggets

- Key

- Know

- known

- language

- large

- larger

- latest

- layer

- learning

- least

- Length

- let

- limitations

- Limited

- linear

- Llama

- log

- longer

- lower

- lowest

- machine

- machine learning

- made

- maintain

- major

- Matrix

- maximizing

- medicine

- Memory

- method

- might

- minor

- Mixing

- model

- modeling

- models

- modified

- more

- much

- multiple

- namely

- Natural

- Natural Language

- Natural Language Processing

- network

- networks

- New

- nonetheless

- now

- of

- on

- once

- only

- original

- Other

- outdone

- Outperform

- output

- over

- overall

- Paper

- passed

- passion

- perform

- performance

- plato

- Plato Data Intelligence

- PlatoData

- position

- preserve

- Problem

- processing

- productivity

- profound

- proven

- question

- quoted

- raised

- recent

- recently

- recognized

- regain

- release

- Renowned

- research

- Research Community

- Results

- review

- Rise

- s

- same

- scalable

- scales

- scaling

- Scholar

- Science

- score

- see

- Sequence

- serious

- set

- she

- shortcomings

- should

- show

- showed

- shown

- Shows

- single

- sizes

- some

- square

- stable

- stacked

- standard

- State

- Stem

- Still

- Stir

- storage

- store

- superiority

- Take

- tasks

- tech

- Technical

- tell

- test

- than

- that

- The

- their

- These

- they

- this

- Through

- time

- to

- together

- token

- Tokens

- Train

- trained

- Training

- transformations

- transformer

- transformers

- understand

- until

- us

- usability

- use

- used

- uses

- using

- validation

- value

- Values

- vector

- vectors

- version

- View

- was

- we

- WELL

- were

- when

- which

- why

- will

- with

- within

- Women

- writer

- you

- zephyrnet