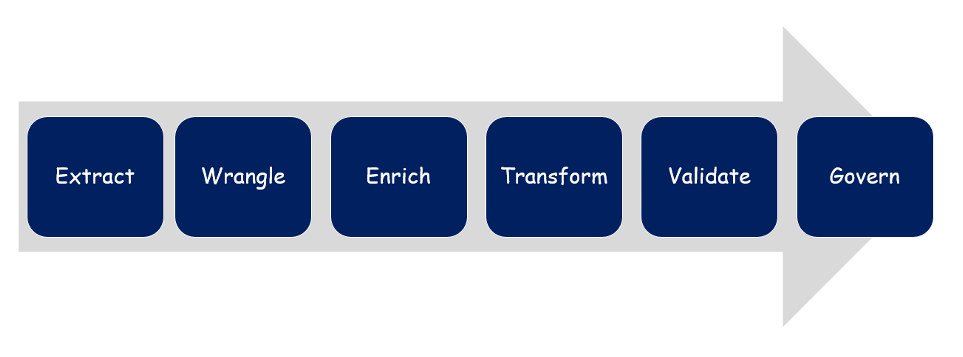

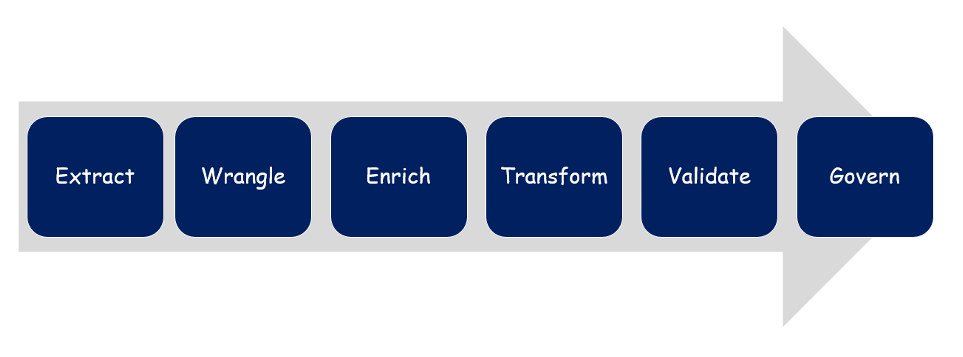

Today, corporate boards and executives understand the importance of data and analytics for improving business performance. However, most of the data in enterprises is of poor quality, and as a result the majority of data and analytics initiatives fail. To improve data quality, more than 80% of the work in data analytics projects goes into data engineering. Data engineering is the extraction, cleansing, enrichment, transformation, validation, and ingestion (and governance) of quality data into a consolidated system, commonly known as the data warehouse (or data mart or data lake). The data in the data warehouse is often the system of record from which data scientists derive insights. Typical data engineering activities include purging duplicates and unnecessary values, ingesting new records and attributes, transforming data values (including normalizing and standardizing them), and, finally, handling missing data.
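A few of the activities listed above (standardizing values, purging duplicates, and surfacing missing data) can be sketched as a tiny cleansing pass. This is a minimal, hypothetical sketch in Python using only the standard library; it assumes records arrive as dictionaries, that `None` stands for a missing (NULL) value, and that `id` identifies a record. The field names and helper functions are illustrative, not part of any particular tool.

```python
from typing import Optional

# Hypothetical record shape: field name -> value, with None meaning "missing".
Record = dict[str, Optional[str]]


def standardize(rec: Record) -> Record:
    """Trim whitespace, lowercase emails, and treat empty strings as missing."""
    out: Record = {}
    for field, value in rec.items():
        if value is not None:
            value = value.strip()
            if value == "":
                value = None  # an empty string carries no information
            elif field == "email":
                value = value.lower()
        out[field] = value
    return out


def dedupe(records: list[Record], key: str) -> list[Record]:
    """Purge duplicates, keeping the first record seen for each key value."""
    seen: set[str] = set()
    unique: list[Record] = []
    for rec in records:
        k = rec.get(key)
        if k is None:
            unique.append(rec)  # without a key, duplicates cannot be detected
        elif k not in seen:
            seen.add(k)
            unique.append(rec)
    return unique


def missing_fields(rec: Record) -> list[str]:
    """List the fields that still lack a value after cleansing."""
    return [field for field, value in rec.items() if value is None]


raw = [
    {"id": "1", "email": " Ana@Example.com ", "dob": "1990-04-02"},
    {"id": "1", "email": "ana@example.com", "dob": "1990-04-02"},
    {"id": "2", "email": "", "dob": None},
]
clean = dedupe([standardize(r) for r in raw], key="id")
for rec in clean:
    print(rec["id"], missing_fields(rec))
```

Standardizing first means that values differing only in whitespace or case compare equal in later steps; the fields still reported as missing are the input to the techniques discussed below.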

Data Engineering Process

Missing data is a value that was not captured and stored for a specific data variable, attribute, or field. Missing, lost, or incomplete data causes several problems for the business, such as:

- Reducing the utility and relevance of data for operations, compliance, and analytics.

- Reducing the statistical power of the insights derived. Statistical power (or sensitivity) is the probability that a significance test detects an effect when one truly exists.

- Causing bias in the insights derived. Data bias occurs when the data set is inaccurate and fails to represent the entire population. This, in turn, can lead to incomplete responses and skewed outcomes.
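Before choosing a remedy, it helps to measure how much data is actually missing. The sketch below (Python, standard library only; the records and field names are hypothetical) reports the share of missing values per field and shows how complete-case analysis, which simply drops any record with a gap, shrinks the usable sample. A smaller sample is one direct route to the loss of statistical power described above.

```python
from typing import Any

Record = dict[str, Any]  # None marks a missing value


def missing_rate(records: list[Record]) -> dict[str, float]:
    """Fraction of records in which each field is missing (None or absent)."""
    if not records:
        return {}
    fields: list[str] = []
    for rec in records:
        for field in rec:
            if field not in fields:
                fields.append(field)  # preserve first-seen field order
    return {
        field: sum(rec.get(field) is None for rec in records) / len(records)
        for field in fields
    }


def complete_cases(records: list[Record], fields: list[str]) -> list[Record]:
    """Keep only records that have a value for every listed field."""
    return [rec for rec in records if all(rec.get(f) is not None for f in fields)]


customers = [
    {"id": 1, "dob": None, "income": 52_000},
    {"id": 2, "dob": "1990-04-02", "income": None},
    {"id": 3, "dob": "1985-11-30", "income": 41_000},
    {"id": 4, "dob": "1971-06-15", "income": 38_000},
]
print(missing_rate(customers))
print(len(complete_cases(customers, ["dob", "income"])), "of", len(customers), "records usable")
```

Each field in this toy data is only 25% missing, yet half the records are lost once both fields are required, because the gaps fall in different records.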

Missing Data Categories

In databases, missing data is typically represented as NULL, which indicates the absence of a value. Missing data falls into three main categories:

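- Missing Completely at Random (MCAR): The probability that a value is missing is unrelated to any data, observed or unobserved. For example, if the capture process never collected the customer email address, it is missing in all the customer records, regardless of who the customer is.

- Missing Not at Random (MNAR): The probability that a value is missing depends on the missing value itself, so the gaps follow a pattern that the observed data cannot fully explain. For example, customers with low incomes, such as many students, may be less likely to report their income, so income values are disproportionately missing for the student category of customer records.

- Missing at Random (MAR): The probability that a value is missing depends only on other, observed data, not on the missing value itself. For example, the customer's date of birth is missing in 12% of the customer records, and whether it is missing is related to other recorded attributes rather than to the birth date itself.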
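In statistical terms, the three categories differ in what the probability of a value going missing depends on: nothing at all (MCAR), only other observed attributes (MAR), or the missing value itself (MNAR). The simulation below makes that distinction concrete on hypothetical customer data; the 30% student share, the income distributions, and the missingness probabilities are all assumptions chosen for illustration. It removes income values under each mechanism and compares the mean of the surviving values with the true mean.

```python
import random
from statistics import mean
from typing import Callable, Optional

Row = tuple[bool, float]  # (is_student, income) for one hypothetical customer
MissingProb = Callable[[bool, float], float]


def make_population(n: int, rng: random.Random) -> list[Row]:
    """Hypothetical customers: about 30% students, who earn less on average."""
    rows: list[Row] = []
    for _ in range(n):
        student = rng.random() < 0.3
        income = rng.gauss(15_000 if student else 60_000, 5_000)
        rows.append((student, income))
    return rows


# Probability that income goes missing, given the (student, income) pair.
MECHANISMS: dict[str, MissingProb] = {
    "MCAR": lambda student, income: 0.2,  # same for everyone
    "MAR": lambda student, income: 0.5 if student else 0.05,  # observed flag only
    "MNAR": lambda student, income: 0.5 if income > 60_000 else 0.05,  # the value itself
}


def mask(rows: list[Row], p_missing: MissingProb, rng: random.Random) -> list[Optional[float]]:
    """Return the income column with values removed according to p_missing."""
    return [None if rng.random() < p_missing(s, inc) else inc for s, inc in rows]


def observed_mean(rows: list[Row], incomes: list[Optional[float]],
                  student: Optional[bool] = None) -> float:
    """Mean of the incomes that survived, optionally within one group."""
    return mean(
        inc for (s, _), inc in zip(rows, incomes)
        if inc is not None and (student is None or s == student)
    )


rng = random.Random(42)
rows = make_population(10_000, rng)
true_all = mean(inc for _, inc in rows)
true_non_students = mean(inc for s, inc in rows if not s)
for name, p in MECHANISMS.items():
    incomes = mask(rows, p, rng)
    print(
        name,
        "overall bias:", round(observed_mean(rows, incomes) - true_all),
        "non-student bias:", round(observed_mean(rows, incomes, student=False) - true_non_students),
    )
```

With these assumed numbers, the MCAR gaps leave the mean nearly unbiased; the MAR gaps bias the overall mean upward (students drop out more often) but not the mean within the non-student group, so the bias can be corrected using the observed student flag; the MNAR gaps bias the estimate even within the group, and nothing in the observed data reveals by how much.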

Solutions for Missing Data in Analytics

So, what are the solutions for addressing the MCAR, MNAR, and MAR categories? Broadly, the solutions fall into three groups, one per category:

- For issues pertaining to MCAR, the solution is improved digitization, including the deployment of data capture technologies such as optical character recognition (OCR), intelligent document processing (IDP), bar codes, QR codes, web scraping, and more. However, all digital solutions need to be supplemented by user training to drive adoption.

- For issues pertaining to MNAR, the solution is improved data management, such as Master Data Management (MDM), data integration methods like ETL (extract/transform/load) and EAI (enterprise application integration), data governance, and more. The goal of data management is improved reliability, accuracy, security, and compliance at reduced cost.

- For issues pertaining to MAR, the solution can involve data imputation. Imputation is the process of replacing missing data with substituted values. Common data imputation methods include Lagrange interpolation, Gregory-Newton forward and backward interpolation, and regression.
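The interpolation methods named above (Lagrange and Gregory-Newton forward and backward) can be sketched in a few lines of Python. This is a minimal illustration, not a reference implementation: it assumes a numeric series whose known points are given as `xs`/`ys` lists (hypothetical names), and the Gregory-Newton versions additionally assume the `xs` are equally spaced. A missing value at position `x` is imputed by evaluating the interpolating polynomial there.

```python
from math import factorial


def lagrange(xs: list[float], ys: list[float], x: float) -> float:
    """Evaluate the Lagrange interpolating polynomial through (xs, ys) at x."""
    total = 0.0
    for i, (xi, yi) in enumerate(zip(xs, ys)):
        basis = 1.0
        for j, xj in enumerate(xs):
            if j != i:
                basis *= (x - xj) / (xi - xj)
        total += yi * basis
    return total


def difference_table(ys: list[float]) -> list[list[float]]:
    """Row k holds the k-th forward differences of ys."""
    table = [list(ys)]
    while len(table[-1]) > 1:
        prev = table[-1]
        table.append([b - a for a, b in zip(prev, prev[1:])])
    return table


def newton_forward(xs: list[float], ys: list[float], x: float) -> float:
    """Gregory-Newton forward interpolation, anchored at the first point."""
    p = (x - xs[0]) / (xs[1] - xs[0])
    result, coeff = 0.0, 1.0
    for k, row in enumerate(difference_table(ys)):
        # Adds p(p-1)...(p-k+1) * Δ^k y_0 / k!
        result += coeff * row[0] / factorial(k)
        coeff *= p - k
    return result


def newton_backward(xs: list[float], ys: list[float], x: float) -> float:
    """Gregory-Newton backward interpolation, anchored at the last point."""
    p = (x - xs[-1]) / (xs[1] - xs[0])
    result, coeff = 0.0, 1.0
    for k, row in enumerate(difference_table(ys)):
        # The backward difference ∇^k y_n is the last entry of row k.
        result += coeff * row[-1] / factorial(k)
        coeff *= p + k
    return result


# Hypothetical readings at equally spaced points, following y = x^2 + 1;
# the value at x = 1.5 is missing and is imputed by each method.
xs, ys = [0.0, 1.0, 2.0, 3.0], [1.0, 2.0, 5.0, 10.0]
for method in (lagrange, newton_forward, newton_backward):
    print(method.__name__, method(xs, ys, 1.5))
```

Because the three methods fit the same polynomial through the same points, they agree on 3.25 here. Evaluating `lagrange(xs, ys, 4.0)` instead gives a Lagrange extrapolation (17, up to floating-point rounding), which is only as trustworthy as the assumption that the trend continues. With many points, high-degree polynomials can oscillate strongly between them, so these methods are usually applied to a few neighbors of each gap.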

MAR Data Imputation Techniques

Missing at Random (MAR) is a very common missing data situation encountered by data scientists and machine learning engineers. This is mainly because MCAR- and MNAR-related problems are typically handled upstream by the IT department, leaving the remaining data issues to the data team. MAR data imputation substitutes missing data with a suitable value. Some commonly used data imputation methods for MAR are:

- In hot-deck imputation, a missing value is imputed from a randomly selected record (a donor) drawn from a pool of similar records. Because the donor is chosen at random, every record in the pool has an equal probability of being selected.

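- In cold-deck imputation, the value is not drawn at random from the current data set. Instead, it comes from a fixed source, such as a matching record in an earlier survey or historical data set. Simpler deterministic alternatives substitute a summary statistic of the observed values, such as the arithmetic mean, median, or mode.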

- With regression imputation, for example using multiple linear regression (MLR), the values of the independent variables are used to predict the missing values of the dependent variable. First, the regression model is fitted on the records where the dependent variable is present; then the model is validated; finally, the missing values are predicted and imputed.

- Interpolation is a data imputation technique that estimates the value of the dependent variable at a value of the independent variable lying between known data points. Key interpolation techniques include Gregory-Newton forward interpolation, Gregory-Newton backward interpolation, Lagrange interpolation, and more.

- Extrapolation is the imputation of a value beyond the range of a known set of values. It estimates a value by assuming that existing trends will continue. Popular extrapolation techniques are trend-line extrapolation and Lagrange extrapolation. While interpolation derives a value between two points in a data set, extrapolation estimates a value outside the range of the data set.
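Several of the techniques above can be sketched side by side on a single numeric column in which `None` marks a missing value. The following is a minimal, hypothetical Python sketch (standard library only): the group labels that define "similar" records for hot-deck imputation, the external reference data for cold-deck imputation, and all function names are assumptions for illustration. The regression step uses a single predictor fitted by ordinary least squares; multiple linear regression follows the same fit-then-predict pattern with more predictors.

```python
import random
from statistics import mean, median
from typing import Callable, Optional

Column = list[Optional[float]]


def hot_deck(values: Column, groups: list[str], rng: random.Random) -> list[float]:
    """Replace each gap with a random donor value from a record in the same group.

    Assumes every group has at least one observed value to donate.
    """
    donors: dict[str, list[float]] = {}
    for v, g in zip(values, groups):
        if v is not None:
            donors.setdefault(g, []).append(v)
    # rng.choice gives every donor in the pool an equal chance of selection.
    return [v if v is not None else rng.choice(donors[g]) for v, g in zip(values, groups)]


def cold_deck(values: Column, ids: list[str], reference: dict[str, float]) -> Column:
    """Fill gaps from a fixed external source (e.g., an earlier load) keyed by record id."""
    return [v if v is not None else reference.get(i) for v, i in zip(values, ids)]


def statistic_fill(values: Column, stat: Callable[[list[float]], float] = median) -> list[float]:
    """Replace every gap with one summary statistic (mean, median, or mode) of observed values."""
    fill = stat([v for v in values if v is not None])
    return [fill if v is None else v for v in values]


def fit_line(xs: list[float], ys: list[float]) -> tuple[float, float]:
    """Ordinary least squares fit of y = a + b * x; returns (a, b)."""
    mx, my = mean(xs), mean(ys)
    b = sum((x - mx) * (y - my) for x, y in zip(xs, ys)) / sum((x - mx) ** 2 for x in xs)
    return my - b * mx, b


def regression_fill(x: list[float], y: Column) -> list[float]:
    """Fit on complete pairs, then predict y wherever it is missing."""
    pairs = [(xi, yi) for xi, yi in zip(x, y) if yi is not None]
    a, b = fit_line([xi for xi, _ in pairs], [yi for _, yi in pairs])
    return [yi if yi is not None else a + b * xi for xi, yi in zip(x, y)]


# Hypothetical data: income in thousands, grouped by customer segment.
income: Column = [52.0, None, 48.0, 31.0, None]
segment = ["staff", "staff", "staff", "student", "student"]
print(hot_deck(income, segment, random.Random(0)))
print(cold_deck(income, ["c1", "c2", "c3", "c4", "c5"], {"c2": 50.0}))
print(statistic_fill(income))        # median of the observed values
print(statistic_fill(income, mean))  # arithmetic mean instead

# Hypothetical tenure (years) predicting annual spend, with one spend value missing.
tenure = [1.0, 2.0, 3.0, 4.0, 5.0]
spend: Column = [2.0, 4.0, None, 8.0, 10.0]
print(regression_fill(tenure, spend))
```

Evaluating the fitted line at a point beyond the observed range, such as `a + b * 7.0` after `a, b = fit_line(...)`, is the trend-line extrapolation mentioned above. Which fill is appropriate depends on the data: a single statistic shrinks the spread of the column, whereas hot-deck draws reuse observed values and keep some of their variability.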

The overall strategy for managing missing data in analytics is illustrated in the image below.

Missing Data Categories, Solutions, and Techniques

Although data imputation can improve data quality, care must be taken to choose an appropriate technique. Some imputation techniques do not preserve the relationships between variables, some can distort the underlying data distribution, some depend on a specific data type, and so on. So, instead of relying on a single imputation technique, the strategy should be to use multiple techniques to impute values. Ensemble techniques support this by combining the outputs of multiple imputation algorithms into a single, more robust estimate.
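One simple way to act on this advice is to run several imputers and combine their estimates. The sketch below is an illustrative assumption in Python, not an algorithm prescribed here: it averages, position by position, the values proposed by three basic imputers (mean, median, and linear interpolation between neighboring positions) on a hypothetical ordered series. Other ensembling schemes, such as weighting each imputer by its error on deliberately hidden known values, follow the same pattern.

```python
from statistics import mean, median
from typing import Callable, Optional

Column = list[Optional[float]]
Imputer = Callable[[Column], list[float]]


def mean_fill(values: Column) -> list[float]:
    fill = mean([v for v in values if v is not None])
    return [fill if v is None else v for v in values]


def median_fill(values: Column) -> list[float]:
    fill = median([v for v in values if v is not None])
    return [fill if v is None else v for v in values]


def interpolate_fill(values: Column) -> list[float]:
    """Linear interpolation between the nearest observed neighbors by position.

    Gaps at either end copy the nearest observed value; assumes at least one
    value is observed.
    """
    known = [(i, v) for i, v in enumerate(values) if v is not None]
    out: list[float] = []
    for i, v in enumerate(values):
        if v is not None:
            out.append(v)
            continue
        left = [(j, w) for j, w in known if j < i]
        right = [(j, w) for j, w in known if j > i]
        if left and right:
            (j0, w0), (j1, w1) = left[-1], right[0]
            out.append(w0 + (w1 - w0) * (i - j0) / (j1 - j0))
        else:
            out.append(left[-1][1] if left else right[0][1])
    return out


def ensemble_fill(values: Column, imputers: list[Imputer]) -> list[float]:
    """Average the candidate values from every imputer at each missing position."""
    candidates = [impute(values) for impute in imputers]
    return [
        v if v is not None else mean(c[i] for c in candidates)
        for i, v in enumerate(values)
    ]


series: Column = [10.0, None, 20.0, 40.0, None]
print(ensemble_fill(series, [mean_fill, median_fill, interpolate_fill]))
```

Averaging tends to smooth out the quirks of any single imputer, but the combined values should still be checked against known data. This is also distinct from multiple imputation in the statistical sense, which keeps several imputed data sets and pools the analysis results rather than averaging the imputed values into one.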

Source: https://www.dataversity.net/managing-missing-data-in-analytics/
