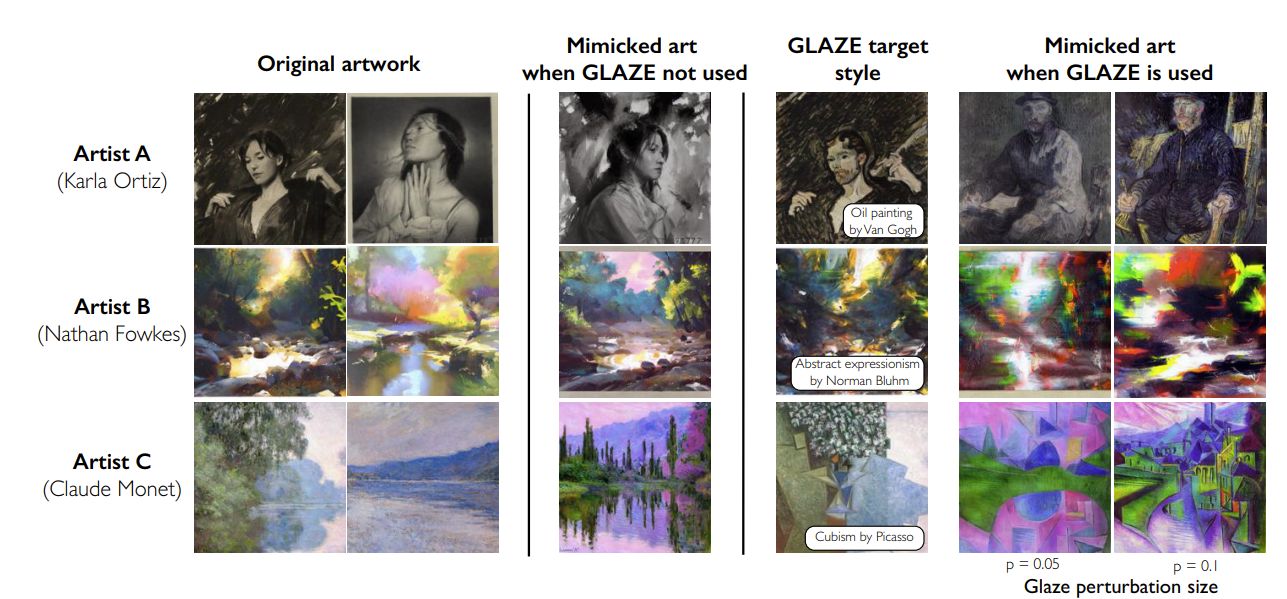

With the rise of machine-generated art, a major discussion has begun about the ethics of using existing, human-made art to train these art models. Their defenders often claim that the generator cannot reproduce the original art, but this is belied by the fact that one possible query to these generators is to produce art in the style of a specific artist. This is where feature extraction comes into play, along with the Glaze tool as a potential means of obfuscation.

Glaze was developed by researchers at the University of Chicago, and the theory behind it is covered in their preprint paper. The essential concept is that an artist picks a target ‘cloak style’, which Glaze uses to calculate specific perturbations that are added to the original image. These perturbations are not easily detected by the human eye, but will be picked up by the feature extraction algorithms of current machine-generated art models.
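To make the idea of a calculated perturbation more concrete, here is a minimal toy sketch in Python. It is not Glaze's actual algorithm: the random linear `extractor`, the flat 64-value "images", the per-pixel `budget` and the function name `cloak` are all assumptions made for illustration. It only shows the general shape of the technique, which is to nudge an image so that its extracted features move toward those of a target while every pixel changes by only a small amount.

```python
import numpy as np

def cloak(image, target, extractor, budget=0.05, steps=200, lr=0.01):
    """Toy cloak: projected gradient descent against a linear feature extractor.

    Hypothetical stand-in for a real model's feature extractor; all names
    and parameters here are illustrative assumptions.
    """
    delta = np.zeros_like(image)
    target_features = extractor @ target
    for _ in range(steps):
        # Gradient of the squared distance between the cloaked image's
        # features and the target's features, with respect to delta.
        residual = extractor @ (image + delta) - target_features
        grad = 2.0 * extractor.T @ residual
        delta -= lr * grad
        # Keep each pixel change within the budget so it stays subtle.
        delta = np.clip(delta, -budget, budget)
        # Keep the resulting pixel values inside the valid [0, 1] range.
        delta = np.clip(image + delta, 0.0, 1.0) - image
    return image + delta

rng = np.random.default_rng(0)
extractor = rng.normal(size=(8, 64)) / 8.0   # assumed toy feature extractor
image = rng.uniform(size=64)                 # stands in for the artist's image
target = rng.uniform(size=64)                # stands in for the cloak style
cloaked = cloak(image, target, extractor)

before = np.linalg.norm(extractor @ image - extractor @ target)
after = np.linalg.norm(extractor @ cloaked - extractor @ target)
print(f"feature distance to target: {before:.3f} -> {after:.3f}")
print(f"largest pixel change: {np.max(np.abs(cloaked - image)):.3f}")
```

The two `np.clip` calls are what keep the change small: the first bounds how far any pixel may move, the second keeps the result a valid image. A real tool would work against the feature extractors of actual image models and would likely use a perceptual measure of visibility rather than this simple per-pixel bound.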

As a result, when such a model is asked to generate art in the style of an artist who cloaked their art, the result will be art in the style of the cloak, not that of the artist. The tool is available for download as a beta release for macOS and Windows (10+). The FAQ does not detail the possibility of a Linux version, but does helpfully answer questions such as whether the cloaking is easy to defeat using filters or screenshots (in short: no).

Regardless of your views on whether the content in training data sets requires consent from the original creator, it’s hard to argue with the right of artists to protect their style using such cloaks when posting content online.

(Thanks to [Tina Belmont] for the tip)

- Source: https://hackaday.com/2023/03/20/modifying-artwork-with-glaze-to-interfere-with-art-generating-algorithms/
