The number and volume of the warnings about a post-quantum cryptography (PQC) world are rising, as governments, banks, and other entities prepare for a rash of compromised data and untrustworthy digital signatures.

Exactly when this will become a genuine threat is still somewhat fuzzy, because it depends on progress in developing robust qubits. A report by McKinsey & Co. estimates that by 2030, about 5,000 quantum computers will be operational, but the hardware and software needed to solve complex problems may not exist until about 2035. Others say that coherency between qubits and modular architectures could speed things up.

In the interim, facing a looming threat with an uncertain deadline, the security community is racing to build up defenses. If early cryptography was about symbols and substitutions, at least part of modern cryptography will be about using algorithms to fight algorithms.

“Cryptographers and mathematicians have been talking about this since about 2017, which is when they first sat down and said, ‘This is bad. We need to update these algorithms,’” noted Scott Best, senior director, silicon security products at Rambus. “Terrifically, they’ve made a lot of progress.”

The power of quantum computing comes from the rich physics in the quantum domain, where there is superposition, when the 0 and 1 are in the same state.

“Think about a coin flipping,” said Mohamed Hassan, quantum solutions and planning lead for Keysight, which is already working on a quantum EDA tool. “Instead of being tail or head, that coin is actually spinning on both ends at the same time. If you stop it and measure it, it will become one of the two, and it will collapse the quantum state. At the same time there is quantum entanglement, where no matter how far apart they are, if you measure one of them, you could guess the state of the other.”

RSA, ECC

When it comes to the most widely used encryption algorithms, first and foremost is RSA (Rivest–Shamir–Adleman), an asymmetric form of cryptography in which a sender encodes a message with a public key that can be widely distributed, knowing that only a recipient with the corresponding private key can decode it. This means that even if the message is hijacked, it is still safe from decryption. Its strength stems from the key length, or the number of bits in the key. The current NIST-recommended RSA key length is 2,048-bits.

RSA uses a complex formula to determine secure keys. At its heart is the deceptively simple process of multiplying two primes, the product of which becomes the basis of further refinements to create the keys. What made RSA apparently rock-solid safe was the difficulty of “defactorization,” i.e., discovering what the original prime factors were. If a product is small enough, most people could defactorize it just by knowing basic multiplication, but if the primes used are large enough, trying to discover the original factors can take thousands of years.

Or so it was thought. In 1994, MIT mathematician Peter Shor created an algorithm that solved the discrete log problem, which opened the way to breaking RSA encryption. Essentially, discrete logarithms are functions that are easy to perform, but difficult to reverse, such as defactorization. Using Shor’s algorithm on a quantum computer, finding RSA factors could go from being nearly impossible to merely a question of computational time and power.

“The sorts of things that quantum computers are good at is fairly limited,” said Michael Osborne, manager of the Security Research Group at IBM Research in Zurich, Switzerland. “They’re not very good at what we call exact problems, because they’re very probabilistic in how they perform. Unfortunately, Shor’s algorithm benefits from quantum speed-up, because essentially it fits a different way of looking at the problem.”

Another popular kind of asymmetric encryption is elliptic curve cryptography (ECC), which is based on manipulating elliptic curves. (The initialism should not be confused for error correcting codes, which are part of information theory.) ECC is both faster and more complicated than RSA, and underpins blockchain security. Nevertheless, the protective complexity of its calculations also could be undone by the speed of quantum computers, because they share mathematical roots in the Diffie–Hellman key exchange.

In addition, there is symmetric encryption, which most often is used for authentication. Symmetric encryption uses only a single key for both encryption and decryption. Its best-known example is the NSA-approved AES. Because they are based on hashes, they are less vulnerable to Shor’s algorithm, but potentially vulnerable to other approaches.

“Shor’s algorithm breaks pretty much all of the public key cryptosystems that we use today, which includes RSA and Diffie-Hellman, and the elliptic curve versions,” said Dustin Moody, a mathematician and leader of the post-quantum cryptography project at NIST. “With the symmetric algorithms like AES, and hash functions like SHA2 and SHA3, we won’t have to swap out the algorithm because they’re not broken. At worst, we just use a little bit longer key links.”

Shor’s algorithm may be performed even faster due to research by Oded Regev, a professor in the Courant Institute of Mathematical Sciences at New York University, which extended Shor’s work on period finding. (A more technical discussion may be found here.)

Harvest now, decrypt later

These vulnerabilities have led attackers to adopt what has been dubbed a “Harvest Now, Decrypt Later” (HNDL) strategy, in which hackers break in, steal as much encrypted data as they can, and wait for fault-tolerant quantum computers to come online so they can glean information worth acting on or holding for ransom.

Despite the concerns, Osborne questions whether we should worry about HNDL attacks ahead of other attacks, based on the traditional approach to security, which is very pragmatic. “You’re never going to be able to protect against all attacks, so you need to prioritize both attacker capability and attack cost to understand the relevant risk,” he said.

While HDNL may seem like a vague and distant threat, there are others. Consider Grover’s algorithm, for example, which can be used to find patterns in data. Grover’s algorithm could realize its full power with quantum computing, thus becoming a supercharged search tool that could make sense of all the decrypted harvested data. Like Shor’s, it has the potential to break cryptographic keys.

Quantum errors may buy time for security measures

In the current noisy, intermediate-scale quantum era, quantum computers have far below the number of qubits to break the RSA’s recommended 2,048 keys. In 2019, the estimate was 20 million physical qubits, a number that is often challenged. Last winter, Chinese researchers claimed to have factored integers up to 48 bits with 10 qubits. However, many researchers are skeptical of the work. Shor himself tweeted, “There are apparently possible problems with this paper.”

Still, the clock is ticking. How fast it’s ticking is unknown. Like their classical counterparts, quantum computers are vulnerable to both internal and environmental noise, which can lead to reliability problems. It’s arguably worse with quantum computers, because noise can cause qubits to decohere (lose the state of superposition), blurring the quantum states that are necessary to sustain computations, and thus not just degrading performance, as might happen in a classical computer, but destroying it entirely.

Many discussions about the number of qubits needed to break encryption are based on logical qubits, a far smaller number than the physical qubits that will be required to make the computer actually work. Any claims about the number of qubits in a device have to be balanced against the number of physical qubits that can survive decoherence. The current estimate is that 1 logical qubit will require 1,000 physical qubits, an overhead that severely cuts into projections about when quantum computers will fully come online.

To push the field forward, IBM, AWS, Google, and several start-ups, such as QuEra, Infleqtion, and Quantinuum, are striving to achieve high coherence times through improvements in error correction. Most of the refinements are based on quantum low-density parity check (qLDPC) codes, which are, in essence, quantum level-updates on classical Shannon parity checks. Last month, IBM led the pack, with a cover story in Nature that used a qLDPC variant to drop error correction overhead by 90%.

“The Nature article moves the date to the left because fewer logical qubits are required to achieve the same attack. This heightens the urgency of getting ready,” IBM’s Osborne said.

Putting even more pressure on security researchers, the date may go even farther left, due to research announced this week by Quantinuum and Microsoft. Together, they have demonstrated “the most reliable logical qubits on record with an error rate 800x better than physical qubits,” having run more than 14,000 qubits without an error. The achievement uses active syndrome extraction, which allows for error diagnosis and correction without destroying logical qubits, thus drastically reducing the 1/1000 ratio. For all the excitement, it is worth noting their paper hasn’t been peer reviewed.

The quantum menagerie

In addition to their eponymous algorithm, Rivest, Shamir, and Adleman also created the cryptography celebrity couple, “Alice and Bob,” embodiments of the “A” and “B” used in equations. It’s such an insider cryptography joke that a French quantum start-up took it as their name. The Alice and Bob approach reduces errors by creating a more robust qubit, which they named for the most famous feline in quantum physics. Combined with qLDPC error correction, “cat qubits” are resistant to bit-flipping, and the company has shown they could potentially reduce the number of qubits needed to run Shor’s algorithm by up to 200 times.

AWS also has adopted the cat qubit idea and laid out its own approach. However, like all qubits, cat qubits are still vulnerable to phase transition errors.

Cat qubits are actually part of a menagerie of qubit designs. The top five, as described in detail in a Science review paper, are superconducting circuits, of which cat qubits are a subset. In different flavors, superconducting circuits also are favored by Google, IBM, and AWS. The next four approaches are quantum dots, color centers, trapped ions (used by Quantinuum in their partnership with Microsoft), and topological qubits, also favored by Microsoft.

“There are so many hardware technologies proposed for making a quantum computer,” Keysight’s Hassan remarked. “All of them have one common feature, which is to have a quantum object as a code element to do the computation — to act as the qubit. For superconducting qubits, it’s basically a Josephson junction. What’s fascinating is that superconducting qubits under the hood are microwave circuits.”

The variety of qubit ideas and the bespoke nature of the work may also buy security researchers time. “There are many gaps between the tools,” said Hassan. “People use point tools and homegrown workflows to complete their design and that leads to long costly development cycles and non-standard engineering procedures. Things will need to move from the space of research and development to production and that’s when the EDA tools are needed to enable the systematic engineering of those systems.”

If Microsoft, IBM, Alice & Bob, or other players succeed at error correction, Schrödinger’s Cat may spring out of the box as a tiger that could rip apart asymmetric cryptography. However, attention must still be paid to progress in the quantity of physical qubits. Last December, IBM released a breakthrough 1,000 qubit chip, still far below most legitimate code-breaking estimates.

Countermeasures

Nevertheless, security professionals are not willing to risk that it’s all hype and everything will be all right. RSA has been holding a competition to find the best preventive measures, only to discover that many promising approaches are still vulnerable.

“There were 69 proposals that started the competition,” said Jayson Bethurem, vice president of marketing and business development at Flex Logix. “Five years later, there are four remaining.” The final results are expected to be released sometime this summer. There are still some hash-based algorithms moving forward. The ones that have survived the longest are the ones where they just keep increasing the key length. The data path gets wider, so of course that makes it harder because true to the power of the data path, creating all those combinations just takes longer to hack.”

The approaches that seem most robust against known attacks while offering practical usability are lattice-based algorithms.

“Quantum computers are not very good at some black-box combinatorial problems,” said IBM’s Osborne. “And lattices are a combinatorial problem. Everyone can picture a two-dimensional lattice. If it’s a regular lattice, and you pick a point somewhere in your two-dimensional lattice, which is close to one of the other points, but not on it, it’s very intuitive — you can just see that in your head. But if you have a lattice that has hundreds of dimensions, the problem is extremely difficult, because you have to try out so many combinations to figure out which of the points in this many-dimensional lattice is close to your point. It’s a combinatorial explosion of things you have to try, which is what makes this effective for cryptography. You could try a brute force approach, but it wouldn’t even be a drop in the ocean of what’s required.”

The currently favored lattice-based algorithms are standardizations of older techniques. “There’s an updated key exchange mechanism called the CRYSTALS-Kyber algorithm, and an updated digital signature algorithm called the CRYSTALS-Dilithium algorithm that have now been standardized enough that people expect they’re going to live for a long time, especially Dilithium,” according to Rambus’ Best.

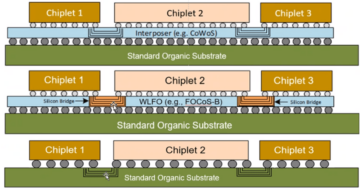

Fig.1: Rambus’s approach to post-quantum security. Source: Rambus

“Even so, we’re not putting all our eggs in the lattice basket,” NIST’s Moody said. “While lattice-based cryptography is definitely the most promising, we selected four algorithms for standardization. Three of them are based on lattices. The second most promising area is what’s called code-based cryptography, which uses error correcting codes. It’s similar in intuition to lattice-based cryptography. The codes are represented using vectors. When you add two code words, you get another code word. Part of what goes on with error correcting codes is if you get a string of bits, that’s an arbitrary string. You’re often wanting to find the code word that’s closest, because that’s the correction that you want to make. You assume that a few bits got garbled, but it’s supposed to be this nearest code word. That’s very analogous to the situation with the lattices. So an idea for a crypto system is you have a matrix, which generates your code, you scramble it to hide the structure that you designed it with, and you make that your public key, which is what attackers will have to work with. Whereas you design your error correcting code, the matrix to generate it, with some special structure that enables you to decode efficiently. This means if there’s an arbitrary string of bits in your vector space, you can decode and find the nearest codeword in an efficient fashion.”

For companies looking for in-house measures, many companies emphasize the necessity for crypto-agility. “Large ASIC manufacturers and networking manufacturers, all are starting to come around to crypto-agility,” said Flex Logix’s Bethurem. In the future, all manufacturers are expected to have a core cryptography engine that does a lot of the major functions and major processing of these complex algorithms. “They’ll probably also have to include a smaller cryptography engine that is the adaptability piece. If you don’t have some kind of flexibility, at some point, the assumptions you made to protect your product today will likely be challenged and broken in the future.”

Conclusion

While everyone’s trying to maintain their optimism about taming PQC, Dana Neustadter, director of product management for security IP solutions at Synopsys, urges designers to think realistically about what it’s going to mean to implement these solutions.

“The risk of introducing flawed implementations and solutions is very high because these are new algorithms, which are themselves in transit,” Neustadter said. “We’ve just seen with NIST that they were about to select a group of algorithms to be standardized, and at the last minute, the particular algorithm was compromised. How do you transition the whole system and devices to algorithms that are totally different than what we’re dealing with today? Even though these are alternative algorithms it doesn’t mean they might not be broken, because they weren’t studied enough.”

The other risk, Neustadter noted, is that newness and unfamiliarity alone can increase the likelihood of flawed implementations. “If you look back in time, the situation is similar to when we moved from symmetric algorithms, like DES to AES, except it will be another order of magnitude more complex and take years. We’re transitioning to totally new types of algorithms where you deal with bigger keys, which affects systems memory availability. You need to have agility because algorithms change. It’s not going to be stable overnight. Eventually, these problems can be solved in time, but it’s a serious threat — at least for a while.”

Others agree, and are sounding the warning. “It’s our professional obligation, perhaps our patriotic obligation, to push the adoption of quantum-safe encryption,” said Rambus’ Best. “This technology is coming.”

Related Stories

Quantum Plus AI Widens Cyberattack Threat Concerns

Post-quantum cryptography must be applied now to prevent hackers from decoding today’s data when quantum computers become available.

Quantum Computing: Top 5 Questions Answered

Quantum error detection, suppression, and correction strategies are critical to realizing fault-tolerant quantum computers.

References

Pfeiffer, S. Chinese researchers: RSA is breakable. Others: Do not panic! Help Net Security Accessed 28 March 2024, https://www.helpnetsecurity.com/2023/01/25/chinese-researchers-rsa-is-breakable-do-not-panic/

Bravyi, S., Cross, A.W., Gambetta, J.M. et al. High-threshold and low-overhead fault-tolerant quantum memory. Nature 627, 778–782 (2024). https://doi.org/10.1038/s41586-024-07107-7

Lescanne, R., Villiers, M., Peronnin, T. et al. Exponential suppression of bit-flips in a qubit encoded in an oscillator. Nat. Phys. 16, 509–513 (2020). https://doi.org/10.1038/s41567-020-0824-x

- SEO Powered Content & PR Distribution. Get Amplified Today.

- PlatoData.Network Vertical Generative Ai. Empower Yourself. Access Here.

- PlatoAiStream. Web3 Intelligence. Knowledge Amplified. Access Here.

- PlatoESG. Carbon, CleanTech, Energy, Environment, Solar, Waste Management. Access Here.

- PlatoHealth. Biotech and Clinical Trials Intelligence. Access Here.

- Source: https://semiengineering.com/quantum-computing-challenged-by-security-error-correction/

- :has

- :is

- :not

- :where

- $UP

- 000

- 1

- 10

- 14

- 1994

- 2%

- 200

- 2017

- 2019

- 2020

- 2024

- 2030

- 2035

- 28

- 48

- 5

- 5 Questions

- a

- Able

- About

- According

- Achieve

- achievement

- Act

- acting

- actually

- adaptability

- add

- addition

- adopt

- adopted

- Adoption

- AES

- affects

- against

- agree

- ahead

- AI

- AL

- algorithm

- algorithms

- alice

- All

- allows

- alone

- already

- also

- alternative

- Amazon

- an

- and

- announced

- Another

- any

- apart

- applied

- approach

- approaches

- arbitrary

- architectures

- ARE

- AREA

- arguably

- around

- article

- AS

- asic

- assume

- assumptions

- At

- attack

- attacker

- attackers

- Attacks

- attention

- Authentication

- availability

- available

- AWS

- back

- Bad

- balanced

- Banks

- based

- basic

- Basically

- basis

- basket

- BE

- because

- become

- becomes

- becoming

- been

- being

- below

- benefits

- bespoke

- BEST

- Better

- between

- bigger

- Bit

- bits

- Black-box

- blockchain

- Blockchain security

- bob

- both

- Box

- Break

- Breaking

- breaks

- breakthrough

- Broken

- brute force

- build

- business

- business development

- but

- buy

- by

- calculations

- call

- called

- CAN

- capability

- CAT

- Cause

- Celebrity

- Centers

- challenged

- change

- check

- Checks

- chinese

- circuits

- claims

- Clock

- Close

- closest

- CO

- code

- Codes

- Coin

- Collapse

- color

- combinations

- come

- comes

- coming

- Common

- community

- Companies

- company

- competition

- complete

- complex

- complexity

- complicated

- Compromised

- computation

- computational

- computations

- computer

- computers

- computing

- Concerns

- confused

- Consider

- Core

- Corresponding

- Cost

- costly

- could

- counterparts

- Couple

- course

- cover

- Cover Story

- create

- created

- Creating

- critical

- Cross

- crypto

- cryptographic

- cryptography

- Current

- Currently

- curve

- curves

- cuts

- Cyberattack

- cycles

- Dana

- data

- Date

- deadline

- deal

- dealing

- December

- Decoding

- Decrypt

- decryption

- defenses

- definitely

- depends

- described

- Design

- designed

- designers

- designs

- detail

- Detection

- Determine

- developing

- Development

- device

- Devices

- diagnosis

- different

- difficult

- Difficulty

- digital

- dimensions

- Director

- discover

- discovering

- discrete

- discussion

- discussions

- Distant

- distributed

- do

- does

- Doesn’t

- domain

- Dont

- down

- drastically

- Drop

- dubbed

- due

- e

- E&T

- ecc

- Effective

- efficient

- efficiently

- Eggs

- element

- Elliptic

- emphasize

- enable

- enables

- encrypted

- encryption

- ends

- Engine

- Engineering

- enough

- entanglement

- entirely

- entities

- environmental

- equations

- Era

- error

- Errors

- especially

- essence

- essentially

- estimate

- estimates

- Ether (ETH)

- Even

- eventually

- everyone

- everyone’s

- everything

- exact

- example

- Except

- exchange

- Excitement

- exist

- expect

- expected

- explosion

- exponential

- extended

- extremely

- facing

- factored

- factors

- fairly

- famous

- far

- fascinating

- Fashion

- FAST

- faster

- favored

- Feature

- few

- fewer

- field

- Fig

- fight

- Figure

- final

- Find

- finding

- First

- fits

- five

- flavors

- flawed

- Flexibility

- flipping

- For

- Force

- foremost

- Forward

- found

- four

- French

- from

- full

- fully

- functions

- further

- future

- gaps

- generate

- generates

- genuine

- get

- gets

- getting

- Go

- Goes

- going

- good

- got

- Governments

- Group

- guess

- hack

- hackers

- happen

- harder

- Hardware

- hash

- Have

- having

- he

- head

- Heart

- Hide

- High

- himself

- holding

- homegrown

- hood

- How

- However

- HTTPS

- Hundreds

- Hype

- i

- IBM

- idea

- ideas

- if

- implement

- implementations

- impossible

- improvements

- in

- include

- includes

- Increase

- increasing

- information

- Insider

- Institute

- integers

- interim

- internal

- into

- introducing

- intuition

- intuitive

- IP

- IT

- ITS

- just

- Keep

- Key

- keys

- Kind

- Knowing

- known

- laid

- large

- Last

- later

- lead

- leader

- Leads

- least

- Led

- left

- legitimate

- Length

- less

- like

- likelihood

- likely

- Limited

- links

- little

- live

- log

- logarithms

- logical

- Long

- long time

- longer

- longest

- Look

- looking

- looming

- lose

- Lot

- made

- magnitude

- maintain

- major

- make

- MAKES

- Making

- management

- manager

- manipulating

- Manufacturers

- many

- March

- March 2024

- Marketing

- mathematical

- mathematicians

- Matrix

- Matter

- max-width

- May..

- McKinsey

- mean

- means

- measure

- measures

- mechanism

- Memory

- merely

- message

- Michael

- Microsoft

- might

- million

- minute

- MIT

- Modern

- modular

- Mohamed

- Month

- more

- most

- move

- moved

- moves

- moving

- much

- multiplication

- multiplying

- must

- name

- Named

- Nature

- nearly

- necessary

- necessity

- Need

- needed

- net

- networking

- never

- Nevertheless

- New

- New York

- New York University

- next

- nist

- no

- Noise

- noted

- noting

- now

- number

- object

- obligation

- ocean

- of

- offering

- often

- older

- on

- ONE

- ones

- online

- only

- opened

- operational

- Optimism

- or

- order

- original

- Other

- Others

- our

- out

- overhead

- overnight

- Pack

- paid

- Paper

- parity

- part

- particular

- Partnership

- path

- patterns

- peer

- People

- perform

- performance

- performed

- perhaps

- period

- Peter

- peter shor

- phase

- physical

- Physics

- pick

- picture

- piece

- planning

- plato

- Plato Data Intelligence

- PlatoData

- players

- plus

- Point

- points

- Popular

- possible

- potential

- potentially

- power

- PQC

- Practical

- pragmatic

- Prepare

- president

- pressure

- pretty

- prevent

- Prime

- Prioritize

- private

- Private Key

- probably

- Problem

- problems

- procedures

- process

- processing

- Product

- product management

- Production

- Products

- professional

- professionals

- Professor

- Progress

- project

- projections

- promising

- Proposals

- proposed

- protect

- Protective

- public

- Public Key

- Push

- Putting

- Quantamagazine

- Quantinuum

- quantity

- Quantum

- Quantum Computer

- quantum computers

- quantum computing

- Quantum dots

- quantum entanglement

- quantum physics

- Qubit

- qubits

- question

- Questions

- R

- racing

- Ransom

- rash

- Rate

- ratio

- ready

- realize

- realizing

- recommended

- record

- reduce

- reduces

- reducing

- regular

- released

- relevant

- reliability

- reliable

- remaining

- remarked

- represented

- require

- required

- research

- research and development

- research group

- researchers

- resistant

- Results

- review

- reviewed

- Rich

- Rid

- right

- rising

- Risk

- robust

- roots

- rsa

- Run

- s

- safe

- Said

- same

- say

- Science

- SCIENCES

- scott

- Search

- Second

- secure

- security

- security researchers

- see

- seem

- seen

- select

- selected

- sender

- senior

- sense

- serious

- several

- severely

- sha3

- shannon

- Share

- should

- signature

- Signatures

- Silicon

- similar

- Simple

- since

- single

- situation

- small

- smaller

- So

- Software

- Solutions

- SOLVE

- some

- somewhat

- somewhere

- Source

- Space

- special

- speed

- spring

- stable

- standardization

- standardized

- Start-up

- start-ups

- started

- Starting

- State

- States

- steal

- stems

- Still

- Stop

- Story

- strategies

- Strategy

- strength

- String

- striving

- structure

- studied

- succeed

- such

- summer

- supercharged

- superposition

- supposed

- suppression

- survive

- Survived

- swap

- switzerland

- symbols

- system

- Systems

- T

- Take

- takes

- talking

- Technical

- techniques

- Technologies

- Technology

- than

- that

- The

- The Future

- the security

- The State

- their

- Them

- themselves

- theory

- There.

- These

- they

- things

- Think

- this

- this week

- those

- though?

- thought

- thousands

- threat

- three

- Through

- Thus

- ticking

- Tiger

- time

- times

- to

- today

- today’s

- together

- took

- tool

- tools

- top

- top 5

- TOTALLY

- traditional

- transit

- transition

- transitioning

- trapped

- true

- try

- trying

- two

- types

- Uncertain

- under

- understand

- unfortunately

- university

- unknown

- until

- Update

- updated

- urgency

- urges

- usability

- use

- used

- uses

- using

- Variant

- variety

- vector

- vectors

- versions

- very

- vice

- Vice President

- volume

- Vulnerabilities

- Vulnerable

- W

- wait

- want

- wanting

- warning

- was

- Way..

- we

- week

- were

- What

- when

- whereas

- whether

- which

- while

- whole

- widely

- wider

- Wikipedia

- will

- willing

- Winter

- with

- without

- Word

- words

- Work

- workflows

- working

- world

- worry

- worse

- Worst

- worth

- years

- york

- you

- Your

- youtube

- zephyrnet

- Zurich