1Center for Quantum Science and Technology, IIIT Hyderabad, Telangana 500032, India

2Center for Security, Theory and Algorithmic Research, IIIT Hyderabad, Telangana 500032, India

3Center for Computational Natural Sciences and Bioinformatics, IIIT Hyderabad, Telangana 500032, India

4Faculty of Computer Science, Ruhr University Bochum, 44801 Bochum, Germany

Find this paper interesting or want to discuss? Scite or leave a comment on SciRate.

Abstract

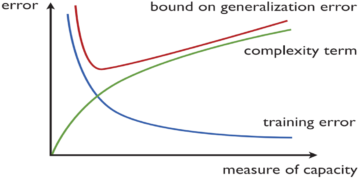

Linear regression is a widely used technique to fit linear models and finds widespread applications across different areas such as machine learning and statistics. In most real-world scenarios, however, linear regression problems are often ill-posed or the underlying model suffers from $overfitting$, leading to erroneous or trivial solutions. This is often dealt with by adding extra constraints, known as regularization. In this paper, we use the frameworks of block-encoding and quantum singular value transformation (QSVT) to design the first quantum algorithms for quantum least squares with general $ell_2$-regularization. These include regularized versions of quantum ordinary least squares, quantum weighted least squares, and quantum generalized least squares. Our quantum algorithms substantially improve upon prior results on $textit{quantum ridge regression}$ (polynomial improvement in the condition number and an exponential improvement in accuracy), which is a particular case of our result.

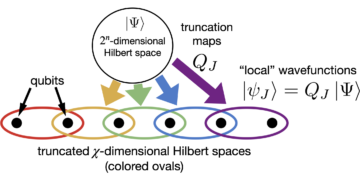

To this end, we assume approximate block-encodings of the underlying matrices as input and use robust QSVT algorithms for various linear algebra operations. In particular, we develop a variable-time quantum algorithm for matrix inversion using QSVT, where we use quantum eigenvalue discrimination as a subroutine instead of gapped phase estimation. This ensures that substantially fewer ancilla qubits are required for this procedure than prior results. Owing to the generality of the block-encoding framework, our algorithms are applicable to a variety of input models and can also be seen as improved and generalized versions of prior results on standard (non-regularized) quantum least squares algorithms.

Popular summary

In this article, we develop the first quantum algorithms for ordinary, weighted, and generalized least squares, with general $ell_2$-regularization. As with most problems in quantum machine learning, our quantum algorithms output a quantum state proportional to the corresponding classical solution of the problem. A particular case of our method is the problem of quantum ridge regression, for which our approach offers an exponential advantage in precision over prior quantum algorithms. Our results can also be seen as improved and generalized versions of prior results on quantum linear regression.

The main technical tools we make use of are robust versions of state-of-the-art techniques from quantum linear algebra, such as quantum singular value transformation and the framework of block-encoding. Additionally, we also develop quantum algorithmic primitives that are of independent interest. For instance, we develop a robust procedure for quantum singular value discrimination: this procedure determines whether the singular values of a matrix is above or below a certain threshold. We show that this subroutine drastically reduces the number of ancilla qubits required to perform variable-time amplitude amplification and, consequently, to solve quantum linear systems with optimal complexity. Our work serves as a concrete example of how techniques from quantum linear algebra can help solve useful problems in machine learning.

► BibTeX data

► References

[1] Scott Aaronson. Read the fine print. Nature Physics, 11(4):291–293, Apr 2015. doi:10.1038/nphys3272.

https://doi.org/10.1038/nphys3272

[2] Dong An and Lin Lin. Quantum linear system solver based on time-optimal adiabatic quantum computing and quantum approximate optimization algorithm. ACM Transactions on Quantum Computing, 3(2), mar 2022. doi:10.1145/3498331.

https://doi.org/10.1145/3498331

[3] Andris Ambainis. Variable time amplitude amplification and quantum algorithms for linear algebra problems. In Christoph Dürr and Thomas Wilke, editors, 29th International Symposium on Theoretical Aspects of Computer Science (STACS 2012), volume 14 of Leibniz International Proceedings in Informatics (LIPIcs), pages 636–647, Dagstuhl, Germany, 2012. Schloss Dagstuhl–Leibniz-Zentrum fuer Informatik. doi:10.4230/LIPIcs.STACS.2012.636.

https://doi.org/10.4230/LIPIcs.STACS.2012.636

[4] Chris M. Bishop. Training with noise is equivalent to tikhonov regularization. Neural Computation, 7(1):108–116, 1995. doi:10.1162/neco.1995.7.1.108.

https://doi.org/10.1162/neco.1995.7.1.108

[5] Yanlin Chen and Ronald de Wolf. Quantum algorithms and lower bounds for linear regression with norm constraints. arXiv preprint, 2021. doi:10.48550/ARXIV.2110.13086.

https://doi.org/10.48550/ARXIV.2110.13086

[6] Shantanav Chakraborty, András Gilyén, and Stacey Jeffery. The power of block-encoded matrix powers: Improved regression techniques via faster hamiltonian simulation. arXiv preprint, 2018. doi:10.48550/arXiv.1804.01973.

https://doi.org/10.48550/arXiv.1804.01973

[7] Shantanav Chakraborty, András Gilyén, and Stacey Jeffery. The Power of Block-Encoded Matrix Powers: Improved Regression Techniques via Faster Hamiltonian Simulation. In Christel Baier, Ioannis Chatzigiannakis, Paola Flocchini, and Stefano Leonardi, editors, 46th International Colloquium on Automata, Languages, and Programming (ICALP 2019), volume 132 of Leibniz International Proceedings in Informatics (LIPIcs), pages 33:1–33:14, Dagstuhl, Germany, 2019. Schloss Dagstuhl–Leibniz-Zentrum fuer Informatik. doi:10.4230/LIPIcs.ICALP.2019.33.

https://doi.org/10.4230/LIPIcs.ICALP.2019.33

[8] Nai-Hui Chia, András Gilyén, Tongyang Li, Han-Hsuan Lin, Ewin Tang, and Chunhao Wang. Sampling-Based Sublinear Low-Rank Matrix Arithmetic Framework for Dequantizing Quantum Machine Learning, page 387–400. Association for Computing Machinery, New York, NY, USA, 2020. doi:10.1145/3357713.3384314.

https://doi.org/10.1145/3357713.3384314

[9] Andrew M. Childs, Robin Kothari, and Rolando D. Somma. Quantum algorithm for systems of linear equations with exponentially improved dependence on precision. SIAM Journal on Computing, 46(6):1920–1950, Jan 2017. doi:10.1137/16M1087072.

https://doi.org/10.1137/16M1087072

[10] Menghan Chen, Chaohua Yu, Gongde Guo, and Song Lin. Faster quantum ridge regression algorithm for prediction. International Journal of Machine Learning and Cybernetics, Apr 2022. doi:10.1007/s13042-022-01526-6.

https://doi.org/10.1007/s13042-022-01526-6

[11] H.W. Engl, M. Hanke, and A. Neubauer. Regularization of Inverse Problems. Mathematics and Its Applications. Springer Netherlands, 1996. URL: https://link.springer.com/book/9780792341574.

https://link.springer.com/book/9780792341574

[12] Gene H. Golub, Per Christian Hansen, and Dianne P. O’Leary. Tikhonov regularization and total least squares. SIAM Journal on Matrix Analysis and Applications, 21(1):185–194, 1999. doi:10.1137/S0895479897326432.

https://doi.org/10.1137/S0895479897326432

[13] András Gilyén, Yuan Su, Guang Hao Low, and Nathan Wiebe. Quantum singular value transformation and beyond: exponential improvements for quantum matrix arithmetics. arXiv preprint, jun 2018. doi:10.48550/arXiv.1806.01838.

https://doi.org/10.48550/arXiv.1806.01838

[14] András Gilyén, Yuan Su, Guang Hao Low, and Nathan Wiebe. Quantum singular value transformation and beyond: Exponential improvements for quantum matrix arithmetics. In Proceedings of the 51st Annual ACM SIGACT Symposium on Theory of Computing, STOC 2019, page 193–204, New York, NY, USA, 2019. Association for Computing Machinery. doi:10.1145/3313276.3316366.

https://doi.org/10.1145/3313276.3316366

[15] András Gilyén, Zhao Song, and Ewin Tang. An improved quantum-inspired algorithm for linear regression. Quantum, 6:754, June 2022. doi:10.22331/q-2022-06-30-754.

https://doi.org/10.22331/q-2022-06-30-754

[16] Yimin Ge, Jordi Tura, and J. Ignacio Cirac. Faster ground state preparation and high-precision ground energy estimation with fewer qubits. Journal of Mathematical Physics, 60(2):022202, 2019. doi:10.1063/1.5027484.

https://doi.org/10.1063/1.5027484

[17] William J. Hemmerle. An explicit solution for generalized ridge regression. Technometrics, 17(3):309–314, 1975. URL: http://www.jstor.org/stable/1268066, doi:10.2307/1268066.

https://doi.org/10.2307/1268066

http://www.jstor.org/stable/1268066

[18] Martin Hanke and Per Christian Hansen. Regularization methods for large-scale problems. Surv. Math. Ind, 3(4):253–315, 1993.

[19] Aram W. Harrow, Avinatan Hassidim, and Seth Lloyd. Quantum algorithm for linear systems of equations. Physical Review Letters, 103(15), Oct 2009. doi:10.1103/physrevlett.103.150502.

https://doi.org/10.1103/physrevlett.103.150502

[20] Arthur E. Hoerl and Robert W. Kennard. Ridge regression: Biased estimation for nonorthogonal problems. Technometrics, 42(1):80–86, 2000. URL: http://www.jstor.org/stable/1271436, doi:10.2307/1271436.

https://doi.org/10.2307/1271436

http://www.jstor.org/stable/1271436

[21] Julia Kempe, Alexei Kitaev, and Oded Regev. The complexity of the local hamiltonian problem. SIAM Journal on Computing, 35(5):1070–1097, 2006. doi:10.1137/S0097539704445226.

https://doi.org/10.1137/S0097539704445226

[22] Iordanis Kerenidis and Anupam Prakash. Quantum Recommendation Systems. In 8th Innovations in Theoretical Computer Science Conference (ITCS 2017), volume 67 of Leibniz International Proceedings in Informatics (LIPIcs), pages 49:1–49:21, Dagstuhl, Germany, 2017. Schloss Dagstuhl–Leibniz-Zentrum fuer Informatik. doi:10.4230/LIPIcs.ITCS.2017.49.

https://doi.org/10.4230/LIPIcs.ITCS.2017.49

[23] Iordanis Kerenidis and Anupam Prakash. Quantum gradient descent for linear systems and least squares. Phys. Rev. A, 101:022316, Feb 2020. doi:10.1103/PhysRevA.101.022316.

https://doi.org/10.1103/PhysRevA.101.022316

[24] Guang Hao Low and Isaac L Chuang. Hamiltonian simulation by uniform spectral amplification. arXiv:1707.05391, 2017. doi:10.48550/ARXIV.1707.05391.

https://doi.org/10.48550/ARXIV.1707.05391

arXiv:1707.05391

[25] Guang Hao Low and Isaac L. Chuang. Optimal hamiltonian simulation by quantum signal processing. Phys. Rev. Lett., 118:010501, Jan 2017. doi:10.1103/PhysRevLett.118.010501.

https://doi.org/10.1103/PhysRevLett.118.010501

[26] Guang Hao Low and Isaac L. Chuang. Hamiltonian simulation by qubitization. Quantum, 3:163, Jul 2019. doi:10.22331/q-2019-07-12-163.

https://doi.org/10.22331/q-2019-07-12-163

[27] Seth Lloyd, Masoud Mohseni, and Patrick Rebentrost. Quantum principal component analysis. Nature Physics, 10(9):631–633, Sep 2014. doi:10.1038/nphys3029.

https://doi.org/10.1038/nphys3029

[28] Guang Hao Low. Quantum signal processing by single-qubit dynamics. PhD thesis, Massachusetts Institute of Technology, 2017. URL: http://hdl.handle.net/1721.1/115025.

http://hdl.handle.net/1721.1/115025

[29] Lin Lin and Yu Tong. Near-optimal ground state preparation. Quantum, 4:372, December 2020. doi:10.22331/q-2020-12-14-372.

https://doi.org/10.22331/q-2020-12-14-372

[30] Lin Lin and Yu Tong. Optimal polynomial based quantum eigenstate filtering with application to solving quantum linear systems. Quantum, 4:361, November 2020. doi:10.22331/q-2020-11-11-361.

https://doi.org/10.22331/q-2020-11-11-361

[31] Guang Hao Low and Nathan Wiebe. Hamiltonian simulation in the interaction picture. arXiv preprint, 2018. doi:10.48550/arXiv.1805.00675.

https://doi.org/10.48550/arXiv.1805.00675

[32] Guang Hao Low, Theodore J. Yoder, and Isaac L. Chuang. Methodology of resonant equiangular composite quantum gates. Phys. Rev. X, 6:041067, Dec 2016. doi:10.1103/PhysRevX.6.041067.

https://doi.org/10.1103/PhysRevX.6.041067

[33] Donald W. Marquardt. Generalized inverses, ridge regression, biased linear estimation, and nonlinear estimation. Technometrics, 12(3):591–612, 1970. URL: http://www.jstor.org/stable/1267205, doi:10.2307/1267205.

https://doi.org/10.2307/1267205

http://www.jstor.org/stable/1267205

[34] John M. Martyn, Zane M. Rossi, Andrew K. Tan, and Isaac L. Chuang. Grand unification of quantum algorithms. PRX Quantum, 2:040203, Dec 2021. doi:10.1103/PRXQuantum.2.040203.

https://doi.org/10.1103/PRXQuantum.2.040203

[35] Kevin P Murphy. Machine learning: a probabilistic perspective. MIT press, 2012. URL: https://mitpress.mit.edu/books/machine-learning-1.

https://mitpress.mit.edu/books/machine-learning-1

[36] Anupam Prakash. Quantum Algorithms for Linear Algebra and Machine Learning. PhD thesis, EECS Department, University of California, Berkeley, Dec 2014. URL: http://www2.eecs.berkeley.edu/Pubs/TechRpts/2014/EECS-2014-211.html.

http://www2.eecs.berkeley.edu/Pubs/TechRpts/2014/EECS-2014-211.html

[37] Zane M. Rossi and Isaac L. Chuang. Multivariable quantum signal processing (M-QSP): prophecies of the two-headed oracle. Quantum, 6:811, September 2022. doi:10.22331/q-2022-09-20-811.

https://doi.org/10.22331/q-2022-09-20-811

[38] Patrick Rebentrost, Masoud Mohseni, and Seth Lloyd. Quantum support vector machine for big data classification. Phys. Rev. Lett., 113:130503, Sep 2014. doi:10.1103/PhysRevLett.113.130503.

https://doi.org/10.1103/PhysRevLett.113.130503

[39] Maria Schuld, Ilya Sinayskiy, and Francesco Petruccione. Prediction by linear regression on a quantum computer. Phys. Rev. A, 94:022342, Aug 2016. doi:10.1103/PhysRevA.94.022342.

https://doi.org/10.1103/PhysRevA.94.022342

[40] Changpeng Shao and Hua Xiang. Quantum regularized least squares solver with parameter estimate. Quantum Information Processing, 19(4):113, Feb 2020. doi:10.1007/s11128-020-2615-9.

https://doi.org/10.1007/s11128-020-2615-9

[41] Ewin Tang. A quantum-inspired classical algorithm for recommendation systems. In Proceedings of the 51st Annual ACM SIGACT Symposium on Theory of Computing, STOC 2019, page 217–228, New York, NY, USA, 2019. Association for Computing Machinery. doi:10.1145/3313276.3316310.

https://doi.org/10.1145/3313276.3316310

[42] Hrishikesh D. Vinod. A survey of ridge regression and related techniques for improvements over ordinary least squares. The Review of Economics and Statistics, 60(1):121–131, 1978. URL: http://www.jstor.org/stable/1924340, doi:10.2307/1924340.

https://doi.org/10.2307/1924340

http://www.jstor.org/stable/1924340

[43] Wessel N. van Wieringen. Lecture notes on ridge regression, 2015. doi:10.48550/ARXIV.1509.09169.

https://doi.org/10.48550/ARXIV.1509.09169

[44] Guoming Wang. Quantum algorithm for linear regression. Phys. Rev. A, 96:012335, Jul 2017. doi:10.1103/PhysRevA.96.012335.

https://doi.org/10.1103/PhysRevA.96.012335

[45] Nathan Wiebe, Daniel Braun, and Seth Lloyd. Quantum algorithm for data fitting. Phys. Rev. Lett., 109:050505, Aug 2012. doi:10.1103/PhysRevLett.109.050505.

https://doi.org/10.1103/PhysRevLett.109.050505

[46] Chao-Hua Yu, Fei Gao, and Qiao-Yan Wen. An improved quantum algorithm for ridge regression. IEEE Transactions on Knowledge and Data Engineering, 33(3):858–866, 2021. doi:10.1109/TKDE.2019.2937491.

https://doi.org/10.1109/TKDE.2019.2937491

Cited by

[1] Yanlin Chen and Ronald de Wolf, “Quantum Algorithms and Lower Bounds for Linear Regression with Norm Constraints”, arXiv:2110.13086, (2021).

[2] Tong Ning, Youlong Yang, and Zhenye Du, “Quantum kernel logistic regression based Newton method”, Physica A Statistical Mechanics and its Applications 611, 128454 (2023).

[3] Changpeng Shao, “An improved quantum algorithm for low-rank rigid linear regressions with vector solution outputs”, arXiv:2301.06107, (2023).

[4] Shantanav Chakraborty, “Implementing Linear Combination of Unitaries on Intermediate-term Quantum Computers”, arXiv:2302.13555, (2023).

The above citations are from SAO/NASA ADS (last updated successfully 2023-04-30 00:25:12). The list may be incomplete as not all publishers provide suitable and complete citation data.

On Crossref’s cited-by service no data on citing works was found (last attempt 2023-04-30 00:25:10).

This Paper is published in Quantum under the Creative Commons Attribution 4.0 International (CC BY 4.0) license. Copyright remains with the original copyright holders such as the authors or their institutions.

- SEO Powered Content & PR Distribution. Get Amplified Today.

- PlatoAiStream. Web3 Data Intelligence. Knowledge Amplified. Access Here.

- Minting the Future w Adryenn Ashley. Access Here.

- Source: https://quantum-journal.org/papers/q-2023-04-27-988/

- :is

- :not

- :where

- ][p

- 1

- 10

- 11

- 12

- 13

- 14

- 1996

- 1999

- 20

- 2012

- 2014

- 2015

- 2016

- 2017

- 2018

- 2019

- 2020

- 2021

- 2022

- 2023

- 22

- 23

- 24

- 26

- 27

- 28

- 30

- 39

- 49

- 67

- 7

- 8

- 9

- a

- above

- ABSTRACT

- access

- accuracy

- ACM

- across

- adding

- Additionally

- ADvantage

- affiliations

- algorithm

- algorithmic

- algorithms

- All

- also

- Amplification

- an

- analysis

- and

- Andrew

- annual

- applicable

- Application

- applications

- approach

- apr

- ARE

- areas

- Arthur

- article

- AS

- aspects

- Association

- Aug

- author

- authors

- based

- BE

- below

- Berkeley

- BEST

- Beyond

- Big

- Big Data

- Break

- by

- california

- CAN

- case

- certain

- Changpeng

- chen

- Chris

- classification

- combination

- comment

- Commons

- complete

- complexity

- component

- computation

- computer

- computer science

- computers

- computing

- condition

- Conference

- Consequently

- constraints

- copyright

- Corresponding

- Daniel

- data

- data points

- December

- Den

- Department

- dependence

- Design

- determines

- develop

- different

- Discrimination

- discuss

- donald

- drastically

- dynamics

- e

- Economics

- end

- energy

- Engineering

- ensures

- equations

- Equivalent

- Errors

- estimate

- Ether (ETH)

- example

- exponential

- exponentially

- extra

- faster

- Feb

- fei

- fewer

- filtering

- finds

- fine

- First

- fit

- fitting

- For

- found

- Framework

- frameworks

- frequent

- from

- GAO

- Gates

- ge

- General

- Germany

- Ground

- handle

- harvard

- Have

- help

- holders

- How

- However

- HTML

- http

- HTTPS

- IEEE

- implementing

- improve

- improved

- improvement

- improvements

- in

- include

- independent

- information

- innovations

- input

- instance

- instead

- Institute

- institutions

- interaction

- interest

- interesting

- International

- into

- inversion

- ITS

- Jan

- JavaScript

- John

- journal

- june

- knowledge

- known

- Languages

- large

- large-scale

- Last

- leading

- learning

- Leave

- Lecture

- License

- List

- literature

- local

- Low

- machine

- machine learning

- machinery

- Main

- make

- Martin

- massachusetts

- Massachusetts Institute of technology

- math

- mathematical

- mathematics

- Matrix

- May..

- mechanics

- method

- Methodology

- methods

- minimizing

- MIT

- model

- models

- Month

- most

- murphy

- Natural

- Nature

- Netherlands

- Neural

- New

- New York

- Newton

- no

- Noise

- Notes

- November

- number

- NY

- Oct

- of

- Offers

- often

- on

- ONE

- open

- Operations

- optimal

- optimization

- or

- oracle

- ordinary

- original

- our

- output

- over

- page

- Paper

- parameter

- particular

- perform

- perspective

- phase

- physical

- Physics

- picture

- plato

- Plato Data Intelligence

- PlatoData

- points

- Popular

- power

- powers

- Practical

- Prakash

- Precision

- prediction

- press

- Principal

- Prior

- Problem

- problems

- Proceedings

- processing

- Programming

- provide

- published

- publisher

- publishers

- Quantum

- quantum algorithms

- Quantum Computer

- quantum computers

- quantum computing

- quantum information

- quantum machine learning

- qubits

- ranging

- Read

- real world

- Recommendation

- reduces

- references

- regression

- related

- remains

- required

- research

- result

- Results

- review

- rigid

- ROBERT

- Robin

- robust

- runs

- s

- scenarios

- Science

- Science and Technology

- SCIENCES

- scott aaronson

- security

- seen

- September

- Series

- serves

- set

- show

- siam

- Signal

- simulation

- singular

- solution

- Solutions

- SOLVE

- Solving

- Spectral

- Squared

- squares

- STACS

- standard

- State

- state-of-the-art

- statistical

- statistics

- Successfully

- such

- Suffers

- suitable

- support

- Survey

- Symposium

- system

- Systems

- Technical

- techniques

- Technology

- than

- that

- The

- their

- theoretical

- These

- this

- threshold

- time

- Title

- to

- tools

- Total

- Training

- Transactions

- Transformation

- under

- underlying

- university

- University of California

- updated

- upon

- URL

- USA

- use

- used

- using

- value

- Values

- variety

- various

- via

- volume

- W

- want

- was

- we

- whether

- which

- while

- wide

- widely

- widespread

- with

- Wolf

- Work

- works

- X

- year

- york

- Yuan

- zephyrnet

- Zhao