Introduction

As Artificial Intelligence (AI) grows continuously, the demand for faster and more efficient computing power is increasing. Machine learning (ML) models can be computationally intensive, and training the models can take longer. However, by using GPU parallel processing capabilities, it is possible to accelerate the training process significantly. Data scientists can iterate faster, experiment with more models, and build better-performing models in less time.

There are several libraries available to use. Today we will learn about RAPIDS, an easy solution to use our GPU to accelerate ML models without any knowledge of GPU programming.

Learning Objectives

In this article, we will learn about:

- A high-level overview of how RAPIDS.ai works

- Libraries found in RAPIDS.ai

- Using these libraries

- Installation and System Requirements

This article was published as a part of the Data Science Blogathon.

Table of contents

RAPIDS.AI

RAPIDS is a suite of open-source software libraries and APIs for executing data science pipelines entirely on GPUs. RAPIDS provides exceptional performance and speed with familiar APIs that match the most popular PyData libraries. It is developed on NVIDIA CUDA and Apache Arrow, which is the reason behind its unparalleled performance.

How does RAPIDS.AI work?

RAPIDS uses GPU-accelerated machine learning to speed up data science and analytics workflows. It has a GPU-optimized core data frame that helps build databases and machine learning applications and is designed to be similar to Python. RAPIDS offers a collection of libraries for running a data science pipeline entirely on GPUs. It was created in 2017 by the GPU Open Analytics Initiative (GoAI) and partners in the machine learning community to accelerate end-to-end data science and analytics pipelines on GPUs using a GPU Dataframe based on the Apache Arrow columnar memory platform. RAPIDS also includes a Dataframe API that integrates with machine learning algorithms.

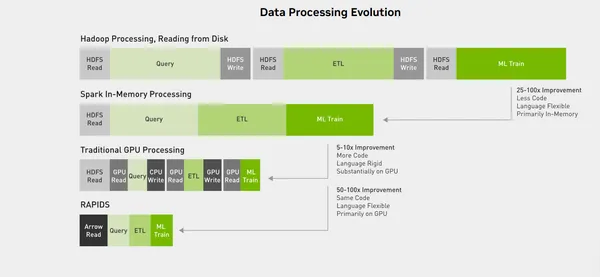

Faster Data Access with Less Data Movement

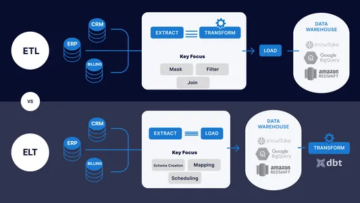

Hadoop had limitations in handling complex data pipelines efficiently. Apache Spark addressed this issue by keeping all data in memory, allowing for more flexible and complex data pipelines. However, this introduced new bottlenecks, and analyzing even a few hundred gigabytes of data could take a long time on Spark clusters with hundreds of CPU nodes. To fully realize the potential of data science, GPUs must be at the core of data center design, including five elements: computing, networking, storage, deployment, and software. In general, end-to-end data science workflows on GPUs are 10 times faster than on CPUs.

Libraries

We will learn about 3 libraries in the RAPIDS ecosystem.

- cuDF

- cuML

- cuGraph

cuDF: A Faster Pandas Alternative

cuDF is a GPU DataFrame library alternative to the pandas’ data frame. It is built on the Apache Arrow columnar memory format and offers a similar API to pandas for manipulating data on the GPU. cuDF can be used to speed up pandas’ workflows by using the parallel computation capabilities of GPUs. It can be used for tasks such as loading, joining, aggregating, filtering, and manipulating data.

cuDF is an easy alternative to Pandas DataFrame in terms of programming also.

import cudf # Create a cuDF DataFrame

df = cudf.DataFrame({'a': [1, 2, 3], 'b': [4, 5, 6]}) # Perform some basic operations

df['c'] = df['a'] + df['b']

df = df.query('c > 4') # Convert to a pandas DataFrame

pdf = df.to_pandas()

Using cuDF is also easy, as you must replace your Pandas DataFrame object with a cuDF object. To use it, we just have to replace “pandas” with “cudf” and that’s it. Here’s an example of how you might use cuDF to create a DataFrame object and perform some operations on it:

cuML: A Faster Scikit Learn Alternative

cuML is a collection of fast machine learning algorithms accelerated by GPUs and designed for data science and analytical tasks. It offers an API similar to sci-kit-learn’s, allowing users to use the familiar fit-predict-transform approach without knowing how to program GPUs.

Like cuDF, using cuML is also very easy for anyone to understand. A code snippet is provided for example.

import cudf

from cuml import LinearRegression # Create some example data

X = cudf.DataFrame({'x': [1, 2, 3, 4, 5]})

y = cudf.Series([2, 4, 6, 8, 10]) # Initialize and fit the model

model = LinearRegression()

model.fit(X, y) # Make predictions

predictions = model.predict(X)

print(predictions)You can see I have replaced the “sklearn” with “cuml” and “pandas” with “cudf” and that’s it. Now this code will use GPU, and the operations will be much faster.

cuGRAPH: A Faster Networkx alternative

cuGraph is a library of graph algorithms that seamlessly integrates into the RAPIDS data science ecosystem. It allows us to easily call graph algorithms using data stored in GPU DataFrames, NetworkX Graphs, or even CuPy or SciPy sparse Matrices. It offers scalable performance for 30+ standard algorithms, such as PageRank, breadth-first search, and uniform neighbor sampling.

Like cuDf and cuML, cuGraph is also very easy to use.

import cugraph

import cudf # Create a DataFrame with edge information

edge_data = cudf.DataFrame({ 'src': [0, 1, 2, 2, 3], 'dst': [1, 2, 0, 3, 0]

}) # Create a Graph using the edge data

G = cugraph.Graph()

G.from_cudf_edgelist(edge_data, source='src', destination='dst') # Compute the PageRank of the graph

pagerank_df = cugraph.pagerank(G) # Print the result

print(pagerank_df)#Yes, using cuGraph is this simple. Just replace “networkx” with “cugraph” and that’s all.

Requirements

Now the best part of using RAPIDS is, you don’t need to own a professional GPU. You can use your gaming or notebook GPU if it matches the system requirements.

To use RAPIDS, it is necessary to have the minimum system requirements.

Installation

Now, coming to installation, please check the system requirements, and if it matches, you are good to go.

Go to this link, select your system, choose your configuration, and install it.

Download link: https://docs.rapids.ai/install

Performance Benchmarks

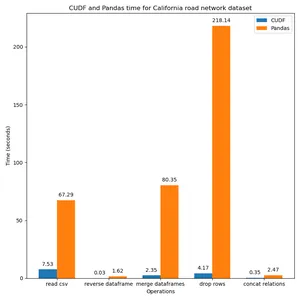

The below picture contains a performance benchmark of cuDF and Pandas for Data Loading and Manipulation of the “California road network dataset.” You can check out more about the code from this website: https://arshovon.com/blog/cudf-vs-df.

You can check all the benchmarks by visiting the official website: https://rapids.ai.

Experience Rapids in Online Notebooks

Rapids has provided several online notebooks to check out these libraries. Go to https://rapids.ai to check all these notebooks.

Advantages

Some benefits of RAPIDS are :

- Minimal code changes

- Acceleration using GPU

- Faster model deployment

- Iterations to increase machine learning model accuracy

- Improve data science productivity

Conclusion

RAPIDS is a collection of open-source software libraries and APIs that allows you to execute end-to-end data science and analytics pipelines entirely on NVIDIA GPUs using familiar PyData APIs. It can be used without any hassles or need for GPU programming, making it much easier and faster.

Here is a summary of what we have learned so far:

- How can we significantly use our GPU to accelerate ML models without GPU programming?

- It is a perfect alternative to various widely available libraries like Pandas, Scikit-Learn, etc.

- To use RAPIDS.ai, we just have to change some minimal code.

- It is faster than traditional CPU-based ML model training.

- How to install RAPIDS.ai in our system.

For any questions or feedback, you can email me at: [email protected]

Frequently Asked Questions

A. RAPIDS.ai is a suite of open-source software libraries that enables end-to-end data science and analytics pipelines to be executed entirely on NVIDIA GPUs using familiar PyData APIs.

A. RAPIDS.ai offers a collection of libraries for running a data science pipeline entirely on GPUs. These libraries include cuDF for DataFrame processing, cuML for machine learning, cuGraph for graph processing, cuSpatial for spatial analytics, and more.

A. RAPIDS.ai offers significant speed improvements over traditional CPU-based data science tools by leveraging the parallel computation capabilities of GPUs. It also offers seamless integration with minimal code changes and familiar APIs that match the most popular PyData libraries.

A. Yes, It is very easy and very similar to other libraries. You just need to make some minimal changes in your Python code.

A. No, As AMD GPU doesn’t have CUDA cores, we can’t use Rapids.AI in AMD GPU.

The media shown in this article is not owned by Analytics Vidhya and is used at the Author’s discretion.

Related

- SEO Powered Content & PR Distribution. Get Amplified Today.

- PlatoData.Network Vertical Generative Ai. Empower Yourself. Access Here.

- PlatoAiStream. Web3 Intelligence. Knowledge Amplified. Access Here.

- PlatoESG. Automotive / EVs, Carbon, CleanTech, Energy, Environment, Solar, Waste Management. Access Here.

- BlockOffsets. Modernizing Environmental Offset Ownership. Access Here.

- Source: https://www.analyticsvidhya.com/blog/2023/07/rapids-use-gpu-to-accelerate-ml-models-easily/

- :has

- :is

- :not

- $UP

- 1

- 10

- 13

- 16

- 2017

- 8

- 9

- a

- About

- accelerate

- accelerated

- access

- AI

- algorithms

- All

- Allowing

- allows

- also

- alternative

- AMD

- an

- Analytical

- analytics

- Analytics Vidhya

- analyzing

- and

- any

- anyone

- Apache

- Apache Spark

- api

- APIs

- applications

- approach

- ARE

- article

- AS

- asked

- At

- available

- based

- basic

- BE

- behind

- below

- Benchmark

- benchmarks

- benefits

- BEST

- bottlenecks

- build

- built

- by

- call

- CAN

- capabilities

- Center

- change

- Changes

- check

- Choose

- code

- collection

- COM

- coming

- community

- compare

- complex

- computation

- Compute

- computing

- computing power

- Configuration

- contains

- continuously

- convert

- Core

- could

- CPU

- create

- created

- data

- data access

- Data Center

- data science

- databases

- Demand

- deployment

- Design

- designed

- developed

- discretion

- does

- Doesn’t

- Dont

- easier

- easily

- easy

- ecosystem

- Edge

- efficient

- efficiently

- elements

- enables

- end-to-end

- entirely

- etc

- Even

- example

- exceptional

- execute

- executed

- executing

- experiment

- familiar

- far

- FAST

- faster

- Features

- feedback

- few

- filtering

- fit

- five

- flexible

- For

- format

- found

- FRAME

- from

- fully

- gaming

- General

- Go

- good

- GPU

- GPUs

- graph

- graphs

- Grows

- had

- Handling

- Have

- helps

- high-level

- How

- How To

- However

- http

- HTTPS

- hundred

- Hundreds

- i

- if

- import

- improvements

- in

- include

- includes

- Including

- Increase

- increasing

- information

- Initiative

- install

- installation

- Integrates

- integration

- into

- introduced

- issue

- IT

- ITS

- joining

- just

- keeping

- Knowing

- knowledge

- LEARN

- learned

- learning

- less

- leveraging

- libraries

- Library

- like

- limitations

- LINK

- loading

- Long

- long time

- longer

- machine

- machine learning

- make

- Making

- manipulating

- Manipulation

- Match

- me

- Media

- Memory

- might

- minimal

- minimum

- ML

- model

- models

- more

- more efficient

- most

- Most Popular

- much

- must

- necessary

- Need

- network

- networking

- New

- no

- nodes

- notebook

- notebooks

- now

- Nvidia

- object

- of

- Offers

- official

- Official Website

- on

- online

- open

- open source

- Open-source Software

- Operations

- or

- Other

- our

- out

- over

- overview

- own

- owned

- pandas

- Parallel

- part

- partners

- perfect

- perform

- performance

- picture

- pipeline

- platform

- plato

- Plato Data Intelligence

- PlatoData

- please

- Popular

- possible

- potential

- power

- Predictions

- process

- processing

- professional

- Program

- Programming

- protected

- provided

- provides

- published

- Python

- Questions

- realize

- reason

- replace

- replaced

- Requirements

- result

- road

- running

- scalable

- Science

- scientists

- scikit-learn

- seamless

- seamlessly

- Search

- see

- several

- shown

- significant

- significantly

- similar

- Simple

- So

- so Far

- Software

- solution

- some

- Source

- Spark

- Spatial

- speed

- standard

- storage

- stored

- such

- suite

- SUMMARY

- system

- Take

- tasks

- terms

- than

- that

- The

- The Graph

- These

- this

- time

- times

- to

- today

- tools

- traditional

- Training

- understand

- unparalleled

- us

- use

- used

- users

- uses

- using

- various

- very

- was

- we

- webp

- Website

- What

- What is

- widely

- will

- with

- without

- Work

- workflows

- X

- yes

- you

- Your

- zephyrnet