Introduction

Satellite imagery has become an indispensable asset in our modern world, offering invaluable insights into our environment, climate, and land usage. These images serve many purposes, from disaster management and agriculture to urban planning and environmental monitoring. As the volume of satellite imagery continues to grow, there is an increasing need for efficient and precise methods to process and categorize these images.

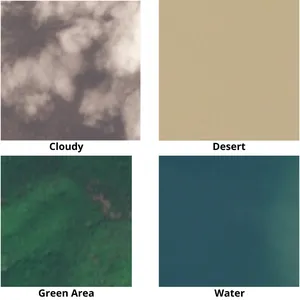

In this article, we embark on a journey into satellite image classification, leveraging cutting-edge deep learning models known as Vision Transformers (ViTs). What makes this exploration particularly intriguing is the dataset at our disposal: 5631 satellite images, meticulously sorted into four distinct categories—cloudy, desert, green area, and water. These categories encompass various environmental conditions and scenarios, making our dataset a valuable resource for training and testing our model.

Learning Outcomes

- Understanding Vision Transformers and their significance in satellite image classification.

- Exploring the advantages of ViTs, including their self-attention mechanisms that excel at capturing complex image patterns.

- Real-world applications of satellite image classification, demonstrating its benefits across diverse domains.

This article was published as a part of the Data Science Blogathon.

Table of contents

Satellite Imagery: A Valuable Resource

Satellite imagery is a powerful tool that helps us understand and manage our planet. It provides a unique vantage point, offering precise and consistent snapshots of Earth’s surface. This rich data source profoundly impacts our lives and the environment. In environmental monitoring, satellite imagery contributes to our understanding of climate change. These images enable scientists to track glacier changes, deforestation, and weather patterns. Our chosen dataset mirrors the critical role of satellite imagery, offering a diverse array of environmental conditions that align with real-world climate challenges.

Additionally, satellite imagery plays a pivotal role in urban planning and development. It assists city planners in assessing urban sprawl, infrastructure expansion, and land use changes over time. By working with a dataset that mirrors urban landscapes, our ViT-based model gains insights into the complexities of urban growth and land management. Furthermore, satellite imagery becomes indispensable for rapid response and recovery efforts in natural disasters. Whether assessing flood damage, monitoring forest fires, or tracking hurricanes, satellite images provide critical information for disaster management agencies. Our curated dataset represents a collection of pictures and the real-world challenges and opportunities that satellite imagery presents. Through our exploration of Vision Transformers, we aim to harness the full potential of this valuable resource for the betterment of our world.

The Rise of Vision Transformers

Convolutional Neural Networks (CNNs) have long dominated image classification in the dynamic field of computer vision. However, a transformative evolution is underway with the emergence of Vision Transformers (ViTs). The rise of ViTs signifies a significant milestone in the quest for more effective and versatile image analysis. What sets Vision Transformers apart is their ability to decode images in a manner closely resembling human perception. Unlike traditional CNNs, which rely on fixed grid structures, ViTs use self-attention mechanisms inspired by the human visual system. This ingenious adaptation enables ViTs to capture intricate patterns, long-range dependencies, and complex relationships within images, akin to our eyes focusing on relevant image regions during visual analysis.

This breakthrough in self-attention has made ViTs game-changers in image classification. Their capacity to recognize nuanced features and contextual information within images has opened new possibilities across various domains. From satellite image classification to medical image analysis, ViTs have showcased their adaptability and prowess. As we delve further into the era of Vision Transformers, we uncover exciting opportunities to advance our understanding of the visual world. Their ability to decipher complex images with human-like attention to detail promises a bright future in computer vision that will unveil previously hidden insights and push the boundaries of what’s achievable in image classification tasks.

Data Collection and Preparation

Our dataset comprises 5631 images, each meticulously categorized into four distinct classes: cloudy, desert, green area, and water. These categories encompass diverse environmental conditions, from the green regions’ serene beauty to deserts’ harsh aridity. Before training our ViT model, we took great care in preprocessing this dataset, ensuring uniformity in image resolution and normalizing pixel values. A well-prepared dataset serves as the foundation of any successful machine-learning project.

import numpy as np

import pandas as pd

from matplotlib import pyplot as plt

from sklearn.model_selection import train_test_split #import csv

data_dir = '/kaggle/input/satellite-image-classification/images'

dataset = pd.read_csv('/kaggle/input/satellite-image-classification/data.csv', dtype = 'str') # Ensure you have labels for each image

train_data, test_data = train_test_split(dataset, test_size=0.2, random_state=42)

train_data, val_data = train_test_split(train_data, test_size=0.1, random_state=42)

Vision Transformer Architecture

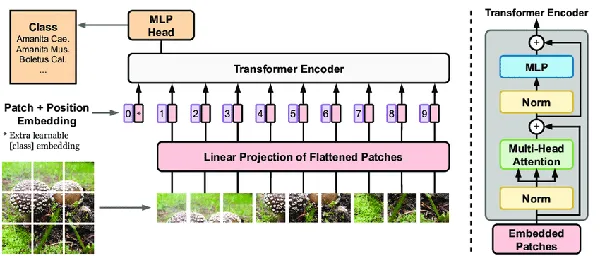

The Vision Transformer (ViT) architecture represents a groundbreaking departure from traditional Convolutional Neural Networks (CNNs) in computer vision. At its core, a ViT model consists of several key components, each contributing to its unique ability to effectively process and classify satellite images.

Input Embeddings

The ViT begins with input embeddings, where each input image patch is linearly embedded into a lower-dimensional representation. These embeddings enable the model to analyze smaller image regions systematically. The choice of patch size and embedding dimension is critical and often depends on the specific task and dataset.

Positional Encodings

Like all images, satellite images have a spatial layout with essential information. To preserve this spatial information, positional encodings are added to the embeddings. These encodings inform the model about the relative positions of different patches, ensuring that spatial relationships are considered during processing.

Transformer Encoder Layers

The core of the ViT architecture consists of multiple Transformer encoder layers. These layers capture intricate patterns and relationships within the input data. Each encoder layer consists of two sub-layers: the Multi-Head Self-Attention Mechanism and the Feed-Forward Neural Network. These sub-layers work together to process and refine the embeddings, allowing the model to focus on relevant image regions and extract hierarchical features.

Multi-Head Self-Attention Mechanism

This component enables the model to weigh the importance of different patches in the context of the entire image. It learns to attend to relevant patches while suppressing noise and irrelevant information. Multiple attention heads allow the model to capture different relationships and patterns.

Feed-Forward Neural Network

A feed-forward neural network further refines the representations following attention mechanisms. It consists of fully connected layers and activation functions, allowing the model to transform the embeddings into more expressive features suitable for classification.

Output Classification Head

There is an output classification head at the end of the ViT architecture. This head typically includes one or more fully connected layers with softmax activation. It maps the learned features to class probabilities, making predictions about the input image’s category.

Fine-Tuning on Satellite Data

With our dataset and ViT architecture in place, we fine-tuned our model. This process involved exposing our ViT to our labeled satellite images, allowing it to learn and adapt to the unique characteristics of each class. As the model fine-tuned itself, it became increasingly adept at distinguishing between cloudy skies, expansive deserts, lush green areas, and serene water bodies.

Data Augmentation Techniques

We implemented data augmentation techniques to boost our model’s ability to generalize to real-world variations in satellite imagery. These transformations, such as rotation, flipping, and zooming, helped our model become more robust and capable of handling various image conditions.

# Define data augmentation techniques

data_augmentation = keras.Sequential([ layers.experimental.preprocessing.RandomFlip("horizontal"), layers.experimental.preprocessing.RandomRotation(0.1), layers.experimental.preprocessing.RandomZoom(0.1),

]) # Create a Vision Transformer (ViT) model

def create_vit_model(input_shape, num_classes): inputs = keras.Input(shape=input_shape) # Apply data augmentation to inputs augmented = data_augmentation(inputs) # Use a pre-trained ViT model (e.g., from TensorFlow Hub) as a base # Replace 'tfhub.dev/path/to/vit_model' with the actual URL vit_model = keras.applications.EfficientNetB0( weights='imagenet', include_top=False, input_tensor=augmented, input_shape=input_shape, ) # Fine-tune the ViT model for layer in vit_model.layers: layer.trainable = True # Add classification head x = layers.GlobalAveragePooling2D()(vit_model.output) x = layers.Dense(512, activation='relu')(x) outputs = layers.Dense(num_classes, activation='softmax')(x) # Create and compile the final model model = keras.Model(inputs, outputs) model.compile(optimizer='adam', loss='categorical_crossentropy', metrics=['accuracy']) return model # Initialize the ViT model

input_shape = (224, 224, 3) # Adapt to your image size

num_classes = 4 # Cloudy, Desert, Green Area, Water

vit_model = create_vit_model(input_shape, num_classes) # Train the model

history = vit_model.fit(train_data, epochs=10, validation_data=val_data)

#import csvEvaluating Model Performance

Our ViT model’s performance was rigorously evaluated on a separate test dataset. The results were promising, with high accuracy, precision, and recall scores. This level of accuracy is pivotal for applications like land use mapping, environmental monitoring, and disaster response. Our model’s proficiency in classifying images into cloudy, desert, green area, and water categories underscores its potential in real-world scenarios.

# Evaluate the model on the test set

test_loss, test_acc = vit_model.evaluate(test_data) # Visualize training history (e.g., loss and accuracy over epochs)

plt.plot(history.history['accuracy'], label='accuracy')

plt.plot(history.history['val_accuracy'], label = 'val_accuracy')

plt.xlabel('Epoch')

plt.ylabel('Accuracy')

plt.ylim([0, 1])

plt.legend(loc='lower right')

plt.show() # Make predictions on new satellite images

# You can use vit_model.predict() to classify images into one of the four categories

#import csvPractical Applications

The practical applications of accurate satellite image classification are multifaceted and offer transformative solutions across diverse domains.

- In agriculture, precisely identifying and classifying crop types from satellite imagery empowers farmers with critical insights into crop health, enabling targeted interventions for disease control and optimizing resource allocation. Furthermore, satellite-based yield prediction models facilitate efficient harvest planning and food security assessments, which are crucial for global agricultural sustainability.

- Early warning systems heavily rely on rapidly classifying satellite images in disaster management. Identifying disaster-affected areas, assessing damage, and strategizing relief efforts become more effective and time-sensitive, ultimately saving lives and minimizing destruction.

- Urban planners harness the power of satellite image classification for comprehensive land use mapping. This aids in optimizing urban development, zoning, and infrastructure planning, fostering sustainable and resilient cities for the future.

- Environmentalists find invaluable support in monitoring ecological changes. By classifying satellite images, they can track deforestation, glacier retreat, and habitat alterations, contributing to informed conservation strategies.

The dataset chosen for this project aptly mirrors these practical applications, underscoring the real-world significance and impact of robust satellite image classification methods.

Future Directions and Challenges

The journey ahead holds exciting possibilities and critical challenges in the dynamic field of satellite image classification with Vision Transformers. While our dataset provides a strong foundation, addressing the scarcity of labeled data remains a crucial challenge. Future research endeavors will likely focus on innovative techniques such as semi-supervised learning and transfer learning to extract valuable insights from limited annotated datasets.

Furthermore, the real-world environment presents an ever-shifting landscape of satellite image conditions. Researchers continually strive to enhance model robustness to maintain relevance, ensuring reliable performance across a broader spectrum of satellite image scenarios, from varying weather conditions to geographical diversity. Navigating these avenues will lead to advancements that extend the boundaries of satellite image classification’s efficacy and applicability.

Conclusion

In conclusion, our journey through satellite image classification using Vision Transformers has showcased the transformative potential of deep learning in handling real-world challenges. With a dataset comprising 5631 images categorized into four distinct classes—cloudy, desert, green area, and water—we’ve demonstrated the power of ViTs in distinguishing between diverse environmental conditions. This work paves the way for impactful applications in environmental monitoring, agriculture, disaster response, and beyond. Our dataset, mirroring the complexities of the natural world, underscores the practical relevance of our endeavors. As we look to the future, we’re excited about the possibilities that await in the ever-evolving landscape of satellite image classification.

Key Takeaways

- Satellite imagery is crucial in diverse fields, including environmental monitoring, disaster management, and urban planning.

- Vision Transformers (ViTs) offer a promising approach for accurate satellite image classification, leveraging self-attention mechanisms and deep learning.

- The dataset used in this project reflects real-world challenges and practical applications, highlighting the potential impact of ViTs in understanding and managing our environment.

Frequently Asked Questions

Answer: Accurate satellite image classification is vital for various applications, such as land use mapping, disaster management, and environmental monitoring. It provides insights into our changing world and aids in decision-making.

Answer: ViTs use self-attention mechanisms, akin to human perception, to process images holistically and capture complex patterns. This differs from CNNs, which rely on fixed grid structures.

Answer: ViTs have shown promise in handling diverse satellite image conditions. They can adapt to various environmental scenarios and effectively classify images under different conditions.

Answer: Practical applications include crop type identification, disaster early warning systems, urban planning, and ecological monitoring, among others. It has wide-ranging benefits across industries.

Answer: Using code to extract attention weights from the ViT model and overlay them on the original image, you can visualize attention maps. This helps interpret why the model made specific classifications.

The media shown in this article is not owned by Analytics Vidhya and is used at the Author’s discretion.

Related

- SEO Powered Content & PR Distribution. Get Amplified Today.

- PlatoData.Network Vertical Generative Ai. Empower Yourself. Access Here.

- PlatoAiStream. Web3 Intelligence. Knowledge Amplified. Access Here.

- PlatoESG. Carbon, CleanTech, Energy, Environment, Solar, Waste Management. Access Here.

- PlatoHealth. Biotech and Clinical Trials Intelligence. Access Here.

- Source: https://www.analyticsvidhya.com/blog/2023/10/satellite-image-classification-using-vision-transformers/

- :has

- :is

- :not

- :where

- 1

- 10

- 11

- 12

- 13

- 19

- 224

- 8

- a

- ability

- About

- accuracy

- accurate

- across

- Activation

- actual

- Adam

- adapt

- adaptability

- adaptation

- add

- added

- addressing

- adept

- advance

- advancements

- advantages

- agencies

- Agricultural

- agriculture

- ahead

- aids

- aim

- align

- All

- allocation

- allow

- Allowing

- alterations

- among

- an

- analysis

- analytics

- Analytics Vidhya

- analyze

- and

- and infrastructure

- any

- apart

- applications

- Apply

- approach

- architecture

- ARE

- AREA

- areas

- Array

- article

- AS

- asked

- Assessing

- assessments

- asset

- assists

- At

- attend

- attention

- augmented

- avenues

- await

- base

- Beauty

- became

- become

- becomes

- before

- benefits

- Betterment

- between

- Beyond

- blogathon

- bodies

- boost

- boundaries

- breakthrough

- Bright

- broader

- by

- CAN

- capable

- Capacity

- capture

- Capturing

- care

- categories

- Category

- challenge

- challenges

- change

- Changes

- changing

- characteristics

- choice

- chosen

- Cities

- City

- class

- classes

- classification

- Classify

- Climate

- Climate change

- closely

- code

- collection

- complex

- complexities

- component

- components

- comprehensive

- comprises

- comprising

- computer

- Computer Vision

- conclusion

- conditions

- connected

- CONSERVATION

- considered

- consistent

- consists

- context

- contextual

- continually

- continues

- contributes

- contributing

- control

- Core

- create

- critical

- crop

- crucial

- curated

- cutting-edge

- damage

- data

- data science

- datasets

- Decipher

- Decision Making

- deep

- deep learning

- define

- deforestation

- delve

- demonstrated

- demonstrating

- dependencies

- depends

- DESERT

- detail

- Development

- differ

- different

- Dimension

- disaster

- disasters

- discretion

- Disease

- distinct

- diverse

- Diversity

- do

- domains

- during

- dynamic

- e

- each

- Early

- Ecological

- Effective

- effectively

- efficacy

- efficient

- efforts

- embark

- embedded

- embedding

- emergence

- empowers

- enable

- enables

- enabling

- encompass

- end

- endeavors

- enhance

- ensure

- ensuring

- Entire

- Environment

- environmental

- epoch

- epochs

- Era

- essential

- evaluate

- evaluated

- evolution

- Excel

- excited

- exciting

- expansion

- expansive

- experimental

- exploration

- expressive

- extend

- extract

- Eyes

- facilitate

- farmers

- Features

- field

- Fields

- final

- Find

- fires

- fixed

- flood

- Focus

- focusing

- following

- food

- For

- forest

- fostering

- Foundation

- four

- from

- full

- fully

- functions

- further

- Furthermore

- future

- Gains

- generated

- geographical

- Global

- great

- Green

- Grid

- groundbreaking

- Grow

- Growth

- handle

- Handling

- harness

- harvest

- Have

- head

- heads

- Health

- heavily

- helped

- helps

- Hidden

- High

- highlighting

- history

- holds

- Horizontal

- How

- However

- HTTPS

- Hub

- human

- i

- Identification

- identifying

- image

- image analysis

- Image classification

- ImageNet

- images

- Impact

- impactful

- Impacts

- implemented

- import

- importance

- in

- include

- includes

- Including

- increasing

- increasingly

- industries

- inform

- information

- informed

- Infrastructure

- innovative

- input

- inputs

- insights

- inspired

- interventions

- into

- intricate

- intriguing

- invaluable

- involved

- IT

- ITS

- itself

- journey

- keras

- Key

- known

- Label

- Labels

- Land

- landscape

- layer

- layers

- Layout

- lead

- LEARN

- learned

- learning

- Level

- leveraging

- like

- likely

- Limited

- Lives

- Long

- Look

- loss

- lower

- made

- maintain

- make

- MAKES

- Making

- manage

- management

- managing

- manner

- many

- mapping

- Maps

- matplotlib

- mechanism

- mechanisms

- Media

- medical

- methods

- meticulously

- milestone

- minimizing

- mirroring

- model

- models

- Modern

- monitoring

- more

- multifaceted

- multiple

- Natural

- navigating

- Need

- network

- networks

- Neural

- neural network

- neural networks

- New

- Noise

- numpy

- of

- offer

- offering

- often

- on

- ONE

- opened

- opportunities

- optimizing

- or

- original

- Others

- our

- output

- over

- owned

- pandas

- part

- particularly

- Patch

- Patches

- patterns

- perception

- performance

- Pictures

- pivotal

- Pixel

- Place

- planet

- planning

- plato

- Plato Data Intelligence

- PlatoData

- plays

- Point

- positions

- possibilities

- potential

- power

- powerful

- Practical

- Practical Applications

- precise

- precisely

- Precision

- prediction

- Predictions

- preparation

- presents

- previously

- process

- processing

- profoundly

- project

- promise

- promises

- promising

- provide

- provides

- prowess

- published

- purposes

- Push

- quest

- rapid

- rapidly

- real world

- recognize

- recovery

- refine

- reflects

- regions

- Relationships

- relative

- relevance

- relevant

- reliable

- relief

- rely

- remains

- replace

- representation

- represents

- research

- researchers

- resembling

- resilient

- Resolution

- resource

- response

- Results

- Retreat

- return

- Rich

- right

- Rise

- robust

- robustness

- Role

- satellite

- satellite imagery

- saving

- Scarcity

- scenarios

- Science

- scientists

- scores

- security

- separate

- serve

- serves

- set

- Sets

- several

- showcased

- shown

- significance

- significant

- signifies

- Size

- skies

- smaller

- Solutions

- Source

- Spatial

- specific

- Spectrum

- strategies

- strive

- strong

- structures

- successful

- such

- suitable

- support

- suppressing

- Surface

- Sustainability

- sustainable

- system

- Systems

- targeted

- Task

- tasks

- techniques

- tensorflow

- test

- Testing

- that

- The

- The Future

- their

- Them

- There.

- These

- they

- this

- Through

- time

- time-sensitive

- to

- together

- took

- tool

- track

- Tracking

- traditional

- Train

- Training

- transfer

- Transform

- transformations

- transformative

- transformer

- transformers

- true

- two

- type

- types

- typically

- Ultimately

- uncover

- under

- underscores

- understand

- understanding

- Underway

- unique

- unlike

- unveil

- urban

- URL

- us

- Usage

- use

- used

- using

- Valuable

- Values

- vantage point

- variations

- various

- varying

- versatile

- vision

- visualize

- vital

- volume

- warning

- was

- Water

- Way..

- we

- Weather

- weather patterns

- webp

- weigh

- were

- What

- What is

- whether

- which

- while

- why

- will

- with

- within

- Work

- work together

- working

- world

- X

- Yield

- you

- Your

- zephyrnet

- zooming