Introduction

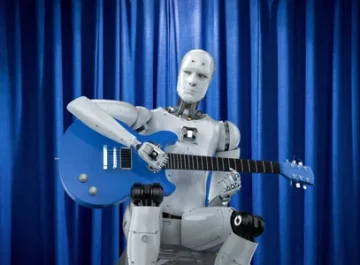

The ability to transform a single image into a detailed 3D model has long been a pursuit in the field of computer vision and generative AI. Stability AI’s TripoSR marks a significant leap forward in this quest, offering a revolutionary approach to 3D reconstruction from images. It empowers researchers, developers, and creatives with unparalleled speed and accuracy in transforming 2D visuals into immersive 3D representations. Moreover, the innovative model opens up a myriad of applications across diverse fields, from computer graphics and virtual reality to robotics and medical imaging. In this article, we will delve into the architecture, working, features, and applications of Stability AI’s TripoSR model.

Table of contents

What is TripoSR?

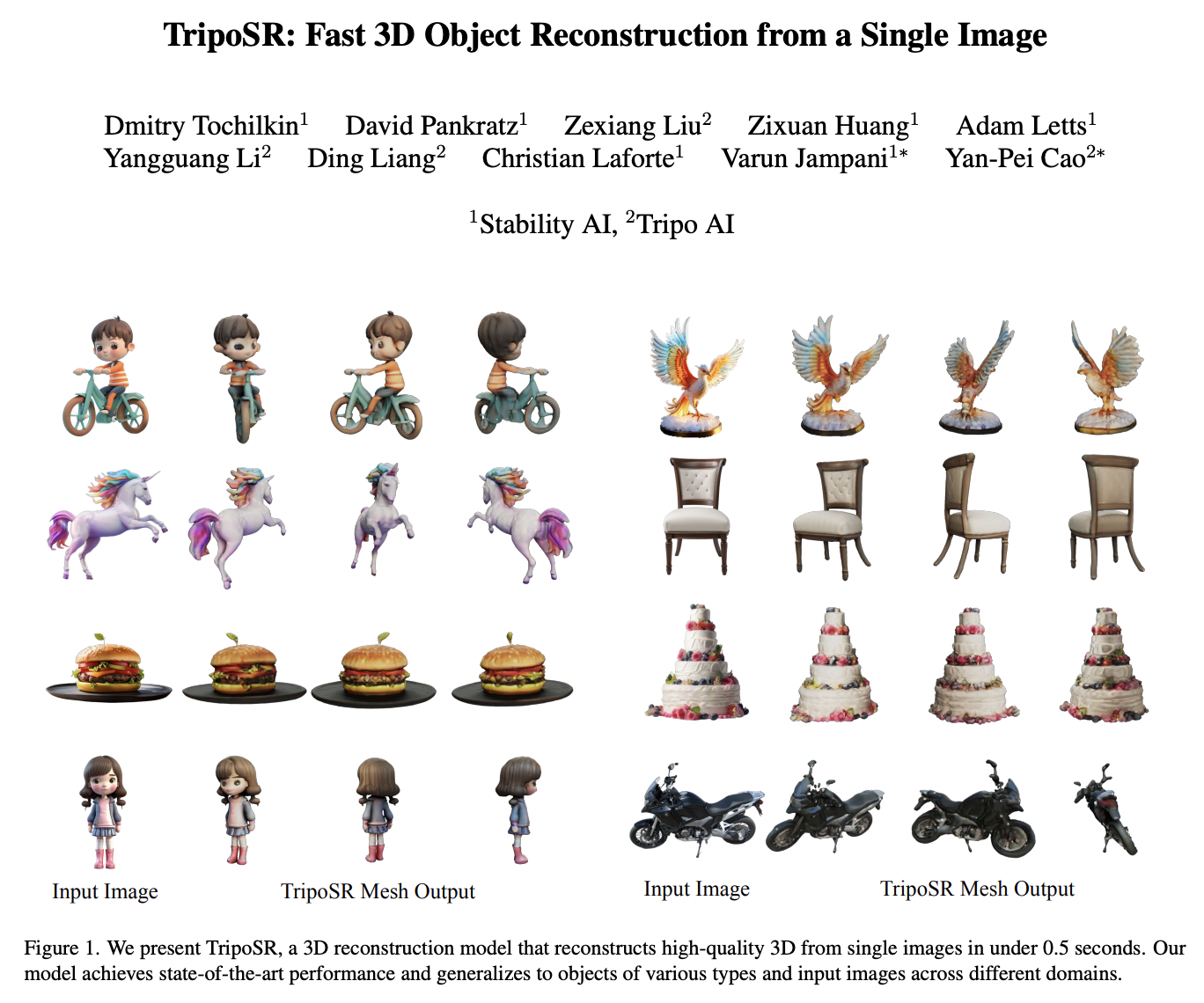

TripoSR is a 3D reconstruction model that leverages transformer architecture for fast feed-forward 3D generation, producing 3D mesh from a single image in under 0.5 seconds. It is built upon the LRM network architecture and integrates substantial improvements in data processing, model design, and training techniques. The model is released under the MIT license, aiming to empower researchers, developers, and creatives with the latest advancements in 3D generative AI.

LRM Architecture of Stability AI’s TripoSR

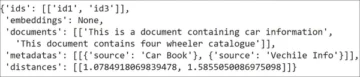

Similar to LRM, TripoSR leverages the transformer architecture and is specifically designed for single-image 3D reconstruction. It takes a single RGB image as input and outputs a 3D representation of the object in the image. The core of TripoSR includes three components: an image encoder, an image-to-triplane decoder, and a triplane-based neural radiance field (NeRF). Let’s understand each of these components clearly.

Image Encoder

The image encoder is initialized with a pre-trained vision transformer model, DINOv1. This model projects an RGB image into a set of latent vectors encoding global and local features of the image. These vectors contain the necessary information to reconstruct the 3D object.

Image-to-Triplane Decoder

The image-to-triplane decoder transforms the latent vectors onto the triplane-NeRF representation. This is a compact and expressive 3D representation suitable for complex shapes and textures. It consists of a stack of transformer layers, each with a self-attention layer and a cross-attention layer. This allows the decoder to attend to different parts of the triplane representation and learn the relationships between them.

Triplane-based Neural Radiance Field (NeRF)

The triplane-based NeRF model comprises a stack of multilayer perceptrons responsible for predicting the color and density of a 3D point in space. This component plays a crucial role in accurately representing the 3D object’s shape and texture.

How These Components Work Together?

The image encoder captures the global and local features of the input image. These are then transformed into the triplane-NeRF representation by the image-to-triplane decoder. The NeRF model further processes this representation to predict the color and density of 3D points in space. By integrating these components, TripoSR achieves fast feed-forward 3D generation with high reconstruction quality and computational efficiency.

TripoSR’s Technical Advancements

In the pursuit of enhancing 3D generative AI, TripoSR introduces several technical advancements aimed at empowering efficiency and performance. These advancements include data curation techniques for enhanced training, rendering techniques for optimized reconstruction quality, and model configuration adjustments for balancing speed and accuracy. Let’s explore these further.

Data Curation Techniques for Enhanced Training

TripoSR incorporates meticulous data curation techniques to bolster the quality of training data. By selectively curating a subset of the Objaverse dataset under the CC-BY license, the model ensures that the training data is of high quality. This deliberate curation process aims to enhance the model’s ability to generalize and produce accurate 3D reconstructions. Additionally, the model leverages a diverse array of data rendering techniques to closely emulate real-world image distributions. This further augments its capacity to handle a wide range of scenarios and produce high-quality reconstructions.

Rendering Techniques for Optimized Reconstruction Quality

To optimize reconstruction quality, TripoSR employs rendering techniques that balance computational efficiency and reconstruction granularity. During training, the model renders 128 × 128-sized random patches from original 512 × 512 resolution images. Simultaneously, it effectively manages computational and GPU memory loads. Furthermore, TripoSR implements an important sampling strategy to emphasize foreground regions, ensuring faithful reconstructions of object surface details. These rendering techniques contribute to the model’s ability to produce high-quality 3D reconstructions while maintaining computational efficiency.

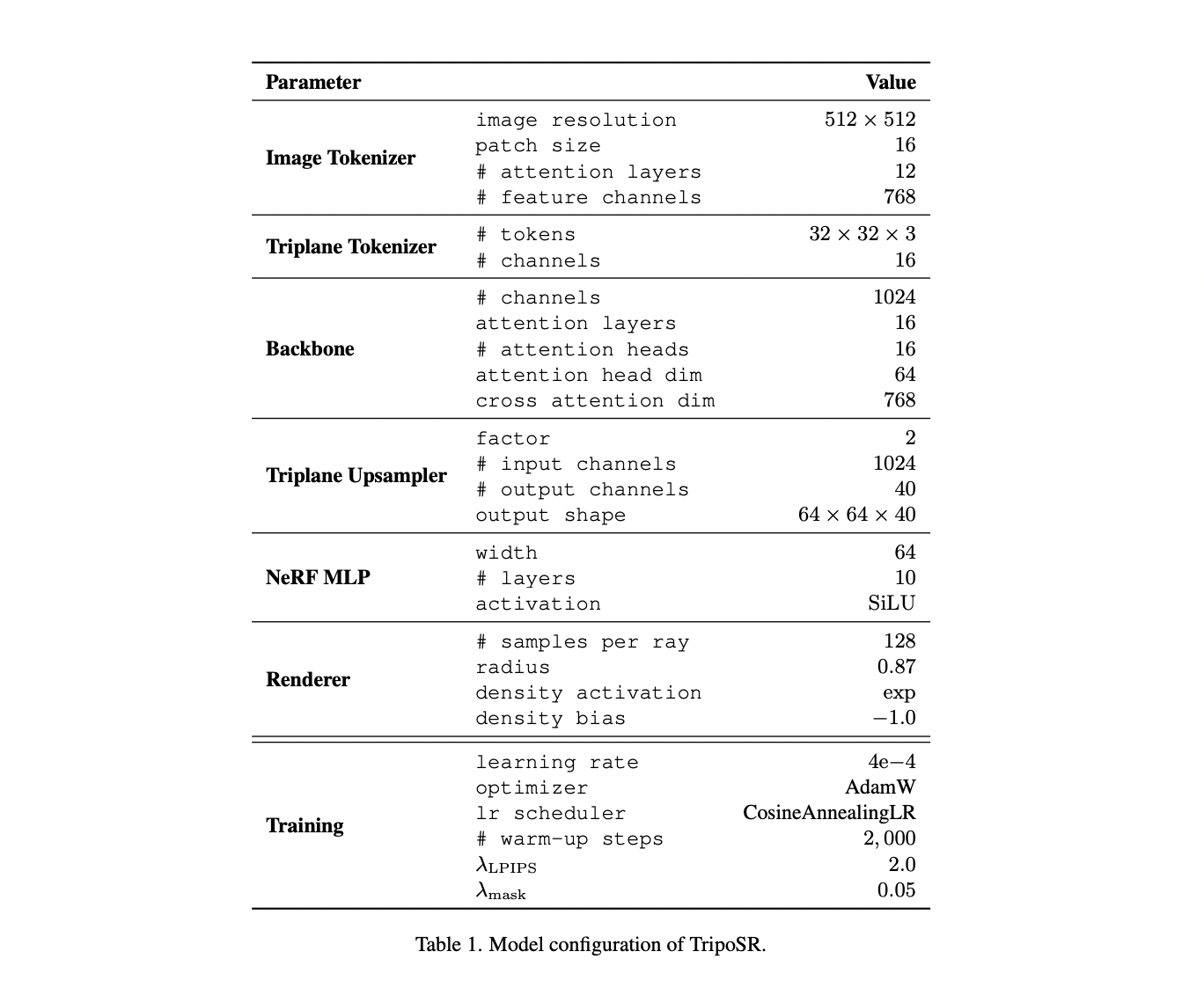

Model Configuration Adjustments for Balancing Speed and Accuracy

In an effort to balance speed and accuracy, TripoSR makes strategic model configuration adjustments. The model forgoes explicit camera parameter conditioning, allowing it to “guess” camera parameters during training and inference. This approach enhances the model’s adaptability and resilience to real-world input images, eliminating the need for precise camera information.

Additionally, TripoSR also introduces technical improvements in the number of layers in the transformer and the dimensions of the triplanes. The specifics of the NeRF model and the main training configurations have also been improved. These adjustments contribute to the model’s ability to achieve rapid 3D model generation with precise control over the output models.

TripoSR’s Performance on Public Datasets

Now let’s evaluate TripoSR’s performance on public datasets by employing a range of evaluation metrics, and comparing its results with state-of-the-art methods.

Evaluation Metrics for 3D Reconstruction

To assess the performance of TripoSR, we utilize a set of evaluation metrics for 3D reconstruction. We curate two public datasets, GSO and OmniObject3D, for evaluations, ensuring a diverse and representative collection of common objects.

The evaluation metrics include Chamfer Distance (CD) and F-score (FS), which are calculated by extracting the isosurface using Marching Cubes to convert implicit 3D representations into meshes. Additionally, we employ a brute-force search approach to align the predictions with the ground truth shapes, optimizing for the lowest CD. These metrics enable a comprehensive assessment of TripoSR’s reconstruction quality and accuracy.

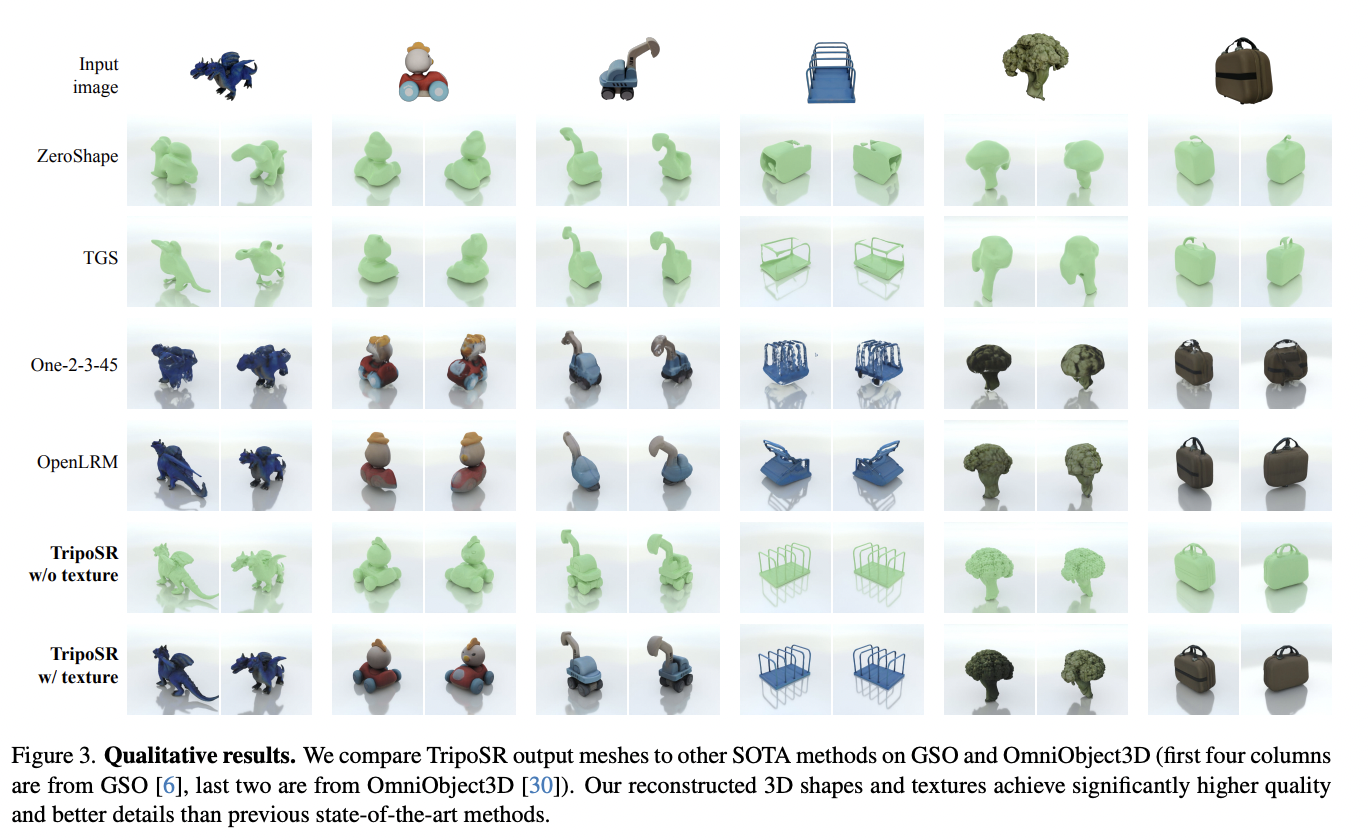

Comparing TripoSR with State-of-the-Art Methods

We quantitatively compare TripoSR with existing state-of-the-art baselines on 3D reconstruction that use feed-forward techniques, including One-2-3-45, TriplaneGaussian (TGS), ZeroShape, and OpenLRM. The comparison reveals that TripoSR significantly outperforms all the baselines in terms of CD and FS metrics, achieving new state-of-the-art performance on this task.

Furthermore, we present a 2D plot of different techniques with inference times along the x-axis and the averaged F-Score along the y-axis. This demonstrates that TripoSR is among the fastest networks while also being the best-performing feed-forward 3D reconstruction model.

Quantitative and Qualitative Results

The quantitative results showcase TripoSR’s exceptional performance, with F-Score improvements across different thresholds, including [email protected], [email protected], and [email protected]. These metrics demonstrate TripoSR’s ability to achieve high precision and accuracy in 3D reconstruction. Additionally, the qualitative results, as depicted in Figure 3, provide a visual comparison of TripoSR’s output meshes with other state-of-the-art methods on GSO and OmniObject3D datasets.

The visual comparison highlights TripoSR’s significantly higher quality and better details in reconstructed 3D shapes and textures compared to previous methods. These quantitative and qualitative results demonstrate TripoSR’s superiority in 3D reconstruction.

The Future of 3D Reconstruction with TripoSR

TripoSR, with its fast feed-forward 3D generation capabilities, holds significant potential for various applications across different fields. Additionally, ongoing research and development efforts are paving the way for further advancements in the realm of 3D generative AI.

Potential Applications of TripoSR in Various Fields

The introduction of TripoSR has opened up a myriad of potential applications in diverse fields. In the domain of AI, TripoSR’s ability to rapidly generate high-quality 3D models from single images can significantly impact the development of advanced 3D generative AI models. Furthermore, in computer vision, TripoSR’s superior performance in 3D reconstruction can enhance the accuracy and precision of object recognition and scene understanding.

In the field of computer graphics, TripoSR’s capability to produce detailed 3D objects from single images can revolutionize the creation of virtual environments and digital content. Moreover, in the broader context of AI and computer vision, TripoSR’s efficiency and performance can potentially drive progress in applications such as robotics, augmented reality, virtual reality, and medical imaging.

Ongoing Research and Development for Further Advancements

The release of TripoSR under the MIT license has sparked ongoing research and development efforts aimed at further advancing 3D generative AI. Researchers and developers are actively exploring ways to enhance TripoSR’s capabilities, including improving its efficiency, expanding its applicability to diverse domains, and refining its reconstruction quality.

Additionally, ongoing efforts are focused on optimizing TripoSR for real-world scenarios, ensuring its robustness and adaptability to a wide range of input images. Furthermore, the open-source nature of TripoSR has fostered collaborative research initiatives, driving the development of innovative techniques and methodologies for 3D reconstruction.

These ongoing research and development endeavors are poised to propel TripoSR to new heights, solidifying its position as a leading model in the field of 3D generative AI.

Conclusion

TripoSR’s remarkable achievement in producing high-quality 3D models from a single image in under 0.5 seconds is a testament to the rapid advancements in generative AI. By combining state-of-the-art transformer architectures, meticulous data curation techniques, and optimized rendering approaches, TripoSR has set a new benchmark for feed-forward 3D reconstruction.

As researchers and developers continue to explore the potential of this open-source model, the future of 3D generative AI appears brighter than ever. Its applications span diverse domains, from computer graphics and virtual environments to robotics and medical imaging, promising exponential growth in the future. Hence, TripoSR is poised to drive innovation and unlock new frontiers in fields where 3D visualization and reconstruction play a crucial role.

Loved reading this? You can explore many more such AI tools and their applications here.

- SEO Powered Content & PR Distribution. Get Amplified Today.

- PlatoData.Network Vertical Generative Ai. Empower Yourself. Access Here.

- PlatoAiStream. Web3 Intelligence. Knowledge Amplified. Access Here.

- PlatoESG. Carbon, CleanTech, Energy, Environment, Solar, Waste Management. Access Here.

- PlatoHealth. Biotech and Clinical Trials Intelligence. Access Here.

- Source: https://www.analyticsvidhya.com/blog/2024/03/stability-ais-triposr-from-image-to-3d-model-in-seconds/

- :has

- :is

- :where

- $UP

- 06

- 21

- 23

- 2D

- 3d

- 5

- a

- ability

- accuracy

- accurate

- accurately

- Achieve

- achievement

- Achieves

- achieving

- across

- actively

- adaptability

- Additionally

- adjustments

- advanced

- advancements

- advancing

- AI

- AI models

- aimed

- Aiming

- aims

- align

- All

- Allowing

- allows

- along

- also

- among

- an

- and

- appears

- applications

- approach

- approaches

- architecture

- architectures

- ARE

- Array

- article

- AS

- assess

- assessment

- At

- attend

- augmented

- Augmented Reality

- augments

- Balance

- balancing

- been

- being

- Benchmark

- Better

- between

- bolster

- brighter

- broader

- built

- by

- calculated

- camera

- CAN

- capabilities

- capability

- Capacity

- captures

- CD

- clearly

- closely

- collaborative

- collection

- color

- combining

- Common

- compact

- compare

- compared

- comparing

- comparison

- complex

- component

- components

- comprehensive

- comprises

- computational

- computer

- computer graphics

- Computer Vision

- Configuration

- configurations

- consists

- contain

- content

- context

- continue

- contribute

- control

- convert

- Core

- creation

- creatives

- crucial

- curate

- curating

- curation

- data

- datasets

- delve

- Demo

- demonstrate

- demonstrates

- density

- depicted

- Design

- designed

- detailed

- details

- developers

- Development

- different

- digital

- Digital Content

- dimensions

- distance

- distributions

- diverse

- domain

- domains

- drive

- driving

- during

- each

- effectively

- efficiency

- effort

- efforts

- eliminating

- emphasize

- employing

- employs

- empower

- empowering

- empowers

- enable

- encoding

- endeavors

- enhance

- enhanced

- Enhances

- enhancing

- ensures

- ensuring

- environments

- Ether (ETH)

- evaluate

- evaluation

- evaluations

- EVER

- exceptional

- existing

- expanding

- explore

- Exploring

- exponential

- Exponential Growth

- expressive

- faithful

- FAST

- fastest

- Features

- field

- Fields

- Figure

- focused

- For

- Forward

- fostered

- from

- Frontiers

- FS

- further

- Furthermore

- future

- generate

- generation

- generative

- Generative AI

- Global

- GPU

- graphics

- Ground

- Growth

- handle

- Have

- heights

- hence

- High

- high-quality

- higher

- highlights

- holds

- How

- HTTPS

- image

- images

- Imaging

- immersive

- Impact

- implements

- important

- improved

- improvements

- improving

- in

- include

- includes

- Including

- incorporates

- information

- initiatives

- Innovation

- innovative

- input

- Integrates

- Integrating

- into

- Introduces

- Introduction

- IT

- ITS

- latest

- layer

- layers

- leading

- Leap

- LEARN

- leverages

- License

- loads

- local

- Long

- lowest

- Main

- maintaining

- MAKES

- manages

- many

- max-width

- medical

- medical imaging

- Memory

- mesh

- methodologies

- methods

- meticulous

- Metrics

- MIT

- model

- models

- more

- Moreover

- myriad

- Nature

- necessary

- Need

- Nerf

- network

- networks

- Neural

- New

- number

- object

- objects

- of

- offering

- on

- ongoing

- onto

- open source

- opened

- opens

- Optimize

- optimized

- optimizing

- original

- Other

- Outperforms

- output

- outputs

- over

- parameter

- parameters

- parts

- Patches

- Paving

- performance

- plato

- Plato Data Intelligence

- PlatoData

- Play

- plays

- plot

- Point

- points

- poised

- position

- potential

- potentially

- precise

- Precision

- predict

- predicting

- Predictions

- present

- previous

- process

- processes

- produce

- producing

- Progress

- projects

- promising

- Propel

- protected

- provide

- public

- pursuit

- qualitative

- quality

- quantitative

- quest

- random

- range

- rapid

- rapidly

- Reading

- real world

- Reality

- realm

- recognition

- refining

- regions

- Relationships

- release

- released

- remarkable

- rendering

- renders

- representation

- representations

- representative

- representing

- research

- research and development

- researchers

- resilience

- Resolution

- responsible

- Results

- Reveals

- revolutionary

- revolutionize

- RGB

- robotics

- robustness

- Role

- s

- scenarios

- scene

- Search

- seconds

- set

- several

- Shape

- shapes

- showcase

- significant

- significantly

- simultaneously

- single

- solidifying

- Space

- span

- sparked

- specifically

- specifics

- speed

- Stability

- stack

- state-of-the-art

- Strategic

- Strategy

- substantial

- such

- suitable

- superior

- superiority

- Surface

- takes

- Task

- Technical

- techniques

- terms

- testament

- tgs

- than

- that

- The

- The Future

- their

- Them

- then

- These

- this

- three

- times

- to

- together

- tools

- Training

- Transform

- transformed

- transformer

- transforming

- transforms

- truth

- two

- under

- understand

- understanding

- unlock

- unparalleled

- upon

- use

- using

- utilize

- various

- vectors

- Virtual

- Virtual reality

- vision

- visual

- visualization

- visuals

- Way..

- ways

- we

- which

- while

- wide

- Wide range

- will

- with

- Work

- work together

- working

- you

- zephyrnet