Image generated by DALL-E 3

In the ever-expanding universe of AI and ML, a new star has emerged: prompt engineering. This burgeoning field revolves around the strategic crafting of inputs designed to steer AI models toward generating specific, desired outputs.

Various media outlets have been talking about prompt engineering with much fanfare, making it seem like it’s the ideal job—you don’t need to learn how to code, nor do you have to be knowledgeable about ML concepts like deep learning, datasets, etc. You’d agree that it seems too good to be true, right?

The answer is both yes and no, actually. We’ll explain exactly why in today’s article, as we trace the beginnings of prompt engineering, why it’s important, and most importantly, why it’s not the life-changing career that will move millions up the social ladder.

We’ve all seen the numbers—the global AI market will be worth $1.6 trillion by 2030, OpenAI is offering $900k salaries, and that’s without even mentioning the billions, if not trillions of words churned out by GPT-4, Claude and various other LLMs. Of course, data scientists, ML experts, and other high-level pros in the field are at the forefront.

However, 2022 changed everything, as GPT-3 became ubiquitous the moment it became publicly available. Suddenly, the average Joe realized the importance of prompts and the notion of GIGO: garbage in, garbage out. If you write a sloppy prompt without any details, the LLM will have free rein over the output. Prompting was simple at first, but users soon realized the model’s true capabilities.

People then began experimenting with more complex workflows and longer prompts, further emphasizing the value of weaving words skillfully. Custom instructions widened the possibilities and accelerated the rise of the prompt engineer: a professional who can use logic, reasoning, and knowledge of an LLM’s behavior to produce the desired output on demand.

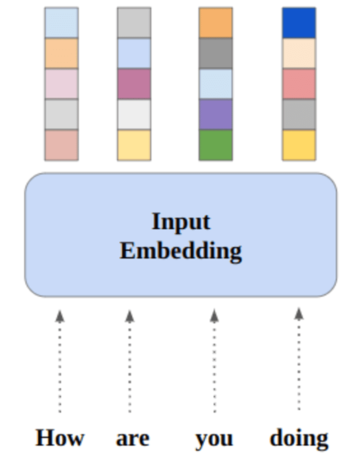

At the zenith of its potential, prompt engineering has catalyzed notable advances in natural language processing (NLP). AI models from the vanilla GPT-3.5 all the way to niche iterations of Meta’s LLaMA have, when fed meticulously crafted prompts, showcased an uncanny ability to adapt to a vast spectrum of tasks with remarkable agility.

Advocates of prompt engineering herald it as a conduit for innovation in AI, envisioning a future where human-AI interactions are seamlessly facilitated through the meticulous art of prompt crafting.

Yet, it’s precisely the promise of prompt engineering that has stoked the flames of controversy. Its capacity to deliver complex, nuanced, and even creative outputs from AI systems has not gone unnoticed. Visionaries within the field perceive prompt engineering as the key to unlocking the untapped potentials of AI, transforming it from a tool of computation to a partner in creation.

Scrutiny of Prompt Engineering

Amidst the crescendo of enthusiasm, voices of skepticism resonate. Detractors of prompt engineering point to its inherent limitations, arguing that it amounts to little more than a sophisticated manipulation of AI systems that lack fundamental understanding.

They contend that prompt engineering is a mere façade, a clever orchestration of inputs that belies the AI’s inherent incapacity to comprehend or reason. The following arguments also support their position:

- AI models come and go. For instance, something that worked in GPT-3 was already patched in GPT-3.5 and became a practical impossibility in GPT-4. Wouldn’t that make prompt engineers just connoisseurs of particular versions of LLMs?

- Even the best prompt engineers aren’t really ‘engineers’ per se. For instance, an SEO expert can use GPT plugins or even a locally run LLM to find backlink opportunities, or a software engineer might know how to use Copilot to write, test, and deploy code. But at the end of the day, they’re just that: single tasks that, in most cases, rely on previous expertise in a niche.

- Other than the occasional prompt engineering opening in Silicon Valley, there’s barely any awareness of prompt engineering as a job, let alone demand for it. Companies are adopting LLMs slowly and cautiously, as is the case with every innovation. But we all know that doesn’t stop the hype train.

The Hype Around Prompt Engineering

The allure of prompt engineering has not been immune to the forces of hype and hyperbole. Media narratives have oscillated between extolling its virtues and decrying its vices, often amplifying successes while downplaying its limitations. This dichotomy has sown confusion and inflated expectations, leading people to believe it’s either magic or completely worthless, and nothing in between.

Historical parallels with other tech fads also serve as a sobering reminder of the transient nature of technological trends. Technologies that once promised to revolutionize the world, from the metaverse to foldable phones, have often seen their luster fade as reality failed to meet the lofty expectations set by early hype. This pattern of inflated enthusiasm followed by disillusionment casts a shadow of doubt over the long-term viability of prompt engineering.

The Reality Behind the Hype

Peeling back the layers of hype reveals a more nuanced reality. Technical and ethical challenges abound, from the scalability of prompt engineering in diverse applications to concerns about reproducibility and standardization. When placed alongside traditional and well-established AI careers, such as those related to data science, prompt engineering’s sheen begins to dull, revealing a tool that, while powerful, is not without significant limitations.

That’s why prompt engineering is a fad: the notion that anyone can just converse with ChatGPT on a daily basis and land a job in the mid-six figures is nothing but a myth. Sure, a couple of overly enthusiastic Silicon Valley startups might be looking for a prompt engineer, but it’s not a viable career. At least not yet.

At the same time, prompt engineering as a concept will remain relevant, and certainly grow in importance. The skill of writing a good prompt, using your tokens efficiently, and knowing how to trigger certain outputs will be useful far beyond data science, LLMs, and AI as a whole.
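To make the difference between a sloppy prompt and a detailed one concrete, here is a minimal Python sketch. The helper names `build_prompt` and `rough_size`, the field labels, and the sample prompts are all hypothetical and not taken from any library. The word count is only a crude stand-in for real token counting, which depends on the model’s tokenizer.

```python
def build_prompt(task, audience=None, output_format=None, constraints=()):
    """Assemble a prompt from explicit parts; parts that are omitted are left out."""
    lines = [f"Task: {task}"]
    if audience:
        lines.append(f"Audience: {audience}")
    if output_format:
        lines.append(f"Format: {output_format}")
    for rule in constraints:
        lines.append(f"Constraint: {rule}")
    return "\n".join(lines)


def rough_size(prompt):
    """Whitespace word count: an assumed, crude proxy for token usage."""
    return len(prompt.split())


# A vague prompt leaves nearly every decision to the model.
vague = build_prompt("Write about dogs")

# A detailed prompt spends a few more words to pin down the output.
detailed = build_prompt(
    "Write a product description for a dog leash",
    audience="first-time dog owners",
    output_format="two short paragraphs",
    constraints=["under 80 words", "no exclamation marks"],
)

print(vague)
print(rough_size(vague), "words")
print(detailed)
print(rough_size(detailed), "words")
```

The detailed prompt costs more words, but each extra line removes a choice the model would otherwise make on its own, which is the trade-off behind using tokens efficiently.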

We’ve already seen how ChatGPT altered the way people learn, work, communicate, and even organize their lives, so the skill of prompting will only become more relevant. After all, who isn’t excited about automating the boring stuff with a reliable AI assistant?

Navigating the complex landscape of prompt engineering requires a balanced approach, one that acknowledges its potential while remaining grounded in the realities of its limitations. In addition, we must be aware that the term “prompt engineering” has two meanings:

- The act of prompting LLMs to do one’s bidding, with as little effort and as few steps as possible

- A career revolving around the act described above

So, in the future, as input windows increase and LLMs become more adept at creating much more than simple wireframes and robotic-sounding social media copy, prompt engineering will become an essential skill. Think of it as the equivalent of knowing how to use Word nowadays.

In sum, prompt engineering stands at a crossroads, its destiny shaped by a confluence of hype, hope, and hard reality. Whether it will solidify its place as a mainstay in the AI landscape or recede into the annals of tech fads remains to be seen. What is certain, however, is that its journey, controversial by all means, won’t be over anytime soon, for better or for worse.

Nahla Davies is a software developer and tech writer. Before devoting her work full time to technical writing, she managed, among other intriguing things, to serve as a lead programmer at an Inc. 5000 experiential branding organization whose clients include Samsung, Time Warner, Netflix, and Sony.

- Source: https://www.kdnuggets.com/the-rise-and-fall-of-prompt-engineering-fad-or-future?utm_source=rss&utm_medium=rss&utm_campaign=the-rise-and-fall-of-prompt-engineering-fad-or-future
