Steve Brierley argues that quantum computers must implement comprehensive error-correction techniques before they can become fully useful to society

“There are no persuasive arguments indicating that commercially viable applications will be found that do not use quantum error-correcting codes and fault-tolerant quantum computing.” So stated the Caltech physicist John Preskill during a talk at the end of 2023 at the Q2B23 meeting in California. Quite simply, anyone who wants to build a practical quantum computer will need to find a way to deal with errors.

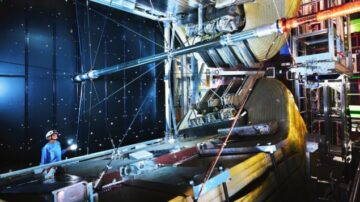

Quantum computers are getting ever more powerful, but their fundamental building blocks – quantum bits, or qubits – are highly error prone, limiting their widespread use. It is not enough to simply build quantum computers with more and better qubits. Unlocking the full potential of quantum-computing applications will require new hardware and software tools that can control inherently unstable qubits and comprehensively correct system errors 10 billion times or more per second.

Preskill’s words essentially announced the dawn of the so-called Quantum Error Correction (QEC) era. QEC is not a new idea and firms have for many years been developing technologies to protect the information stored in qubits from errors and decoherence caused by noise. What is new, however, is giving up on the idea that today’s noisy intermediate-scale quantum (NISQ) devices could outperform classical supercomputers and run applications that are currently impossible.

Sure, NISQ – a term that was coined by Preskill – was an important stepping stone on the journey to fault tolerance. But the quantum industry, investors and governments must now realize that error correction is quantum computing’s defining challenge.

A matter of time

QEC has already seen unprecedented progress in the last year alone. In 2023 Google demonstrated that a 17-qubit system could recover from a single error and a 49-qubit system from two errors (Nature 614 676). Amazon released a chip that suppressed errors by a factor of 100, while IBM scientists discovered a new error-correction scheme that works with 10 times fewer qubits (arXiv:2308.07915). Then at the end of the year, Harvard University’s quantum spin-out QuEra produced the largest number of error-corrected qubits yet.

Decoding, which turns many unreliable physical qubits into one or more reliable “logical” qubits, is a core QEC technology. That’s because large-scale quantum computers will generate terabytes of data every second that have to be decoded as fast as they are acquired to stop errors propagating and rendering calculations useless. If we don’t decode fast enough, we will be faced with an exponentially growing backlog of data.
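To make the idea of decoding concrete, here is a minimal classical sketch, assuming the simplest possible scheme: a repetition code decoded by majority vote. It is only an analogy. Real quantum decoders work on syndrome measurements rather than on the data itself, and nothing here represents Riverlane's decoder or any other production system. The function names and the noise model (independent bit flips with probability `p`) are illustrative assumptions.

```python
import random


def encode(bit, n):
    """Store one logical bit as n identical physical bits (repetition code)."""
    return [bit] * n


def apply_noise(bits, p, rng):
    """Flip each physical bit independently with probability p (assumed noise model)."""
    return [b ^ 1 if rng.random() < p else b for b in bits]


def decode(bits):
    """Majority vote: return the value held by more than half of the physical bits."""
    return 1 if sum(bits) * 2 > len(bits) else 0


def logical_error_rate(n, p, trials, seed=0):
    """Estimate how often the decoded bit is wrong after noise, by simulation."""
    rng = random.Random(seed)
    failures = 0
    for _ in range(trials):
        received = apply_noise(encode(0, n), p, rng)
        if decode(received) != 0:
            failures += 1
    return failures / trials


if __name__ == "__main__":
    # With a 5% physical error rate, more physical bits per logical bit
    # should give a lower logical error rate.
    for n in (1, 3, 9):
        print(n, logical_error_rate(n, p=0.05, trials=20000))
```

In this toy model the logical bit fails only when more than half of the physical bits flip, which is why adding redundancy helps as long as the physical error rate is low enough.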


My own company – Riverlane – last year introduced the world’s most powerful quantum decoder. Our decoder is solving this backlog issue but there’s still a lot more work to do. The company is currently developing “streaming decoders” that can process continuous streams of measurement results as they arrive, rather than after an experiment is finished. And decoders are just one aspect of QEC – we also need high-accuracy, high-speed “control systems” to read and write the qubits.

As quantum computers continue to scale, these decoder and control systems must work together to produce error-free logical qubits and, by 2026, Riverlane aims to have built an adaptive, or real-time, decoder. Today’s machines are only capable of a few hundred error-free operations but future developments will work with quantum computers capable of processing a million error-free quantum operations (known as a MegaQuOp).
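A rough back-of-the-envelope calculation shows why the jump from a few hundred to a million error-free operations is so demanding. The sketch below assumes that every operation fails independently with the same probability `p`, so that a run of `N` operations succeeds with probability (1 − p)^N. This is a simplification introduced here for illustration, not a model taken from any roadmap, and the function names are hypothetical.

```python
import math


def success_probability(error_rate, operations):
    """Probability that all operations succeed, assuming independent errors."""
    # (1 - p)^N, computed via logarithms to stay accurate for tiny p.
    return math.exp(operations * math.log1p(-error_rate))


def max_error_rate(operations, target_success):
    """Largest per-operation error rate that still meets the target success probability."""
    # Solve (1 - p)^N = target for p.
    return -math.expm1(math.log(target_success) / operations)


if __name__ == "__main__":
    for name, n in (("few hundred", 300), ("MegaQuOp", 10**6), ("TeraQuOp", 10**12)):
        print(name, max_error_rate(n, target_success=0.5))
```

Under these assumptions, finishing a million operations with even a 50% chance of success needs a per-operation error rate below about one in a million, and a trillion operations needs roughly one in a trillion.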

Riverlane is not alone in such endeavours and other quantum companies are now prioritizing QEC. IBM has not previously worked on QEC technology, focusing instead on more and better qubits. But the firm’s 2033 quantum roadmap states that IBM aims to build a 1000-qubit machine by the end of the decade that is capable of useful computations – such as simulating the workings of catalyst molecules.

QuEra, meanwhile, recently unveiled a roadmap that also prioritizes QEC, while the UK’s National Quantum Strategy aims to build quantum computers capable of running a trillion error-free operations (TeraQuOps) by 2035. Other nations have published similar plans and a 2035 target feels achievable, partly because the quantum-computing community is starting to aim for smaller, incremental – but just as ambitious – goals.


What really excites me about the UK’s National Quantum Strategy is the goal to have a MegaQuOp machine by 2028. Again, this is a realistic target – in fact, I’d even argue that we’ll reach the MegaQuOp regime sooner, which is why Riverlane’s QEC solution, Deltaflow, will be ready to work with these MegaQuOp machines by 2026. We don’t need any radically new physics to build a MegaQuOp quantum computer – and such a machine will help us better understand and profile quantum errors.

Once we understand these errors, we can start to fix them and proceed toward TeraQuOp machines. The TeraQuOp is also a floating target – and one where improvements both in QEC and elsewhere could result in the 2035 target being delivered a few years earlier.

It is only a matter of time before quantum computers are useful for society. And now that we have a co-ordinated focus on quantum error correction, we will reach that tipping point sooner rather than later.

- Source: https://physicsworld.com/a/why-error-correction-is-quantum-computings-defining-challenge/
