- Graph of Thoughts (GoT) is a novel framework designed to enhance the prompting capabilities of Large Language Models (LLMs) for complex problem-solving tasks.

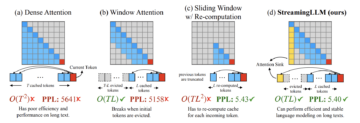

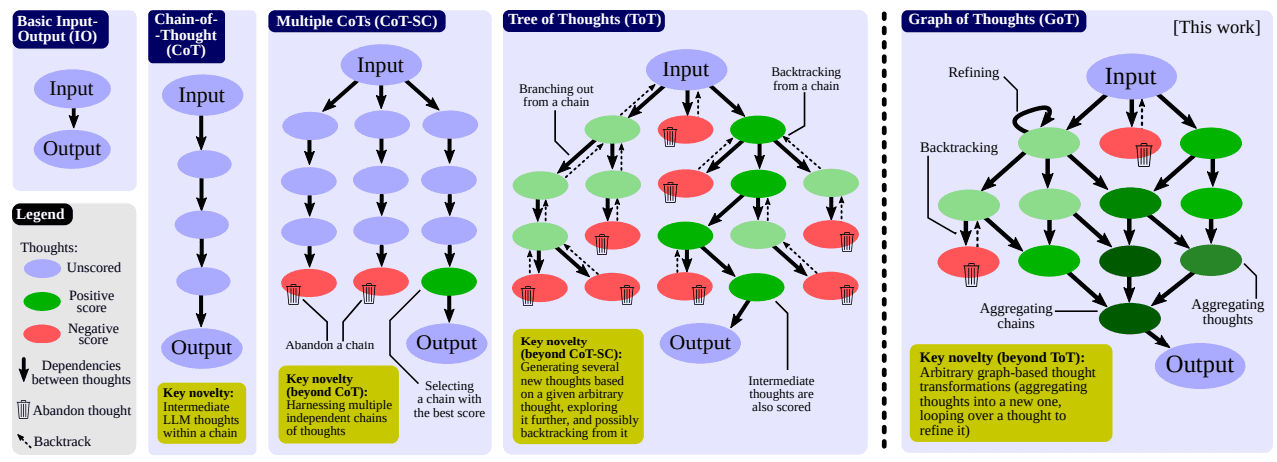

- GoT surpasses existing paradigms like Chain-of-Thought (CoT) and Tree of Thoughts (ToT) by representing the information generated by an LLM as a graph, allowing for more flexible and efficient reasoning.

- The framework has shown significant improvements in task performance, including a 62% increase in sorting quality over Tree of Thoughts while also reducing costs by more than 31%.

This work brings LLM reasoning closer to human thinking and to brain mechanisms such as recurrence, both of which form complex networks.

The burgeoning landscape of artificial intelligence has given rise to increasingly sophisticated Large Language Models (LLMs) capable of a wide range of tasks. Yet one ongoing challenge is improving these models’ ability to solve elaborate problems efficiently. Enter Graph of Thoughts (GoT), a framework that aims to take a giant leap in this direction. GoT advances the prompting capabilities of LLMs by structuring the information they generate into a graph, thereby enabling a more intricate and flexible form of reasoning.

While existing paradigms like Chain-of-Thought (CoT) and Tree of Thoughts (ToT) have brought structured output and hierarchical reasoning to LLMs, they operate within a linear or tree-shaped structure. This constraint can hinder the model on complex problem-solving tasks that require multi-dimensional reasoning and the combination of disparate pieces of information. Graph of Thoughts addresses this gap by introducing a graph-based structure for managing “LLM thoughts.” This allows much greater flexibility in how thoughts are stored, accessed, and manipulated during reasoning; for example, two independent partial solutions can be merged into a single new thought, which neither a chain nor a tree permits. With GoT, developers and researchers can tailor the prompting strategy to navigate this graph effectively, enabling LLMs to solve intricate problems in a more human-like manner.
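To make the chain/tree/graph distinction concrete, the following minimal Python sketch classifies a reasoning topology from its parent-to-child edges. It is our own illustration, not code from the GoT paper, and the function name `classify_topology` is hypothetical. It assumes an acyclic, single-rooted set of edges: a thought with more than one parent (for example, a merge of two partial solutions) is what separates a graph from a chain or a tree.

```python
from collections import defaultdict


def classify_topology(edges: list[tuple[str, str]]) -> str:
    """Classify thought dependencies given as (parent, child) edges.

    Assumes the edges are acyclic and share a single root (hypothetical helper).
    """
    in_degree: dict[str, int] = defaultdict(int)
    out_degree: dict[str, int] = defaultdict(int)
    for parent, child in edges:
        out_degree[parent] += 1
        in_degree[child] += 1
    if any(d > 1 for d in in_degree.values()):
        return "graph"  # some thought merges several parents (GoT-style aggregation)
    if any(d > 1 for d in out_degree.values()):
        return "tree"   # branching exploration, but one parent per thought (ToT-style)
    return "chain"      # a single linear sequence of thoughts (CoT-style)


print(classify_topology([("t0", "t1"), ("t1", "t2")]))  # chain
print(classify_topology([("t0", "t1"), ("t0", "t2")]))  # tree
print(classify_topology([("t0", "t1"), ("t0", "t2"), ("t1", "t3"), ("t2", "t3")]))  # graph
```

The check only looks at in- and out-degrees, so it is a rough heuristic; cycles or disconnected pieces would need extra handling.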

Graph of Thoughts operates on a simple yet powerful concept: it models the information produced by an LLM as a graph in which each vertex represents a unit of information, referred to as an “LLM thought.” The edges between these vertices signify the dependencies or relationships between thoughts. This graph-based approach allows for:

- Combining arbitrary LLM thoughts into synergistic outcomes

- Distilling the essence of whole networks of thoughts

- Enhancing thoughts using feedback loops
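The three operations above can be sketched as a small data structure. This is a hypothetical illustration, not the official GoT implementation: the `ThoughtGraph` class and its `add`, `aggregate`, and `refine` methods are names we made up, and the `combine` and `improve` callables stand in for what would be LLM prompts in practice.

```python
from dataclasses import dataclass, field
from typing import Callable, Optional


@dataclass
class Thought:
    content: str
    parents: list[int] = field(default_factory=list)  # incoming edges (dependencies)


class ThoughtGraph:
    """Hypothetical container: each vertex is a thought, each edge a dependency."""

    def __init__(self) -> None:
        self.thoughts: list[Thought] = []

    def add(self, content: str, parents: Optional[list[int]] = None) -> int:
        # Generate: store a new thought and return its vertex id.
        self.thoughts.append(Thought(content, list(parents or [])))
        return len(self.thoughts) - 1

    def aggregate(self, ids: list[int], combine: Callable[[list[str]], str]) -> int:
        # Combine several thoughts into one vertex with multiple parents,
        # something a chain or a tree cannot express.
        return self.add(combine([self.thoughts[i].content for i in ids]), parents=ids)

    def refine(self, tid: int, improve: Callable[[str], str]) -> int:
        # Feedback step: derive an improved version of an existing thought.
        return self.add(improve(self.thoughts[tid].content), parents=[tid])


g = ThoughtGraph()
a = g.add("apples are red")
b = g.add("bananas are yellow")
m = g.aggregate([a, b], combine=lambda texts: "; ".join(texts))
r = g.refine(m, improve=str.capitalize)
print(g.thoughts[r].content)  # Apples are red; bananas are yellow
print(g.thoughts[m].parents)  # [0, 1]
```

Keeping every intermediate thought, rather than overwriting it, is what lets later operations reach back to any earlier vertex in the graph.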

In comparison to existing paradigms like CoT and ToT, GoT offers a more flexible and efficient way to manage and manipulate the information generated by LLMs.

Figure 1: Comparison of Graph of Thoughts (GoT) to other prompting strategies (Image from paper)

To implement GoT, developers need to represent the problem-solving process as a graph, where each node or vertex represents a thought or a piece of information. Then, the relationships or dependencies between these thoughts are mapped as edges in the graph. This mapping allows for various operations like merging nodes to create more complex thoughts, or applying transformations to enhance the existing thoughts.
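As an illustration of this mapping, here is a sketch loosely modeled on the sorting task mentioned earlier: the input list is split into chunks, each chunk is sorted in its own branch, and sorted branches are merged pairwise until a single thought remains. The decomposition, the chunk size, and the functions `llm_sort`, `llm_merge`, `error_count`, and `got_sort` are all assumptions for illustration. The two `llm_*` functions are exact Python stand-ins for LLM calls; a real model could return imperfect results, which a score such as `error_count` would need to catch.

```python
import heapq


def llm_sort(chunk: list[int]) -> list[int]:
    # Placeholder for an LLM call that sorts a short list; here it is exact.
    return sorted(chunk)


def llm_merge(a: list[int], b: list[int]) -> list[int]:
    # Placeholder for an LLM call that merges two sorted lists (an aggregation).
    return list(heapq.merge(a, b))


def error_count(values: list[int]) -> int:
    # Score a thought: number of adjacent pairs out of order (0 means sorted).
    return sum(1 for x, y in zip(values, values[1:]) if x > y)


def got_sort(values: list[int], chunk_size: int = 4) -> list[int]:
    # Split: one branch per chunk of the input.
    chunks = [values[i:i + chunk_size] for i in range(0, len(values), chunk_size)]
    thoughts = [llm_sort(c) for c in chunks]  # independent branches
    # Aggregate pairwise until a single thought (the final answer) remains.
    while len(thoughts) > 1:
        merged = [llm_merge(thoughts[i], thoughts[i + 1])
                  for i in range(0, len(thoughts) - 1, 2)]
        if len(thoughts) % 2:
            merged.append(thoughts[-1])  # odd branch carries over to the next round
        thoughts = merged
    return thoughts[0] if thoughts else []


result = got_sort([9, 3, 7, 1, 8, 2, 6, 4, 5])
print(result, error_count(result))  # [1, 2, 3, 4, 5, 6, 7, 8, 9] 0
```

The merge steps are where the graph shape matters: each merged thought has two parents, so the overall structure is not a tree.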

One of the standout features of GoT is its extensibility, allowing it to adapt to a variety of tasks and domains. Unlike more rigid structures, the graph-based representation in GoT can be dynamically altered during the problem-solving process. This means that as an LLM generates new thoughts or gains additional insights, these can be seamlessly incorporated into the existing graph without requiring a complete overhaul.
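One way to picture this incremental growth is a dependency map to which new thoughts are added one vertex at a time. The sketch below is hypothetical (`deps` and `add_thought` are our names) and uses the standard library's `graphlib.TopologicalSorter` (Python 3.9+) to recompute a valid evaluation order after each change, without rebuilding the existing graph.

```python
from graphlib import TopologicalSorter

# Hypothetical dependency map: thought -> the thoughts it was derived from.
deps: dict[str, set[str]] = {
    "question": set(),
    "idea_a": {"question"},
    "idea_b": {"question"},
}


def add_thought(deps: dict[str, set[str]], name: str, parents: list[str]) -> None:
    # Incorporate a new thought by adding one vertex and its incoming edges;
    # existing vertices and edges are left untouched.
    if name in deps:
        raise ValueError(f"thought {name!r} already exists")
    missing = set(parents) - set(deps)
    if missing:
        raise KeyError(f"unknown parent thoughts: {sorted(missing)}")
    deps[name] = set(parents)


add_thought(deps, "insight_c", ["idea_a"])            # a new insight mid-run
add_thought(deps, "answer", ["idea_b", "insight_c"])  # merges two branches

order = list(TopologicalSorter(deps).static_order())
print(order)
```

Because a new thought may only depend on thoughts that already exist, this style of insertion cannot introduce a cycle, so `static_order()` will not raise `CycleError`.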

Moreover, GoT enables feedback loops, in which the model revisits and refines its earlier thoughts based on newly acquired information. This iterative process can significantly improve the quality of the model’s output, which makes GoT particularly well suited to complex tasks that require ongoing refinement and adaptation.
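A feedback loop can be sketched as repeated revision of a single thought, keeping each revision only if it scores better. In this hypothetical example, `refine_loop` is a generic helper written for illustration, `one_pass` (a single bubble-sort pass) stands in for an LLM prompt asking the model to fix its own ordering, and `errors` counts adjacent out-of-order pairs, with lower scores being better.

```python
from typing import Callable, TypeVar

T = TypeVar("T")


def refine_loop(thought: T, improve: Callable[[T], T], score: Callable[[T], int],
                max_rounds: int = 5) -> tuple[T, list[int]]:
    """Revisit a thought until its score reaches 0 or stops improving.

    Lower scores are better; returns the best thought and the score history.
    """
    best, best_score = thought, score(thought)
    history = [best_score]
    for _ in range(max_rounds):
        if best_score == 0:
            break  # nothing left to fix
        candidate = improve(best)
        candidate_score = score(candidate)
        history.append(candidate_score)
        if candidate_score >= best_score:
            break  # no improvement: stop revisiting
        best, best_score = candidate, candidate_score
    return best, history


def one_pass(xs: list[int]) -> list[int]:
    # Stand-in for an LLM "fix the ordering" prompt: one bubble-sort pass.
    xs = list(xs)
    for i in range(len(xs) - 1):
        if xs[i] > xs[i + 1]:
            xs[i], xs[i + 1] = xs[i + 1], xs[i]
    return xs


def errors(xs: list[int]) -> int:
    # Number of adjacent pairs that are out of order.
    return sum(1 for a, b in zip(xs, xs[1:]) if a > b)


best, history = refine_loop([4, 3, 2, 1], one_pass, errors)
print(best, history)  # [1, 2, 3, 4] [3, 2, 1, 0]
```

Stopping as soon as a revision fails to improve the score keeps the number of (in practice, paid) LLM calls bounded, in addition to the `max_rounds` cap.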

The introduction of GoT may mark a significant advancement in the field of LLMs and their application to complex problem-solving tasks. By adopting a graph-based approach to representing and manipulating the information generated by LLMs, GoT offers a more flexible and efficient form of reasoning. Its success in improving task performance and reducing computational costs makes it a promising framework for future research and applications. Developers and researchers may want to explore this paradigm as a way to unlock more of their LLMs’ problem-solving potential and to improve their prompting.

Matthew Mayo (@mattmayo13) holds a Master’s degree in computer science and a graduate diploma in data mining. As Editor-in-Chief of KDnuggets, Matthew aims to make complex data science concepts accessible. His professional interests include natural language processing, machine learning algorithms, and exploring emerging AI. He is driven by a mission to democratize knowledge in the data science community. Matthew has been coding since he was 6 years old.


- Source: https://www.kdnuggets.com/graph-of-thoughts-a-new-paradigm-for-elaborate-problem-solving-in-large-language-models?utm_source=rss&utm_medium=rss&utm_campaign=graph-of-thoughts-a-new-paradigm-for-elaborate-problem-solving-in-large-language-models
