This article was published as a part of the Data Science Blogathon

Introduction

TensorFlow (hereinafter TF) is a fairly young deep learning framework developed at Google Brain. For a long time it was developed internally as a closed system called DistBelief, but after a major refactoring it was released as open source on November 9, 2015. In a little over a year TF grew to version 1.0, gained integration with Keras, became much faster, and received support for mobile platforms. We will only consider the Python API, although it is not the only option: there are also interfaces for C++ and mobile platforms.

Installation

TF is installed in the standard way, via pip. There is one nuance: the installation steps differ for running on a CPU and on a GPU.

For the CPU, everything is simple: install the package called tensorflow from pip.

For the GPU, you need to:

- Check compatibility with your video card. Its CUDA Compute Capability must be 3.0 or higher.
- Install CUDA Toolkit version 8.
- Install cuDNN version 5.1.
- Install the tensorflow-gpu package from pip.

The documentation notes that earlier versions of the CUDA Toolkit and cuDNN are also supported, but it recommends the versions listed above.

The developers recommend installing TF in a separate environment created with virtualenv to avoid possible versioning and dependency issues.

Basic TF elements

With the help of “Hello, world” we will make sure that everything is installed correctly:

import tensorflow as tf  # import TF

hello = tf.constant('Hello, TensorFlow!')  # create a TF object

sess = tf.InteractiveSession()  # create a new session

print(sess.run(hello))  # the session "runs" the object

>>> b'Hello, TensorFlow!'

The first line imports TF. Importing the framework under the abbreviation tf has already become a convention. The same piece of code appears in the documentation and lets you make sure that everything was installed correctly.

Computation graph

Working with TF is built around constructing and executing a computation graph. A computation graph is a structure that describes how computations will be performed and in what order. Programs are therefore naturally split into two parts: building the computation graph, and executing computations in the structures that were built.

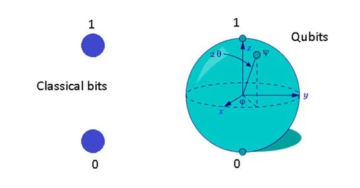
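To make the two phases concrete, here is a toy sketch in plain Python. It is not TensorFlow code: the names Node, Placeholder and run are invented for this illustration, and real TF graphs are far richer. It only shows the idea of first describing a computation and then executing it with concrete inputs.

```python
# A toy "define, then run" graph in plain Python (not TensorFlow code).

class Node:
    """A graph node: an operation applied to the outputs of other nodes."""

    def __init__(self, op, inputs=()):
        self.op = op            # function that computes this node's value
        self.inputs = inputs    # nodes whose values are passed to op

    def __add__(self, other):
        return Node(lambda a, b: a + b, (self, other))

    def __mul__(self, other):
        return Node(lambda a, b: a * b, (self, other))


class Placeholder(Node):
    """A node with no value of its own; the value is supplied at run time."""

    def __init__(self):
        super().__init__(op=None)


def run(node, feed_dict):
    """Evaluate a node by recursively evaluating its inputs."""
    if isinstance(node, Placeholder):
        return feed_dict[node]
    args = [run(inp, feed_dict) for inp in node.inputs]
    return node.op(*args)


# Phase 1: build the graph. Nothing is computed here.
a = Placeholder()
b = Placeholder()
y = a * b + a

# Phase 2: execute the graph with concrete values.
result = run(y, {a: 3.0, b: 4.0})
print(result)  # 15.0
```

The same graph object y can be run again with different inputs, which is the property TF relies on when it feeds batch after batch into one graph.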

In TF, a graph consists of placeholders, variables, and operations. From these elements you assemble a graph in which tensors are computed. A tensor is a multidimensional array: it can be a single number, a feature vector from the problem being solved, an image, or a whole batch of object descriptions or images. Instead of one object, we can pass an array of objects to the graph, and an array of responses will be computed for it. TF works with tensors much as NumPy works with arrays, where functions let you specify the axis along which a computation is performed.
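The range of things a tensor can be is easy to see with NumPy arrays, which behave analogously. This is only a NumPy illustration, and the sizes (10 features, 28×28 images, a batch of 64) are arbitrary values picked for the example.

```python
import numpy as np

scalar = np.float32(3.0)                            # a single number, rank 0
features = np.zeros(10, dtype=np.float32)           # one feature vector, rank 1
image = np.zeros((28, 28), dtype=np.float32)        # one grayscale image, rank 2
batch = np.zeros((64, 28, 28), dtype=np.float32)    # 64 such images, rank 3

for name, t in [("scalar", scalar), ("features", features),
                ("image", image), ("batch", batch)]:
    print(name, np.ndim(t), np.shape(t))
```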

Sessions

Computational graphs are executed in sessions. The session object (tf.Session) hides the context of the graph execution – the necessary resources, auxiliary classes, address spaces.

There are two types of sessions: regular ones, implemented in tf.Session, and interactive ones, implemented in tf.InteractiveSession. The difference is that an interactive session is better suited to running in the console and immediately installs itself as the default session. The main effect is that the session object does not need to be passed to evaluation functions as a parameter. In the examples below, I assume that the interactive session declared in the first example is still running, and when I need to access the session I refer to the object sess.

Later in the post there will be graph images generated by TensorBoard, a utility built into TF. The notation in them is as follows:

| Variable | Operation | Auxiliary result |
| --- | --- | --- |
| A graph node usually contains data. | Does something with variables. This also includes placeholders that substitute values into the graph. | Any caching and side calculations like gradients are usually referred to as a link to a separate part of the graph. |

Tensors, Operations, and Variables

Let’s create, for example, a tensor filled with zeros.

zeros_tensor = tf.zeros([3, 3])

In general, the TF API resembles NumPy in many ways, and tf.zeros() is far from the only function with a direct NumPy analog. To see the value of a tensor, you need to evaluate it. Graph execution is covered in more detail a little below; for now, we will simply print both the value of the tensor and the tensor object itself.

print(zeros_tensor.eval())

print(zeros_tensor)

>>> [[ 0. 0. 0.] [ 0. 0. 0.] [ 0. 0. 0.]]

>>> Tensor("zeros_1:0", shape=(3, 3), dtype=float32)

The difference between the lines is that in the first line we calculate the tensor, and in the second line we just print the representation of the object.

The description of the tensor shows several important things:

- Tensors have names. Ours is called zeros_1:0.
- Tensors have a shape, which is similar to the shape of a NumPy array.
- Tensors are typed, and the types are defined by the library.

Various operations can be performed on tensors:

a = tf.truncated_normal([2, 2])

b = tf.fill([2, 2], 0.5)

print(sess.run(a + b))

print(sess.run(a - b))

print(sess.run(a * b))

print(sess.run(tf.matmul(a, b)))

>>> [[-1.12130964 -1.02217746] [ 0.85684788 0.5425666 ]]

>>> [[ 0.35249496 0.96118248] [-1.55395389 -1.18111515]]

>>> [[-0.06559008 -0.11100233] [ 0.51474923 -0.27813852]]

>>> [[-0.16202734 -0.16202734] [-0.8864761 -0.8864761 ]]

The example above uses sess.run, the method that executes graph operations in a session. The first tensor is created with tf.truncated_normal, which samples from a normal distribution but discards everything that falls more than two standard deviations from the mean. This is very typical of TF: most of the popular variants of an operation are already implemented, so before reinventing the wheel it is worth looking at the documentation. Note that a is sampled anew on every sess.run call, which is why the four results above are not consistent with one another. The second tensor is a 2×2 array filled with the value 0.5, similar to what NumPy's array creation functions produce.
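To show what "truncated" means here, the following plain-Python sketch redraws any sample that lands more than two standard deviations from the mean. It is only an illustration of the idea, not TF's implementation; the helper name truncated_normal and the seed are choices made for this example.

```python
import random

def truncated_normal(mean=0.0, stddev=1.0, rng=random):
    """Draw from N(mean, stddev^2), redrawing values beyond 2 stddev."""
    while True:
        value = rng.gauss(mean, stddev)
        if abs(value - mean) <= 2.0 * stddev:
            return value

rng = random.Random(0)  # fixed seed so the run is repeatable
a = [[truncated_normal(rng=rng) for _ in range(2)] for _ in range(2)]
b = [[0.5] * 2 for _ in range(2)]   # plain-Python analog of tf.fill([2, 2], 0.5)

# Element-wise sum, the analog of a + b on tensors.
total = [[a[i][j] + b[i][j] for j in range(2)] for i in range(2)]
print(total)
```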

Now let’s create a tensor-based variable:

v = tf.Variable(zeros_tensor)

A variable takes part in computations as a node of the computation graph, retains its state, and needs to be initialized. If we drop the first line of the following example, TF will throw an exception.

sess.run(v.initializer)

v.eval()

>>> array([[ 0., 0., 0.], [ 0., 0., 0.], [ 0., 0., 0.]], dtype=float32)

Operations on variables create a computational graph that can then be executed. There are also placeholders – objects that parameterize the graph and mark places for substitution of external values. As it is written in the official documentation, a placeholder is a promise to substitute a value later. Let’s create a placeholder and assign it a data type and size:

x = tf.placeholder(tf.float32, shape=(4, 4))

Another example of use. Here two placeholders serve as the inputs of a multiplication node:

a = tf.placeholder("float")

b = tf.placeholder("float")

y = tf.multiply(a, b)

print(sess.run(y, feed_dict={a:100, b:500}))

>>> 50000.0

The simplest calculations

Let’s create and evaluate some expressions as an example.

ans = tf.placeholder(tf.float32)

f = 1 + 2 * ans + tf.pow(ans, 2)

sess.run(f, feed_dict={ans: 10})

>>> 121.0

And the computation graph:

The x and y labels on the operations in this diagram are additional parameters of those operations. They could have been edges of the graph, but since we substituted the scalar values 1 and 2 into f, they are just the graph's notation for numbers. In this example we create a placeholder, build an expression graph on top of it, and then evaluate the graph in the context of the current session. I did not specify a shape in the placeholder parameters, which means that tensors of any size can be fed in. The only thing that must be specified is the tensor type. When an expression is evaluated inside the session, its inputs are passed through feed_dict, a dictionary with everything the computation needs.

For example, a sigmoid:

import numpy as np

x = tf.placeholder(dtype=tf.float32)

sigma = 1 / (1 + tf.exp(-x))

sigma.eval(feed_dict={x: np.linspace(-5, 5)})

And here is the graph for it.

One detail in the snippet that evaluates the function distinguishes this example from the previous ones: instead of a single scalar value, we pass an entire array to the placeholder. TF processes all the values of the array together, within a single tensor (remember that array == tensor). In exactly the same way, we can feed objects to the graph in whole batches, or supply a whole picture to a neural network.
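The same "one expression, any number of inputs" behavior can be tried without TF. The sketch below is a NumPy analog of the sigmoid graph, not the TF code itself: the function is written once and works unchanged for a scalar and for the 50 points that np.linspace produces by default.

```python
import numpy as np

def sigmoid(x):
    """Element-wise logistic function; works for scalars and arrays alike."""
    return 1.0 / (1.0 + np.exp(-x))

single = sigmoid(0.0)                     # one value in, one value out
batch = sigmoid(np.linspace(-5, 5))       # 50 values in, 50 values out

print(single)        # 0.5
print(batch.shape)   # (50,)
```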

In general, working with tensors is similar to working with arrays in NumPy, but there are some differences. When we want to reduce the rank of a tensor by combining its values along a certain dimension, we use the functions whose names start with reduce_, such as tf.reduce_mean.
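As a rough guide to what such reductions do, here is the NumPy counterpart. The TF calls tf.reduce_mean and tf.reduce_sum take an axis argument in the same spirit; the array below is just an example.

```python
import numpy as np

t = np.array([[1.0, 2.0, 3.0],
              [4.0, 5.0, 6.0]])   # shape (2, 3)

overall = t.mean()           # 3.5: reduce over all elements, result is a scalar
per_column = t.mean(axis=0)  # values 2.5, 3.5, 4.5: axis 0 is removed, shape (3,)
per_row = t.sum(axis=1)      # values 6 and 15: axis 1 is removed, shape (2,)

print(overall, per_column.shape, per_row.shape)
```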

Compared with the Theano API, TF has no division into vectors and matrices. Instead, you have to keep track of the dimensions of the tensors in the graph yourself, and there is a shape inference mechanism that lets you obtain the dimensions even before runtime.

Machine learning

To begin with, let's look at classical linear regression, which has already been discussed more than once, but train it with gradient descent.

Where can we go without this picture?

We will start with plain linear regression and then add polynomial features.

Let's generate the synthetic data:

x = np.linspace(0, 10, 1000)

y = np.sin(x) + np.random.normal(size=len(x))

They will look something like this:

I also split the sample into training and control (test) parts in a 70/30 proportion, but I will leave this and some other routine details to the full source, the link to which is a little below.
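The split itself is not shown in the article, so the following is only one plausible way to do it with NumPy. The variable names X_Train, Y_Train, X_Test and Y_Test match those used in the training loop later on; the shuffling approach and the seed are assumptions.

```python
import numpy as np

rng = np.random.RandomState(42)   # fixed seed, chosen arbitrarily

x = np.linspace(0, 10, 1000)
y = np.sin(x) + rng.normal(size=len(x))

# Shuffle the indices, then cut them at the 70% mark.
indices = rng.permutation(len(x))
n_train = len(x) * 7 // 10
train_idx, test_idx = indices[:n_train], indices[n_train:]

X_Train, Y_Train = x[train_idx], y[train_idx]
X_Test, Y_Test = x[test_idx], y[test_idx]

print(len(X_Train), len(X_Test))  # 700 300
```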

Let’s build a simple linear regression first.

x_ = tf.placeholder(name="input", shape=[None, 1], dtype=tf.float32)

y_ = tf.placeholder(name="output", shape=[None, 1], dtype=tf.float32)

model_output = tf.Variable(tf.random_normal([1]), name='bias') + tf.Variable(tf.random_normal([1]), name='k') * x_

Here I create two placeholders, for the feature and for the response, and the formula of the model itself.

A nuance: the shape parameter of the placeholder contains None. Such a shape means that the placeholder accepts two-dimensional tensors, but along one of the axes the size is not defined and can be anything. This is done so that values can be fed to the graph in whole batches at once. Such dimensions are called dynamic; TF works out the actual dimensions of the associated elements at runtime.

The placeholder for the feature is used in the formula, but I will substitute the placeholder for the answer in the loss function :

loss = tf.reduce_mean(tf.pow(y_ - model_output, 2))

TF implements a dozen optimization methods. We will use the classic gradient descent, specifying the learning rate in the parameters.

gd = tf.train.GradientDescentOptimizer(0.001)

train_step = gd.minimize(loss)

Initialization of variables – it is necessary for further calculations:

sess.run(tf.global_variables_initializer())

Now the model can finally be trained. I will run 100 training epochs on the training part of the sample, and after each epoch I will evaluate the model on the held-out part.

n_epochs = 100

train_errors = []

test_errors = []

for i in tqdm.tqdm(range(n_epochs)):  # 100 epochs

    # one training step plus the loss on the training set
    _, train_err = sess.run([train_step, loss], feed_dict={x_: X_Train.reshape((len(X_Train), 1)), y_: Y_Train.reshape((len(Y_Train), 1))})

    train_errors.append(train_err)

    # loss on the held-out set
    test_errors.append(sess.run(loss, feed_dict={x_: X_Test.reshape((len(X_Test), 1)), y_: Y_Test.reshape((len(Y_Test), 1))}))

The first sess.run call executes both operations, train_step and loss, so it performs a training step and also evaluates the error on the training set, which is in effect an estimate of how well we have memorized the sample. The second sess.run call computes the loss on the test sample. Through the feed_dict parameter I pass values for the placeholders into the graph, reshaping the data arrays so that they match the placeholder dimensions. Where the placeholder shape contains None, any number of rows can be passed. Tensors with such undefined dimensions are called dynamic, and here I use them to feed batches of examples to the graph for training.
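For readers who want to see what the optimizer does under the hood, here is a hand-written NumPy sketch of the same loop. It is not what TF executes internally, only plain gradient descent on the mean squared error of the line k * x + bias, with the gradients derived by hand. The seed and the way the data is split are assumptions.

```python
import numpy as np

rng = np.random.RandomState(0)
x = np.linspace(0, 10, 1000)
y = np.sin(x) + rng.normal(size=len(x))

# 70/30 split, as in the text.
idx = rng.permutation(len(x))
train, test = idx[:700], idx[700:]

def mse(k, bias, xs, ys):
    """Mean squared error of the line k * x + bias."""
    return np.mean((ys - (k * xs + bias)) ** 2)

k, bias = rng.normal(), rng.normal()   # random start, like tf.random_normal
learning_rate = 0.001
train_errors, test_errors = [], []

for epoch in range(100):
    residual = (k * x[train] + bias) - y[train]
    # Gradients of the mean squared error with respect to k and bias.
    grad_k = 2.0 * np.mean(residual * x[train])
    grad_bias = 2.0 * np.mean(residual)
    k -= learning_rate * grad_k
    bias -= learning_rate * grad_bias
    train_errors.append(mse(k, bias, x[train], y[train]))
    test_errors.append(mse(k, bias, x[test], y[test]))

print(train_errors[0], train_errors[-1])
```

Each pass uses the whole training set at once, just as the TF loop feeds the full X_Train array on every step.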

The resulting learning dynamics:

This graph contains auxiliary variables with gradients and initialization operations; they are placed in a separate block.

And here are the results of calculating the model:

I calculated the values for the graph in this way:

sess.run(model_output, feed_dict={x_:x.reshape((len(x), 1))})

Here I feed the graph a value only for the placeholder x_; the rest is simply not needed to evaluate the expression model_output.

Polynomial regression

Let’s try to diversify the regression with polynomial features, regularization, and changing the learning rate of the model.

When generating the dataset, add a number of powers of the feature and normalize the features using PolynomialFeatures and StandardScaler from the scikit-learn library. The first object creates as many polynomial features as we want, and the second normalizes them.
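The preprocessing code is not included in the article. As a rough stand-in, the sketch below does the equivalent with NumPy alone: it raises x to successive powers and standardizes each column. It is not the scikit-learn code the author ran, and unlike PolynomialFeatures with default settings it does not emit a constant column.

```python
import numpy as np

order = 26
x = np.linspace(0, 10, 1000)

# One column per power: x^1, x^2, ..., x^order.
powers = np.arange(1, order + 1)
features = x[:, np.newaxis] ** powers            # shape (1000, 26)

# Standardize every column to zero mean and unit variance.
mean = features.mean(axis=0)
std = features.std(axis=0)
normalized = (features - mean) / std

print(normalized.shape)   # (1000, 26)
```

Without the normalization step the highest powers reach values around 10^26, which would make gradient descent with a single learning rate impractical.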

To switch to polynomial regression, replace just a few lines in the calculation graph:

order = 26

x_ = tf.placeholder(name="input", shape=[None, order], dtype=tf.float32)

y_ = tf.placeholder(name="output", shape=[None, 1], dtype=tf.float32)

w = tf.Variable(tf.random_normal([order, 1]), name='weights')

model_output = tf.matmul(x_, w)

In effect, the model now computes a weighted sum of the polynomial features, the matrix product of x_ and w. Obviously, with this many features there is a real danger of overfitting, so let's add regularization penalties on the weights. Add the penalties to the loss function (loss in the examples) as additional terms, and we get almost ElasticNet from sklearn.

loss = tf.reduce_mean(tf.square(y_ - model_output)) + 0.85* tf.nn.l2_loss(w) + 0.15* tf.reduce_mean(tf.abs(w))

For the most popular regularization, L2, there is a dedicated function l2_loss, but L1 regularization, which performs feature selection, has to be implemented by hand; here it is the mean absolute value of the weights.
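To check the arithmetic of this loss by hand, here is a NumPy version of the same expression. It relies on the fact that tf.nn.l2_loss returns half the sum of squares of its argument; the function names and the tiny example arrays are made up for this illustration.

```python
import numpy as np

def l2_loss(w):
    """Same quantity as tf.nn.l2_loss: half the sum of squares."""
    return np.sum(w ** 2) / 2.0

def regularized_loss(y_true, y_pred, w, l2_weight=0.85, l1_weight=0.15):
    """Mean squared error plus the L2 and L1 penalties from the text."""
    mse = np.mean((y_true - y_pred) ** 2)
    return mse + l2_weight * l2_loss(w) + l1_weight * np.mean(np.abs(w))

w = np.array([[1.0], [-2.0]])
y_true = np.array([[1.0], [0.0]])
y_pred = np.array([[0.0], [0.0]])

# 0.5 (MSE) + 0.85 * 2.5 (L2) + 0.15 * 1.5 (L1), approximately 2.85
value = regularized_loss(y_true, y_pred, w)
print(value)
```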

For the sake of example, I will add one more significant change, one that affects the learning rate. Quite often, when training heavy neural networks, this is a necessary measure to avoid problems with training and get an acceptable result. The idea is very simple: gradually lower the step size as training progresses, avoiding big trouble.

Instead of a constant learning rate we will use exponential decay, which I took straight from the documentation:

learning_rate = tf.train.exponential_decay(starter_learning_rate, global_step, 100000, 0.96, staircase=True)

The function hides the following formula:

decayed_learning_rate = learning_rate * decay_rate ^ (global_step / decay_steps)

In our example decay_steps is 100000 and decay_rate is 0.96.
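The decay schedule is easy to reproduce in plain Python. The sketch below follows the formula given in the TF documentation, learning_rate * decay_rate ^ (global_step / decay_steps), with integer division when staircase is enabled. The starting rate of 0.1 is a placeholder value, since the article does not state it.

```python
def exponential_decay(learning_rate, global_step, decay_steps,
                      decay_rate, staircase=False):
    """Return learning_rate * decay_rate ** (global_step / decay_steps)."""
    if staircase:
        # Integer division: the rate drops in discrete steps.
        exponent = global_step // decay_steps
    else:
        exponent = global_step / decay_steps
    return learning_rate * decay_rate ** exponent

starter_learning_rate = 0.1   # hypothetical value; not given in the text

for step in (0, 50000, 100000, 250000):
    smooth = exponential_decay(starter_learning_rate, step, 100000, 0.96)
    stepped = exponential_decay(starter_learning_rate, step, 100000, 0.96,
                                staircase=True)
    print(step, smooth, stepped)
```

With decay_steps at 100000, the staircase variate stays at the starting rate for the first 100000 steps, so over a short run of 100 epochs the schedule has practically no effect unless decay_steps is lowered.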

We get the following rates of reducing errors in training and control:

In addition to exponential decay, there are other functions that allow you to reduce the learning rate, and of course, nothing prevents you from creating another function to fit your needs.

Saving and loading graphs

We have a model, and it would be nice to save it. The API has a special serializer object that does two things:

- Saves the current graph, its state, and variable values to a file.
- Reads all of the same back from a file.

All you need to do is create this object:

saver = tf.train.Saver()

The state of the current session is saved using the method save:

saver.save(sess, "checkpoint_dir/model.ckpt")

By convention, the saved states of a model are called checkpoints, hence the names of the folders and file extensions. Recovery is performed using the method restore:

ckpt = tf.train.get_checkpoint_state("checkpoint_dir")

if ckpt and ckpt.model_checkpoint_path:

    print(ckpt.model_checkpoint_path)

    saver.restore(sess, ckpt.model_checkpoint_path)

First, using a special function, we get the state of the checkpoint (if there is no saved model in the target directory, the function returns None). By default, the function looks for a file named checkpoint, but this behavior can be changed with a parameter. After that, the saver restores the state of the graph.

TensorBoard

An extremely useful part of TF is its web dashboard, which collects statistics from dumps and logs and lets you observe what actually happens during the calculations. It is very convenient that the dashboard runs on a web server: for example, by running TensorBoard on a remote machine in the cloud, you can watch what is happening from your own browser window.

TensorBoard is able to:

- Draw the computation graph. The graph is worth looking at, at least as a self-check, to make sure that exactly what was planned has been assembled and computed, and that no errors were made during coding.
- Show statistics on variables. You can collect any statistics at all.
- Analyze multidimensional data (for example, embeddings). For this the dashboard has built-in PCA and t-SNE, with which you can try to view the data in 2 and 3 dimensions.
- Build histograms. You can build histograms of the distributions of layer outputs and of the behavior of variables.

The other side of the coin is that for statistics to get into the dashboard, they must be saved to logs (in protobuf format) using a special API. The API is not very complex and is grouped under tf.summary.

When using TensorBoard, it is also important not to forget the name parameter of variables. The name assigned to a variable is then used to draw the graph, to select it in the dashboard user interface, and in general everywhere. For small graphs this is not critical, but as the problem grows more complex, it can become hard to understand what is going on.

There are several types of functions that store variable data in different ways:

tf.summary.histogram("layer_output", w_h)

This function collects a histogram of the layer output, which lets you roughly estimate the dynamics of changes during training. The function tf.summary.scalar("accuracy", learning_rate) stores a single number. You can also save audio and pictures.

To save the logs you need a little more. First, create a FileWriter to write the file:

writer = tf.summary.FileWriter("./logs/nn_logs", sess.graph) # for 1.0

Then combine all the statistics into one object:

merged = tf.summary.merge_all()

Now pass the merged object to the session for execution, and then add the new data received from the session to the FileWriter using the add_summary method:

summary, op_res = sess.run([merged, op], feed_dict={X: X_train, Y: y_train, p_keep_input: 1.0, p_keep_hidden: 1.0})

writer.add_summary(summary, i)

However, for the simple saving of the graph, the following code is enough:

merged = tf.summary.merge_all(key='summaries')

if not os.path.exists('tensorboard_logs/'): os.makedirs('tensorboard_logs/')

my_writer = tf.summary.FileWriter('tensorboard_logs/', sess.graph)

And a nuance: by default, TensorBoard is available locally at 127.0.1.1:6006. Hopefully this note saves readers a few seconds of time and a few neurons.

Multilayer perceptron

Let us analyze the canonical example of learning the XOR function, which a linear model cannot learn because the feature space is not linearly separable.

Multilayer networks learn the function because they implicitly transform the feature space into a separable one or (depending on the implementation) make a nonlinear partition of this space. We will implement the first option: a two-layer perceptron with non-linear activation of the layers. The first layer performs a non-linear transformation, and the second layer is almost a linear regression that works on the transformed feature space.

We will use the relu function as a nonlinear element.
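To see why one hidden ReLU layer is enough, consider this small NumPy sketch with hand-picked weights. Nothing here is trained, and it is not the article's network: it uses two hidden units instead of fifteen and leaves the output layer linear. It only demonstrates that a ReLU layer can map the four XOR inputs into a space where a linear layer gives the right answers.

```python
import numpy as np

def relu(z):
    return np.maximum(z, 0.0)

a = np.array([[0, 0], [0, 1], [1, 0], [1, 1]], dtype=np.float32)

# Hand-picked (not trained) weights for a 2-unit hidden layer.
w1 = np.array([[1.0, 1.0],
               [1.0, 1.0]], dtype=np.float32)
b1 = np.array([0.0, -1.0], dtype=np.float32)
w2 = np.array([[1.0], [-2.0]], dtype=np.float32)

hidden = relu(a @ w1 + b1)     # non-linear map into a new feature space
output = hidden @ w2           # plain linear layer on top of it

print(output.ravel())          # XOR of each input pair: 0, 1, 1, 0
```

The first hidden unit counts how many inputs are on, and the second fires only when both are on; subtracting twice the second from the first yields XOR.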

Let’s define the structure of the network:

x_ = tf.placeholder(name="input", shape=[None, 2], dtype=tf.float32)

y_ = tf.placeholder(name="output", shape=[None, 1], dtype=tf.float32)

hidden_neurons = 15

w1 = tf.Variable(tf.random_uniform(shape=[2, hidden_neurons]))

b1 = tf.Variable(tf.constant(value=0.0, shape=[hidden_neurons], dtype=tf.float32))

layer1 = tf.nn.relu(tf.add(tf.matmul(x_, w1), b1))

w2 = tf.Variable(tf.random_uniform(shape=[hidden_neurons, 1]))

b2 = tf.Variable(tf.constant(value=0.0, shape=[1], dtype=tf.float32))

nn_output = tf.nn.relu(tf.add(tf.matmul(layer1, w2), b2))

Unlike Keras and other higher-level libraries, TF, like Theano, assumes a detailed definition of each layer as a collection of some arithmetic operations. This is not true for all types of layers, for example, convolutional and dropout layers are defined by one function, while an ordinary fully connected layer is the declaration of not only variables for weights and shifts, but also the operations themselves (multiplying weights with the output of the previous layer, adding shift, application of the activation function).

Of course, quite often it all turns into a similar function:

def fully_connected(input_layer, weights, biases):

    layer = tf.add(tf.matmul(input_layer, weights), biases)

    return tf.nn.relu(layer)

At the same time, in my experience it is more convenient to declare and initialize variables outside such a function: sometimes you need to use them elsewhere in the graph (a typical example is Siamese neural networks with shared weights), or you simply want access to them for logging to a file and displaying current values when, for some reason, you don't want to use TensorBoard.

We use an elementary loss function:

gd = tf.train.GradientDescentOptimizer(0.001)

loss = tf.reduce_mean(tf.square(nn_output - y_))

train_step = gd.minimize(loss)

and train:

a = np.array([[0, 0], [0, 1], [1, 0], [1, 1]])

b = np.array([[0], [1], [1], [0]])

for _ in range(20000):

    sess.run(train_step, feed_dict={x_: a, y_: b})

The resulting graph:

In comparison with regression, practically nothing has changed: the same learning process, the same loss function. The only part of the code that has changed a lot is the code for constructing the computational graph. It got to the point that I have accumulated a set of scripts for specific tasks, in which I only change the data feed and the calculation graph.

Of course, there is no validation on a held-out sample in this example. You can verify that the network works correctly by computing the output of the neural network in the graph:

sess.run(nn_output, feed_dict={x_: a})

In more complex models, of course, you would add validation on a held-out sample, quality tracking during training, and the methods built into TF for feeding data into the graph.

Resource management

Quite often the world is unfair, and a task may not fit entirely on one device. Or management bought just one Tesla, and the developers periodically clash over the busy card. TF has mechanisms for controlling computation in such cases. Within the framework, devices are referred to as "/cpu:0", "/gpu:0", and so on. The simplest thing you can do is specify where exactly a given variable will "live":

with tf.device('/cpu:0'):

    a = ...

In this example, the variable a will be placed on the CPU.

You can also pass a configuration object to the session, which lets you change how graph computations are carried out. It looks like this:

cfg = tf.ConfigProto()

sess = tf.Session(config=cfg)

In the config, first of all, you can enable the parameter log_device_placement in order to understand which computing device the calculation of this or that part of the graph went to.

Let’s say the development team agrees to limit GPU memory consumption. The code below does that:

gpu_opts = tf.GPUOptions(per_process_gpu_memory_fraction=0.25)

sess = tf.Session(config=tf.ConfigProto(gpu_options=gpu_opts))

In this configuration the session will not consume more than a quarter of the GPU memory, which means that several more models can be computed at the same time. You can also force a model to be computed on the CPU, but the easiest way is to enable the allow_soft_placement parameter so that TF solves these problems itself. For this part of the API, the documentation is still quite fragmentary, and some links lead directly to the source code of the configuration classes on GitHub. Some of the properties are marked as obsolete and others as experimental, so you need to be careful here.

Conclusion

In literally a year and a half TF has grown so much that separate reviews are warranted for convolutional and recurrent networks, reinforcement learning, and the application of the framework to various tasks.

The media shown in this article are not owned by Analytics Vidhya and are used at the Author’s discretion.


Source: https://www.analyticsvidhya.com/blog/2021/08/tensorflow-an-impressive-deep-learning-library/
